Using AI tools for coding: good or bad?

Explores the balanced use of AI coding tools like GitHub Copilot, discussing benefits, risks of hallucinations, and best practices for developers.

A simple explanation of Retrieval-Augmented Generation (RAG), covering its core components: LLMs, context, and vector databases.
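The three components named above fit together roughly as follows: embed the question, fetch the closest stored passages, and place them in the prompt as context for the LLM. This is a minimal sketch, assuming hand-written 3-d vectors in place of a real embedding model, an in-memory list standing in for the vector database, and a hypothetical `build_prompt` helper whose output would be sent to an LLM. None of these names come from the original post.

```python
import math

# Toy "vector database": (text, embedding) pairs. A real system would get the
# embeddings from an embedding model; these 3-d vectors are made up.
DOCUMENTS = [
    ("Copilot suggests code completions in the editor.", [0.9, 0.1, 0.0]),
    ("SageMaker hosts models behind managed endpoints.", [0.1, 0.9, 0.1]),
    ("LoRA trains small low-rank adapter matrices.", [0.0, 0.2, 0.9]),
]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_embedding, k=1):
    """Return the texts of the k stored documents most similar to the query."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc: cosine_similarity(query_embedding, doc[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question, query_embedding, k=1):
    """Hypothetical helper: put the retrieved context in front of the question."""
    context = "\n".join(retrieve(query_embedding, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How does LoRA save memory?", [0.0, 0.1, 1.0]))
```

The LLM itself is left out on purpose: retrieval only decides which text ends up in the context window, and a production setup would swap the list and the linear scan for a vector database's nearest-neighbour search.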

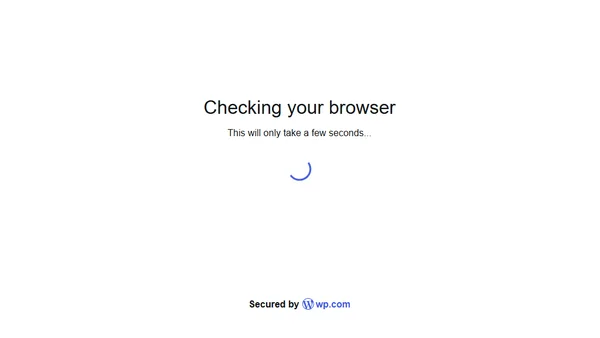

Explores adversarial attacks and jailbreak prompts that can make large language models produce unsafe or undesired outputs, bypassing safety measures.

Explores building enterprise applications using Azure OpenAI and Microsoft's data platform for secure, integrated AI solutions.

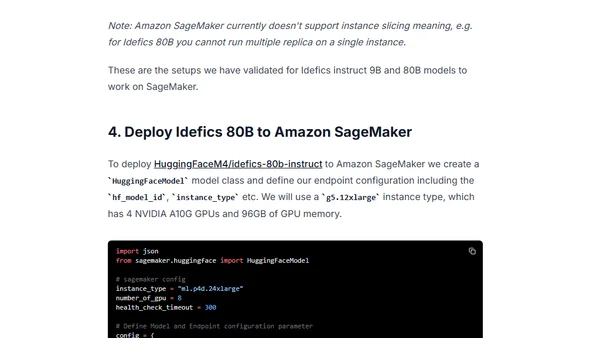

A technical guide on deploying Hugging Face's IDEFICS visual language models (9B and 80B parameters) to Amazon SageMaker using the Hugging Face LLM Deep Learning Container (DLC).

A benchmark analysis of deploying Meta's Llama 2 models on Amazon SageMaker using Hugging Face's LLM Inference Container, evaluating cost, latency, and throughput.

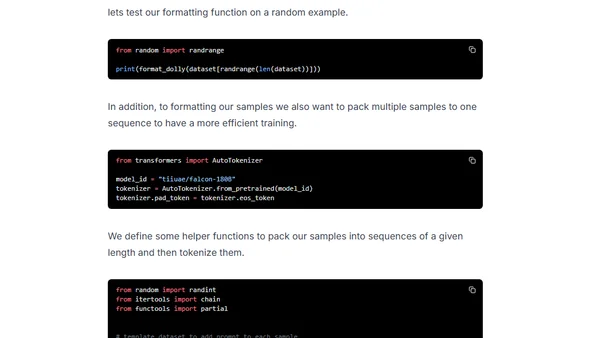

A technical guide on fine-tuning the massive Falcon 180B language model using DeepSpeed ZeRO, LoRA, and Flash Attention for efficient training.

An introduction to Semantic Kernel's Planner, a tool for automatically generating and executing complex AI tasks using plugins and natural language goals.

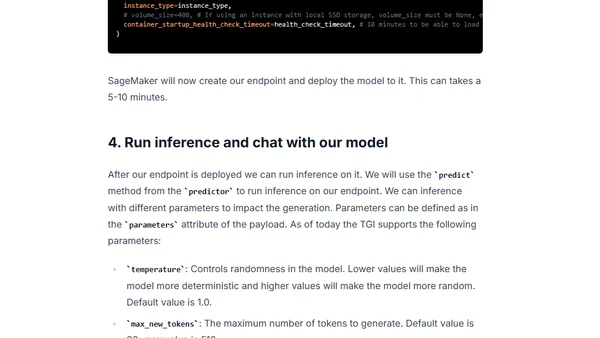

Guide to deploying open-source LLMs like BLOOM and Open Assistant to Amazon SageMaker using Hugging Face's new LLM Inference Container.

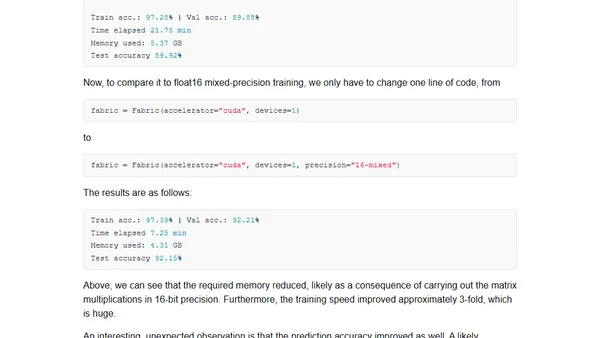

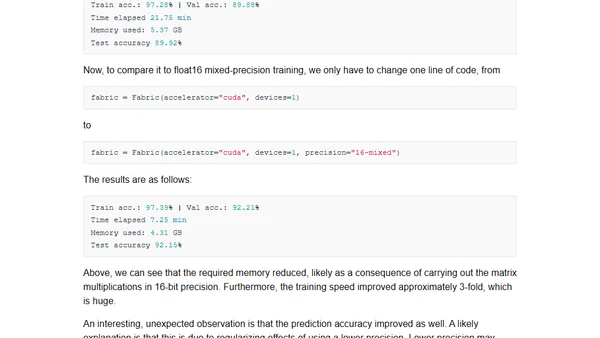

Explores how mixed-precision techniques can speed up large language model training and inference by up to 3x and reduce memory use without losing accuracy.
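Mixed precision does most arithmetic in a 16-bit format while keeping float32 master weights. The stdlib-only sketch below assumes the 16-bit format is float16 (not bfloat16) and emulates it with Python's `struct` half-precision code `"e"`. It shows the two effects that make float16 tricky, lost precision and tiny gradients underflowing to zero, and how loss scaling recovers the latter. The scale factor of 2**16 is an arbitrary illustrative choice, not a value from the post.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision (float16) and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# 1) float16 keeps only about 3 significant decimal digits.
print(0.1, "->", to_fp16(0.1))

# 2) Tiny gradients underflow to zero in float16 ...
grad = 1e-8
print("unscaled fp16 gradient:", to_fp16(grad))  # underflows to 0.0

# 3) ... unless the loss (and therefore every gradient) is multiplied by a
# scale factor before the 16-bit backward pass and divided out again in higher
# precision before the optimizer updates the float32 master weights.
LOSS_SCALE = 2.0 ** 16
scaled = to_fp16(grad * LOSS_SCALE)
recovered = scaled / LOSS_SCALE
print("scaled, then unscaled:", recovered)
```

PyTorch automates step 3 with `torch.cuda.amp.GradScaler`, which also lowers the scale when it detects overflowing gradients.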

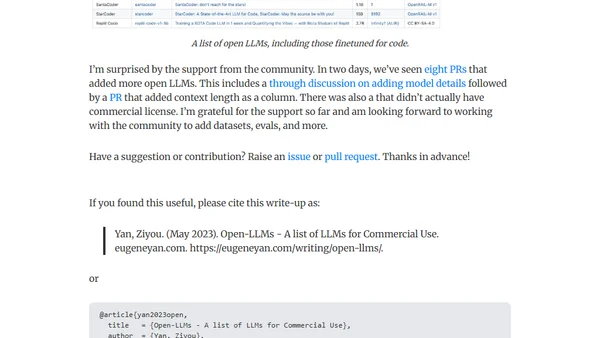

A curated list of open-source Large Language Models (LLMs) available for commercial use, including community-contributed updates and details.

A technical tutorial on fine-tuning a 20B+ parameter LLM using PyTorch FSDP and Hugging Face on Amazon SageMaker's multi-GPU infrastructure.

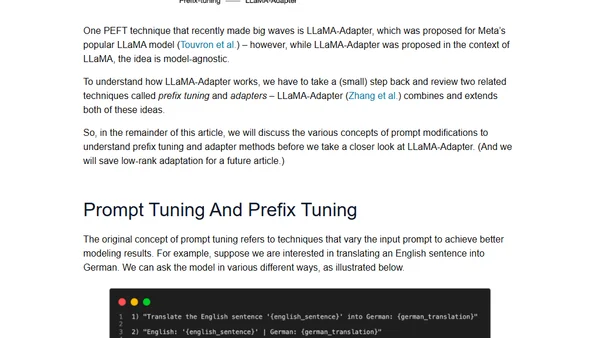

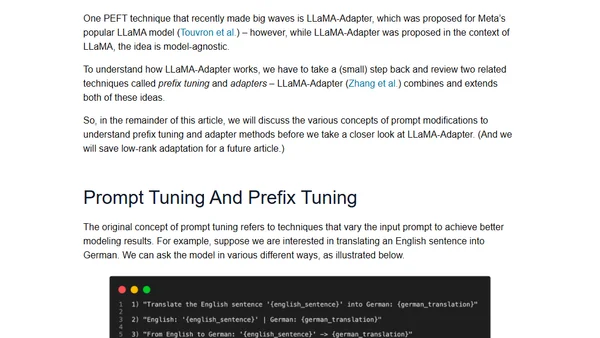

Explains parameter-efficient finetuning methods for large language models, covering techniques like prefix tuning and LLaMA-Adapters.
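Prefix tuning, one of the methods mentioned, keeps the pretrained weights frozen and learns a few extra key/value vectors that every token can attend to. The pure-Python attention below is a minimal sketch of that idea for a single head at a single layer, with made-up numbers and hypothetical function names. It is not the post's code, and it does not cover LLaMA-Adapter's zero-initialized gating.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query over lists of keys/values."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def prefix_attention(query, keys, values, prefix_keys, prefix_values):
    """Prefix tuning: prepend trainable key/value vectors at this layer.

    `keys` and `values` come from the frozen model and are not modified;
    only `prefix_keys` and `prefix_values` would receive gradient updates.
    """
    return attention(query, prefix_keys + keys, prefix_values + values)


# Two real tokens with 2-d keys/values (made-up numbers).
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
query = [1.0, 0.0]

# One prefix key/value pair (also made up; in training these are learned).
prefix_keys = [[2.0, 0.0]]
prefix_values = [[0.0, 5.0]]

print("without prefix:", attention(query, keys, values))
print("with prefix:   ", prefix_attention(query, keys, values, prefix_keys, prefix_values))
```

Because the prefix only adds entries to the attention, the number of trainable parameters is the prefix length times the key/value size per layer, independent of the model's weight matrices.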

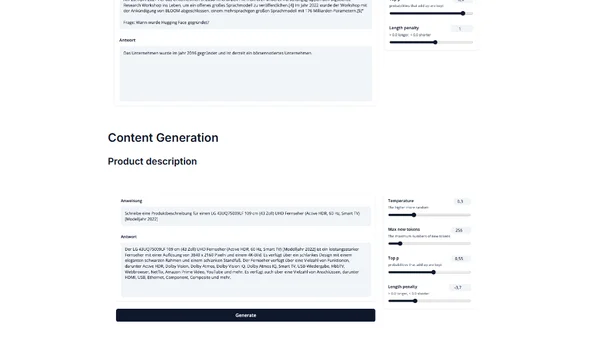

Introduces IGEL, an instruction-tuned German large language model based on BLOOM, for NLP tasks like translation and QA.

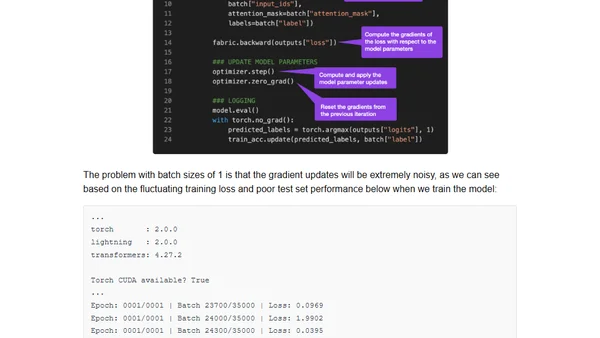

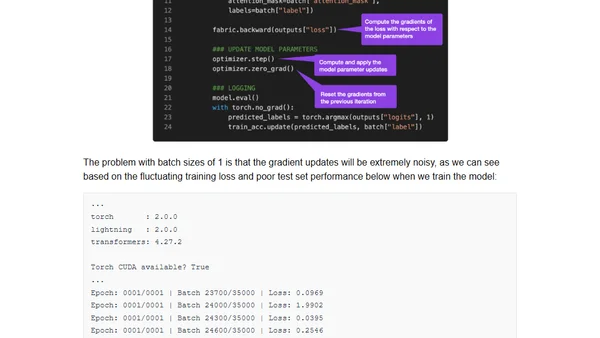

A guide to finetuning large language models like BLOOM on a single GPU using gradient accumulation to overcome memory limits.
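Gradient accumulation trades time for memory: the model sees one small micro-batch at a time, the averaged gradients are summed, and the optimizer steps once per full batch. The sketch below does not reproduce the post's BLOOM training code; it assumes a one-parameter linear model and plain SGD so that the equivalence with a single large-batch step can be checked directly. All function names are hypothetical.

```python
def grad_mse(w, batch):
    """Gradient of mean squared error for the one-parameter model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def sgd_step_full_batch(w, batch, lr):
    """One SGD step computed on the whole batch at once."""
    return w - lr * grad_mse(w, batch)


def sgd_step_accumulated(w, batch, lr, micro_batch_size):
    """The same step, computed one micro-batch at a time.

    Only one micro-batch is processed per iteration; the gradients are
    averaged across micro-batches and the weight is updated once at the end.
    """
    if len(batch) % micro_batch_size != 0:
        raise ValueError("batch size must be a multiple of micro_batch_size")
    accumulation_steps = len(batch) // micro_batch_size
    accumulated = 0.0
    for step in range(accumulation_steps):
        start = step * micro_batch_size
        micro_batch = batch[start:start + micro_batch_size]
        # Divide by the number of steps so the sum becomes an average.
        accumulated += grad_mse(w, micro_batch) / accumulation_steps
    return w - lr * accumulated


data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
print(sgd_step_full_batch(0.0, data, lr=0.01))
print(sgd_step_accumulated(0.0, data, lr=0.01, micro_batch_size=2))
```

In a PyTorch loop the same pattern appears as dividing each micro-batch loss by the number of accumulation steps and calling `loss.backward()` (gradients add up in `.grad`), then calling `optimizer.step()` and `optimizer.zero_grad()` only every N micro-batches.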

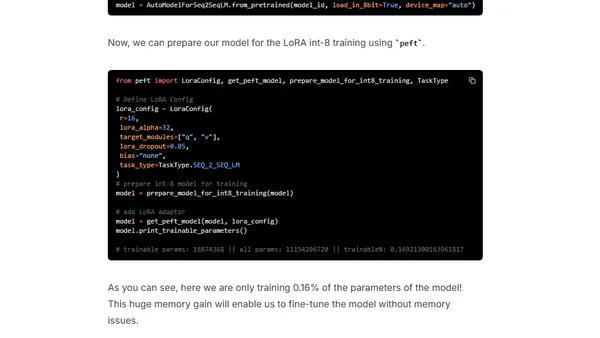

A technical guide on fine-tuning the large FLAN-T5 XXL model efficiently using LoRA and Hugging Face libraries on a single GPU.
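LoRA freezes a pretrained weight matrix W and learns a low-rank update B·A, scaled by alpha/rank, which is why a model as large as FLAN-T5 XXL can be tuned on one GPU. In practice Hugging Face's PEFT library provides this; the pure-Python class below is a hypothetical illustration of the math only, not PEFT's API, and it assumes the common initialization of a small random A and an all-zero B.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class LoRALinear:
    """A frozen weight W plus a trainable low-rank update scaled B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # frozen pretrained weight, d_out x d_in
        # A starts small and random, B starts at zero, so the adapted layer
        # initially behaves exactly like the frozen one.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scaling = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.b, matvec(self.a, x))
        return [h + self.scaling * u for h, u in zip(base, update)]

    def merged_weight(self):
        """Fold the update into W (W + scaling * B @ A) for inference."""
        rank = len(self.a)
        return [
            [
                w + self.scaling * sum(self.b[i][r] * self.a[r][j] for r in range(rank))
                for j, w in enumerate(row)
            ]
            for i, row in enumerate(self.weight)
        ]


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters in A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# A 1024 x 1024 layer: full fine-tuning vs. a rank-8 adapter.
print("full:", 1024 * 1024, "LoRA r=8:", lora_param_count(1024, 1024, 8))
# prints "full: 1048576 LoRA r=8: 16384"
```

For that layer the adapter trains 16,384 of 1,048,576 values (about 1.6%), and `merged_weight` shows why LoRA adds no inference latency once the update is folded back into W.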

Guide to fine-tuning the large FLAN-T5 XXL model using Amazon SageMaker managed training and DeepSpeed for optimization.