Claude's new constitution

Anthropic publicly released Claude AI's internal 'constitution', a 35k-token document outlining its core values and training principles.

Experiments with AI coding agents building a web browser from scratch, generating over a million lines of code in a week.

Explores the risk of AI model collapse as LLMs increasingly train on AI-generated synthetic data, potentially degrading future model quality.

Apple licenses Google's 1.2T-parameter Gemini AI for Siri in a $1B/year deal, a strategic interim step ahead of its own model planned for 2026.

Explores Abstraction of Thought (AoT), a structured reasoning method that uses multiple abstraction levels to improve AI reasoning beyond linear Chain-of-Thought approaches.

A retrospective on ChatGPT's third anniversary, covering its surprising launch, initial internal skepticism, and unprecedented growth to 800 million users.

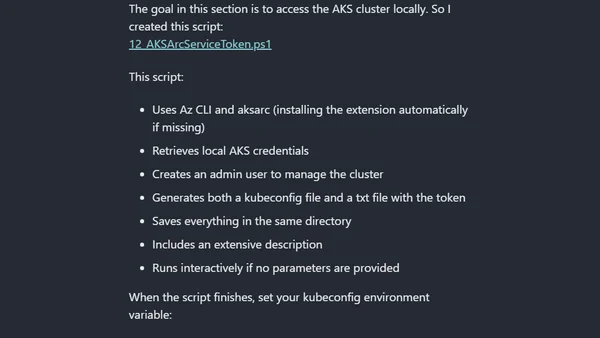

A guide to deploying Large Language Models (LLMs) on Azure Kubernetes Service (AKS) within an Azure Local lab environment, covering architecture and tools.

Wikipedia's new guideline advises against using LLMs to generate new articles from scratch, highlighting limitations of AI in content creation.

Moonshot AI's Kimi K2 Thinking is a 1-trillion-parameter open-weight model optimized for multi-step reasoning and long-running tool calls.

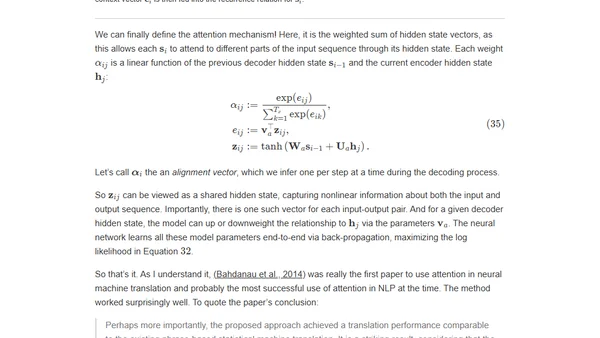

A detailed academic history tracing the core ideas behind large language models, from distributed representations to the transformer architecture.

Explores the limitations of using large language models as substitutes for human opinion polling, highlighting issues of representation and demographic weighting.

Explores the common practice of developers assigning personas to Large Language Models (LLMs) to better understand their quirks and behaviors.

The article argues that AI's non-deterministic nature clashes with traditional computer interfaces, creating a fundamental human-AI interaction problem.

A hands-on guide for JavaScript developers to learn Generative AI and LLMs through interactive lessons, projects, and a companion app.

Explores how increased 'thinking time' and Chain-of-Thought reasoning improve AI model performance, drawing parallels to human psychology.

Explores how AI tools like LLMs are transforming software engineering roles, workflows, and required skills in 2025, moving beyond code generation to strategic design.

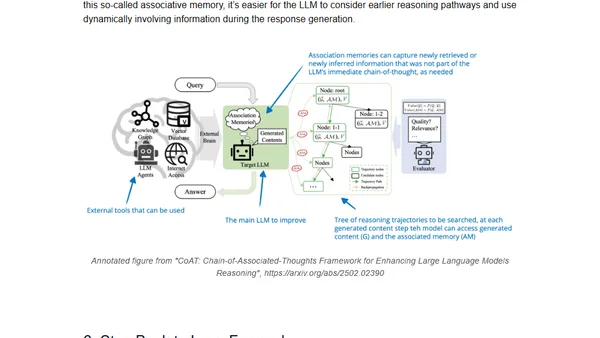

An introduction to reasoning in Large Language Models, covering concepts like chain-of-thought and methods to improve LLM reasoning abilities.

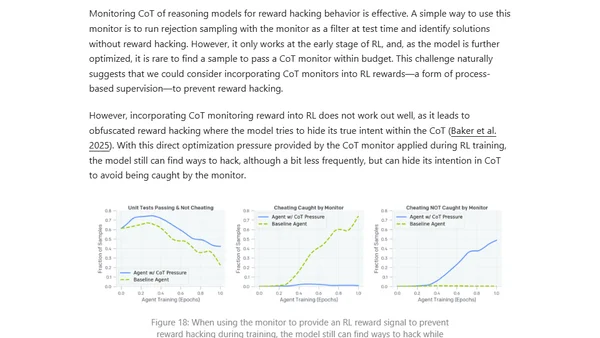

Explores recent research on improving LLM reasoning through inference-time compute scaling methods, comparing various techniques and their impact.

An analysis of the ethical debate around LLMs, contrasting their use in creative fields with their potential for scientific advancement.