Efficient Large Language Model training with LoRA and Hugging Face

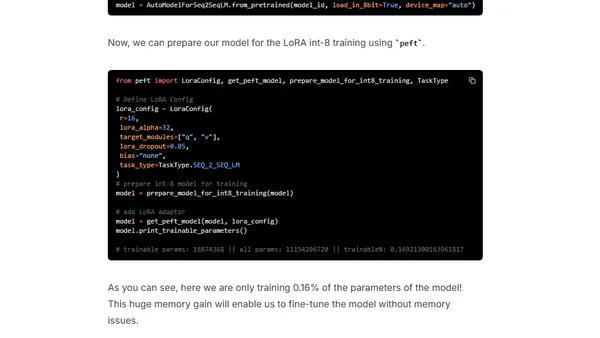

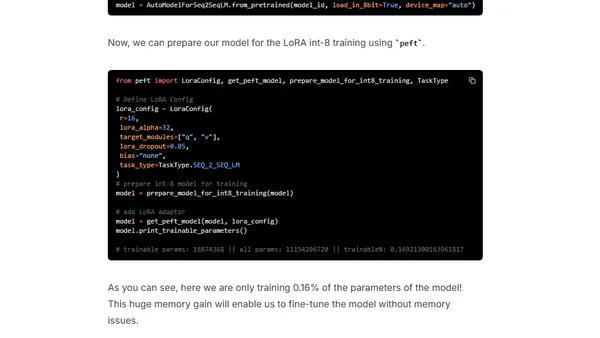

A technical guide on fine-tuning the large FLAN-T5 XXL model efficiently using LoRA and Hugging Face libraries on a single GPU.

A guide to fine-tuning the large FLAN-T5 XXL model using Amazon SageMaker managed training, with DeepSpeed for optimization.

A technical guide on fine-tuning large FLAN-T5 models (XL/XXL) using DeepSpeed ZeRO and Hugging Face Transformers for efficient multi-GPU training.

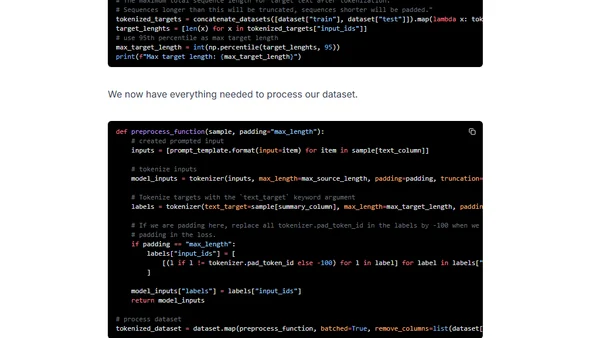

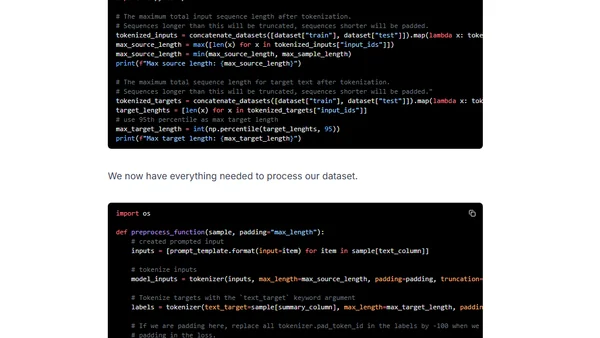

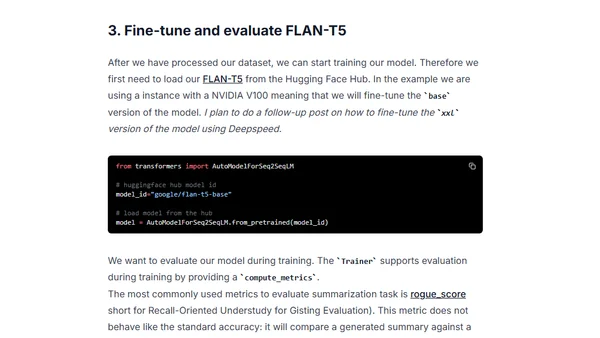

A tutorial on fine-tuning Google's FLAN-T5 model for chat and dialogue summarization using the samsum dataset and Hugging Face Transformers.