Deploy Llama 2 70B on AWS Inferentia2 with Hugging Face Optimum

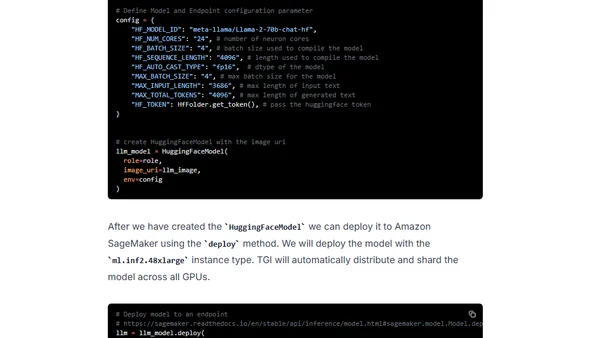

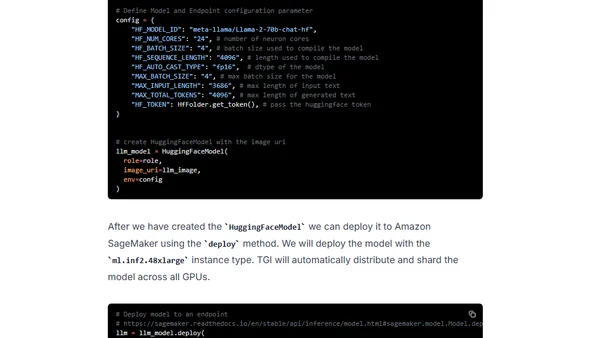

A technical guide on deploying Meta's Llama 2 70B large language model on AWS Inferentia2 hardware using Hugging Face Optimum and SageMaker.

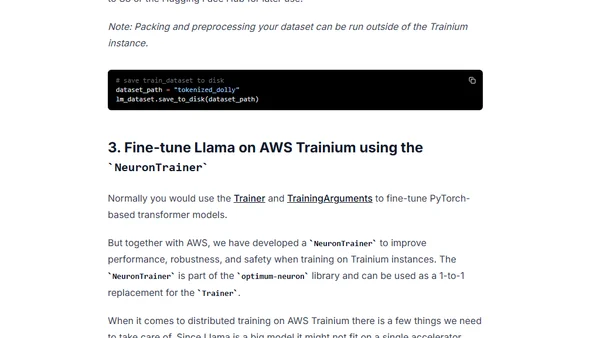

A technical tutorial on fine-tuning the Llama 2 7B large language model using AWS Trainium instances and Hugging Face libraries.

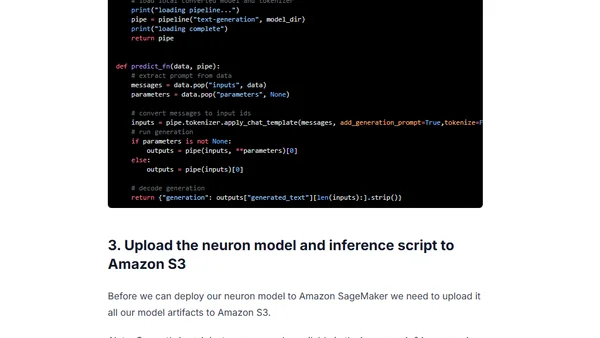

A tutorial on deploying Meta's Llama 2 7B model on AWS Inferentia2 using Amazon SageMaker and the optimum-neuron library.

A benchmark analysis of deploying Meta's Llama 2 models on Amazon SageMaker using Hugging Face's LLM Inference Container, evaluating cost, latency, and throughput.

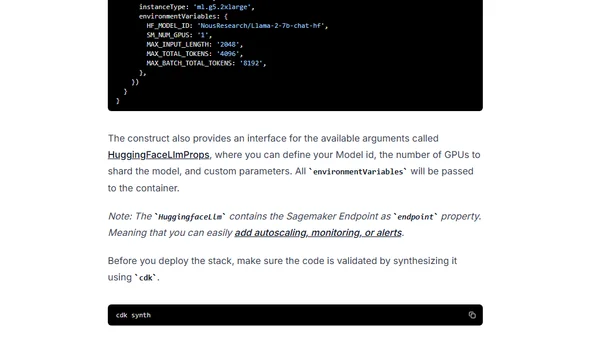

A technical guide on deploying open-source LLMs like Llama 2 using Infrastructure as Code with AWS CDK and the Hugging Face LLM construct.

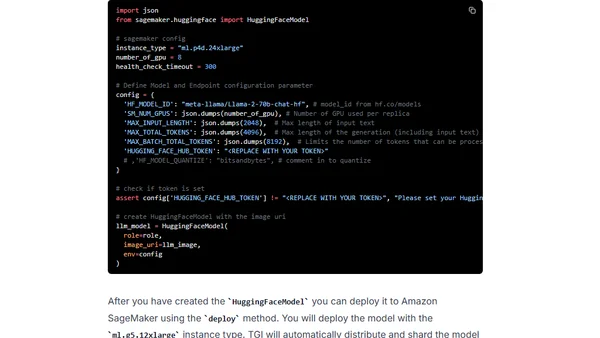

A technical guide on deploying Meta's Llama 2 large language models (7B, 13B, 70B) on Amazon SageMaker using the Hugging Face LLM DLC.

A technical guide on instruction-tuning Meta's Llama 2 model to generate instructions from inputs, enabling personalized LLM applications.

A comprehensive guide to Meta's Llama 2 open-source language model, covering resources, playgrounds, benchmarks, and technical details.

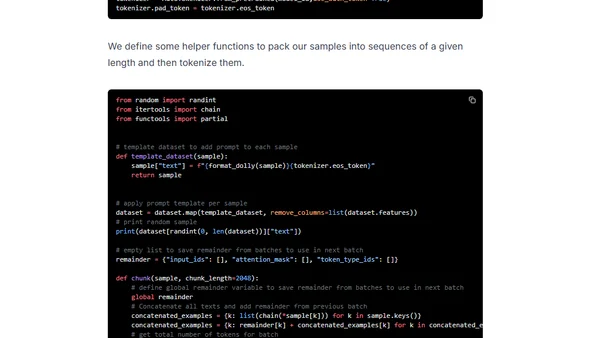

A technical guide on fine-tuning Llama 2 models (7B to 70B) using QLoRA and PEFT on Amazon SageMaker for efficient large language model adaptation.