State of AI 2026 with Sebastian Raschka, Nathan Lambert, and Lex Fridman

A 4.5-hour interview discussing the state of AI in 2026, covering LLMs, geopolitics, training, open vs. closed models, AGI timelines, and industry implications.

SebastianRaschka.com is the personal blog of Sebastian Raschka, PhD, an LLM research engineer whose work bridges academia and industry in AI and machine learning. On his blog and in its notes section, he publishes in-depth, well-documented articles on topics such as large language models (LLMs), reasoning models, machine learning in Python, neural networks, data science workflows, and deep learning architectures. Recent posts explore advanced themes like “reasoning LLMs”, comparisons of modern open-weight transformer architectures, and guides for building, training, or analyzing neural networks and model internals.

103 articles from this blog

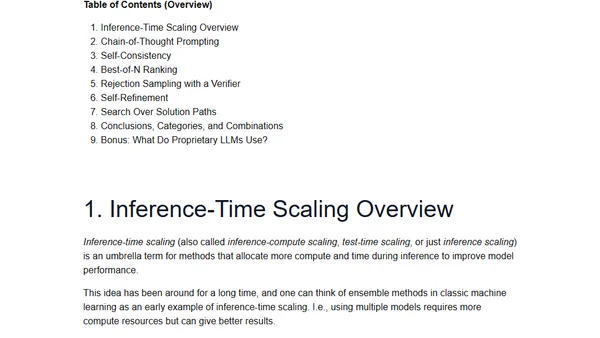

An overview of inference-time scaling methods for improving LLM reasoning, categorizing techniques and highlighting recent research.

A 2025 year-in-review analysis of large language models (LLMs), covering key developments in reasoning, architecture, costs, and predictions for 2026.

A curated list of notable LLM research papers from the second half of 2025, categorized by topics like reasoning, training, and multimodal models.

A timeline of beginner-friendly 'Hello World' examples in machine learning and AI, from Random Forests in 2013 to modern RLVR models in 2025.

A technical analysis of the DeepSeek model series, from V3 to the latest V3.2, covering architecture, performance, and release timeline.

The author's method for reading technical books effectively, including multiple read-throughs, coding along, and doing exercises.

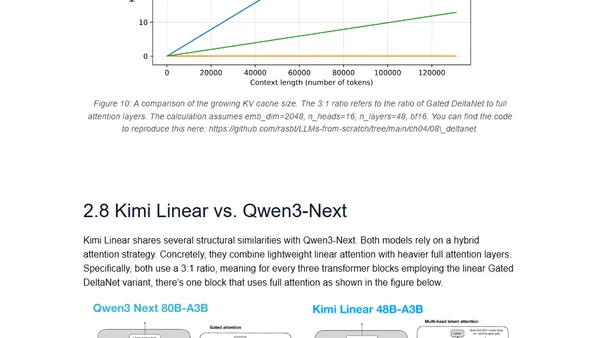

An overview of alternative LLM architectures beyond standard transformers, including linear attention hybrids, text diffusion models, and code world models.

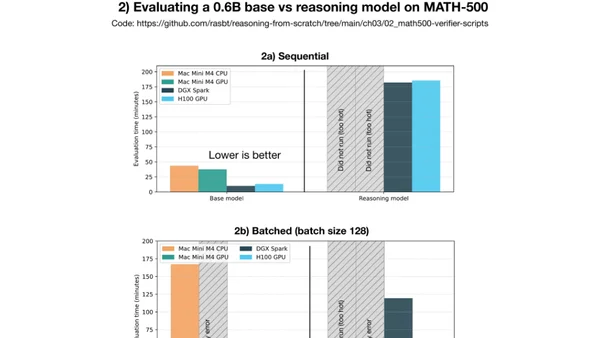

Compares DGX Spark and Mac Mini for local PyTorch development, focusing on LLM inference and fine-tuning performance benchmarks.

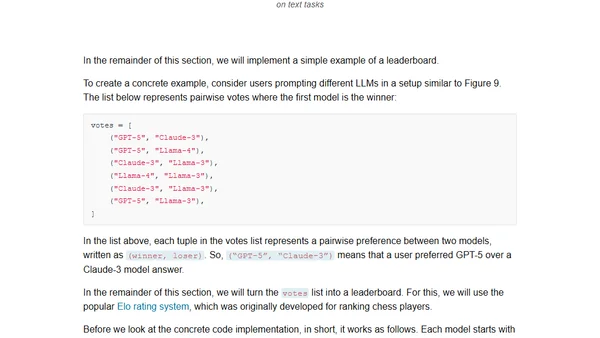

A guide to the four main methods for evaluating Large Language Models, including code examples and practical implementation details.

A hands-on guide to understanding and implementing the Qwen3 large language model architecture from scratch using pure PyTorch.

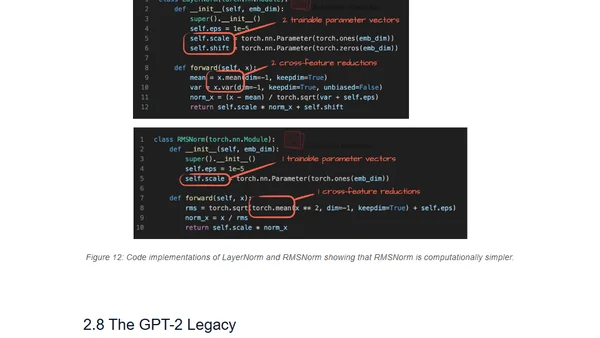

Analysis of OpenAI's new gpt-oss models, comparing architectural improvements from GPT-2 and examining optimizations like MXFP4 and Mixture-of-Experts.

A detailed comparison of architectural developments in major large language models (LLMs) released in 2024-2025, focusing on structural changes beyond benchmarks.

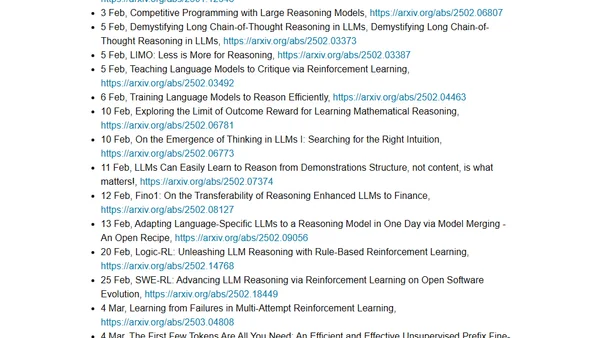

A curated list of key LLM research papers from January to June 2025, organized by topic, including reasoning models, RL methods, and efficient training.

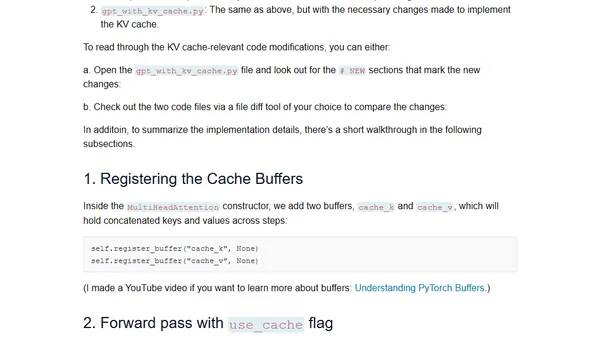

Explains the KV cache technique for efficient LLM inference with a from-scratch code implementation.

A course teaching how to code Large Language Models (LLMs) from scratch to deeply understand their inner workings and fundamentals.

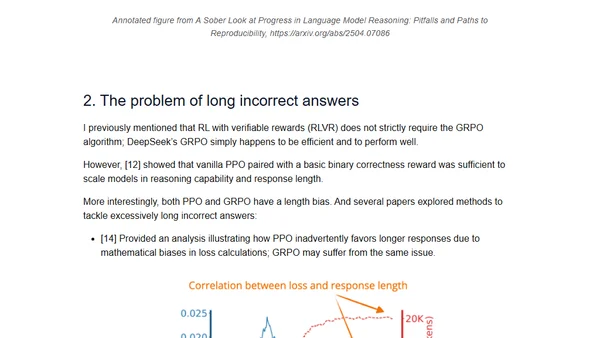

Analyzes the use of reinforcement learning to enhance reasoning capabilities in large language models (LLMs) like GPT-4.5 and o3.

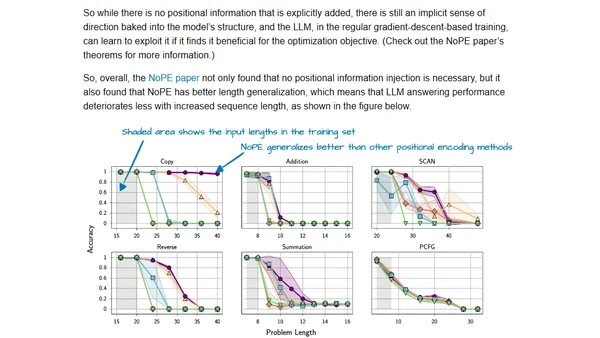

An introduction to reasoning in Large Language Models, covering concepts like chain-of-thought and methods to improve LLM reasoning abilities.

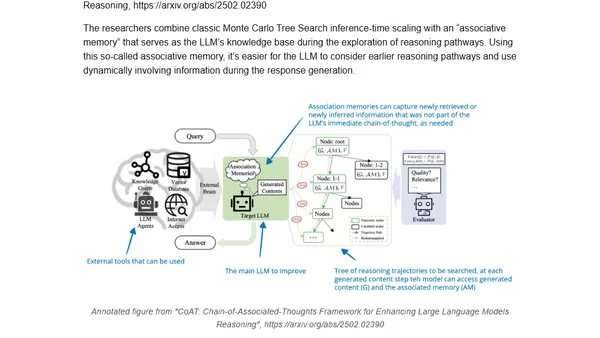

Explores inference-time compute scaling methods to enhance the reasoning capabilities of large language models (LLMs) for complex problem-solving.

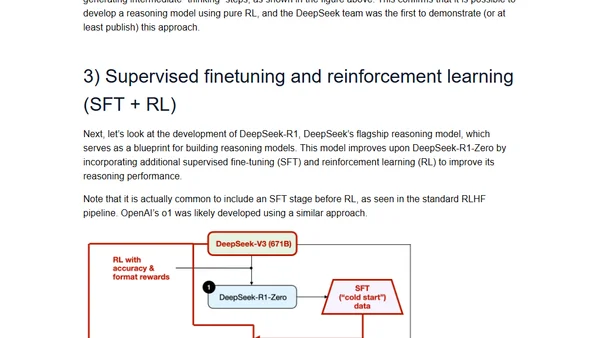

Explores four main approaches to building and enhancing reasoning capabilities in Large Language Models (LLMs) for complex tasks.