Finetuning Large Language Models On A Single GPU Using Gradient Accumulation

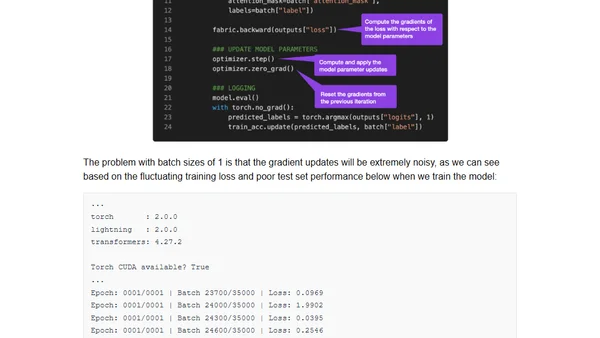

This technical tutorial explains how to finetune a large language model (specifically BLOOM-560M) for text classification on a single GPU. It covers gradient accumulation as a workaround for GPU memory limits: gradients from several small batches are summed before each optimizer update, so training behaves as if it used a larger batch than fits in memory. The article includes practical code examples using PyTorch, Lightning, and Hugging Face Transformers.
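The core arithmetic of gradient accumulation can be shown without any framework. The sketch below is a hypothetical illustration, not the article's code. It assumes a toy linear model `y_hat = w * x + b` trained with mean squared error, and the helper names `mse_grad`, `accumulated_grad`, and `train` are made up for this example. Each batch is split into `accumulation_steps` equal micro-batches. Each micro-batch gradient is scaled by `1 / accumulation_steps` and added to a running total, and the parameters are updated once per batch. Because the micro-batches are the same size, the accumulated gradient equals the full-batch gradient, even though only one micro-batch is processed at a time.

```python
from typing import List, Tuple

Batch = List[Tuple[float, float]]  # (x, y) pairs


def mse_grad(w: float, b: float, batch: Batch) -> Tuple[float, float]:
    """Gradient of the mean squared error of y_hat = w*x + b over one batch."""
    n = len(batch)
    gw = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    gb = sum(2 * (w * x + b - y) for x, y in batch) / n
    return gw, gb


def accumulated_grad(w: float, b: float, batch: Batch,
                     accumulation_steps: int) -> Tuple[float, float]:
    """Sum scaled gradients over equal-sized micro-batches (hypothetical helper)."""
    if len(batch) % accumulation_steps != 0:
        raise ValueError("batch size must be divisible by accumulation_steps")
    size = len(batch) // accumulation_steps
    gw_total = gb_total = 0.0
    for i in range(accumulation_steps):
        micro = batch[i * size:(i + 1) * size]
        gw, gb = mse_grad(w, b, micro)
        # Scale each micro-batch gradient so the sum is a mean over the full batch.
        gw_total += gw / accumulation_steps
        gb_total += gb / accumulation_steps
    return gw_total, gb_total


def train(data: Batch, lr: float, epochs: int, batch_size: int,
          accumulation_steps: int) -> Tuple[float, float]:
    """Plain gradient descent with one parameter update per (accumulated) batch."""
    w = b = 0.0
    for _ in range(epochs):
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            gw, gb = accumulated_grad(w, b, batch, accumulation_steps)
            w -= lr * gw  # single update after all micro-batches
            b -= lr * gb
    return w, b


# Usage: fit y = 2x + 1 with an effective batch of 8 processed as 4 micro-batches of 2.
data = [(i / 8, 2 * (i / 8) + 1) for i in range(8)]
w, b = train(data, lr=0.5, epochs=2000, batch_size=8, accumulation_steps=4)
```

In PyTorch, the same pattern corresponds to dividing each micro-batch loss by the number of accumulation steps, calling `loss.backward()` on every micro-batch (PyTorch adds the results into each parameter's `.grad`), and calling `optimizer.step()` and `optimizer.zero_grad()` only once per accumulation window. Lightning exposes this behavior through the `accumulate_grad_batches` argument of its `Trainer`.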


