Product Evals in Three Simple Steps

A guide to building product evaluations for LLMs using three steps: labeling data, aligning evaluators, and running experiments.

Eugene Yan is a Principal Applied Scientist at Amazon, building AI-powered recommendation systems and experiences. He shares insights on RecSys, LLMs, and applied machine learning, while mentoring and investing in ML startups.

185 articles from this blog

A principal engineer shares advice for new principal tech ICs, covering role definition, shifting responsibilities, and the importance of influence and communication.

Explores training a hybrid LLM-recommender system using Semantic IDs for steerable, explainable recommendations.

Explores challenges and methods for evaluating question-answering AI systems that process long documents such as technical manuals or novels.

A presentation on using Large Language Model (LLM) techniques to enhance Recommendation Systems (RecSys) and Search, from the AI Engineer World's Fair 2025.

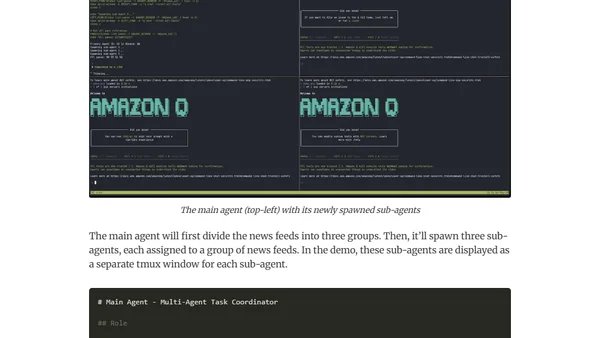

A technical guide on building automated news agents using MCP, Amazon Q CLI, and tmux to generate daily news recaps from RSS feeds.

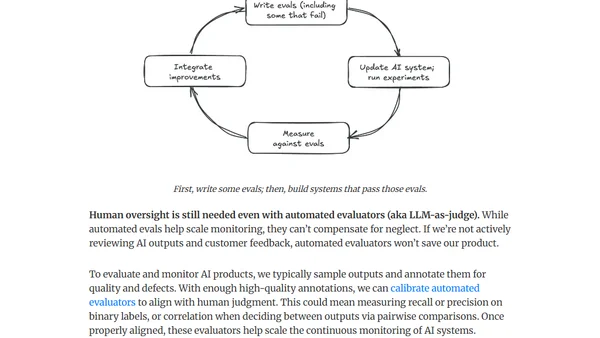

Argues that effective AI product evaluation requires a scientific, process-driven approach, not just adding LLM-as-judge tools.

Summary of a panel discussion at NVIDIA GTC 2025 on insights and lessons learned from building real-world LLM-powered applications.

Explores how large language models (LLMs) are transforming industrial recommendation systems and search, covering hybrid architectures, data generation, and unified frameworks.

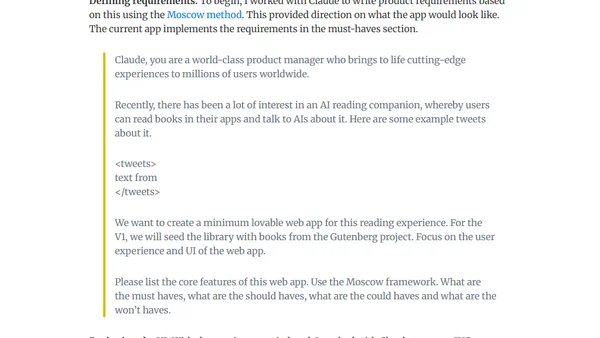

A developer builds an AI-powered reading companion called Dewey, detailing its features, design, and technical implementation.

A guide on starting and running a weekly paper club for learning about AI/ML research papers and building a technical community.

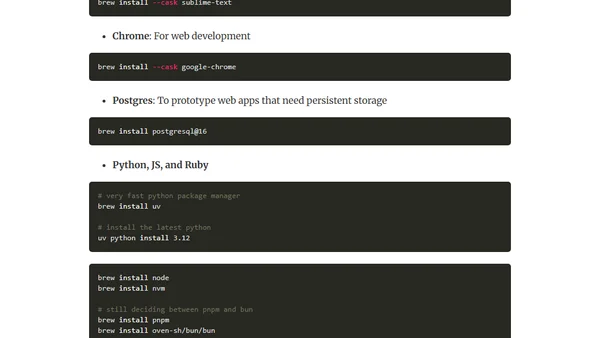

A guide to setting up a new MacBook Pro for development with minimal tools, including OS tweaks, terminal setup, and essential software.

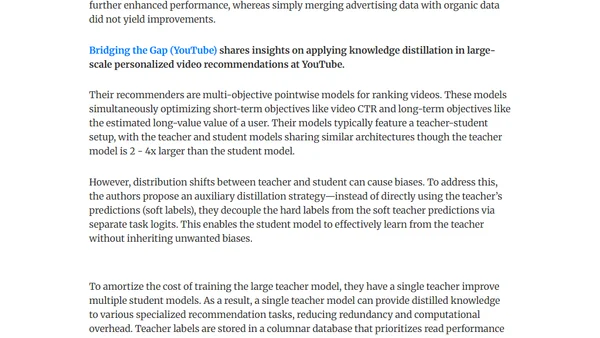

Key lessons from 2024 ML conferences on building effective machine learning systems, covering reward functions, trade-offs, and practical engineering advice.

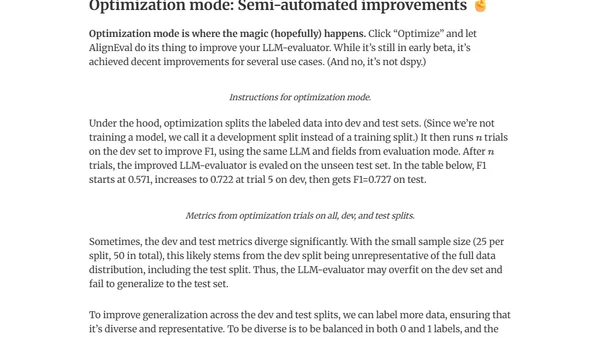

Introduces AlignEval, an app for building and automating LLM evaluators, making the process easier and more data-driven.

The author judges a Weights & Biases hackathon focused on building LLM evaluation tools, discussing key considerations and project highlights.

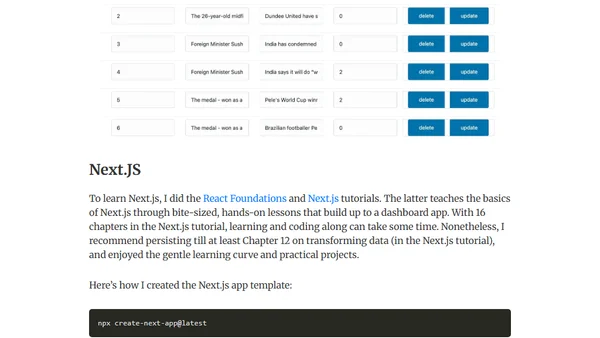

A developer builds a simple CRUD web app with FastAPI, FastHTML, Next.js, and SvelteKit to compare their features and developer experience.

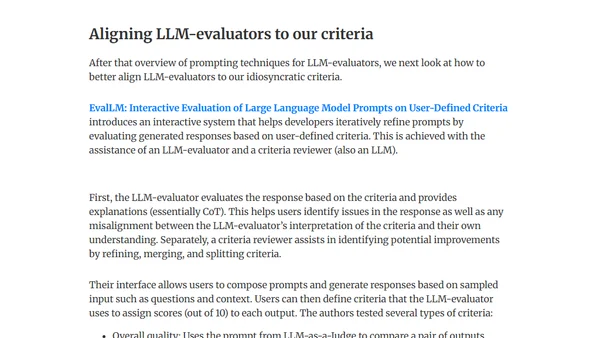

A survey of using LLMs as evaluators (LLM-as-Judge) for assessing AI model outputs, covering techniques, use cases, and critiques.

A guide to designing a reliable and valid interview process for hiring machine learning and AI engineers, covering technical skills, data literacy, and interview structure.

Reflections on delivering the closing keynote at the AI Engineer World's Fair 2024, sharing lessons from a year of building with LLMs.

A summary of a talk on applying Large Language Models (LLMs) to build and deploy recommendation systems at scale, presented at Netflix's PRS workshop.