Introducing the Hugging Face LLM Inference Container for Amazon SageMaker

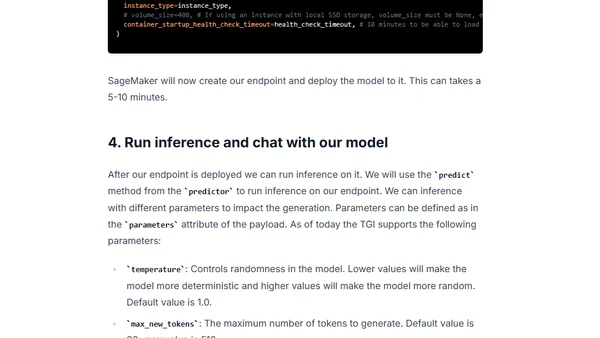

This technical tutorial introduces the Hugging Face LLM Inference Container for Amazon SageMaker, powered by Text Generation Inference (TGI). It provides a step-by-step guide to deploying models such as the 12B Pythia Open Assistant model, covering environment setup, deployment, inference, and building a Gradio chatbot. It also details the container's optimizations and its supported model architectures.
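The deployment and inference steps come down to two pieces of configuration: the environment variables handed to the TGI container and the JSON payload sent to the endpoint. The sketch below builds both in plain Python so it runs without AWS credentials. It is illustrative, not the tutorial's code: the helpers `build_tgi_env` and `build_payload` are hypothetical, and the model id, the environment variable names (`HF_MODEL_ID`, `SM_NUM_GPUS`, `MAX_INPUT_LENGTH`, `MAX_TOTAL_TOKENS`), the `<|prompter|>`/`<|assistant|>` prompt format, and the sampling values are assumptions to check against the original article and the container documentation.

```python
import json

# Assumed Hugging Face Hub id for the 12B Pythia Open Assistant model (verify against the tutorial).
MODEL_ID = "OpenAssistant/pythia-12b-sft-v4-5000-steps"


def build_tgi_env(model_id: str, num_gpus: int,
                  max_input_length: int = 1024, max_total_tokens: int = 2048) -> dict:
    """Hypothetical helper: container environment variables for a TGI endpoint.

    Environment values must be strings, so numeric settings are JSON-encoded.
    The variable names are assumptions based on the container's conventions.
    """
    return {
        "HF_MODEL_ID": model_id,                          # model to pull from the Hub
        "SM_NUM_GPUS": json.dumps(num_gpus),              # GPUs to shard the model across
        "MAX_INPUT_LENGTH": json.dumps(max_input_length),  # max prompt tokens
        "MAX_TOTAL_TOKENS": json.dumps(max_total_tokens),  # prompt + generated tokens
    }


def build_payload(question: str, max_new_tokens: int = 256) -> dict:
    """Hypothetical helper: wrap a question in the (assumed) Open Assistant prompt format."""
    prompt = f"<|prompter|>{question}<|endoftext|><|assistant|>"
    return {
        "inputs": prompt,
        "parameters": {
            "do_sample": True,
            "top_p": 0.7,
            "temperature": 0.7,
            "max_new_tokens": max_new_tokens,
            "stop": ["<|endoftext|>"],  # stop generating at the end-of-turn token
        },
    }


env = build_tgi_env(MODEL_ID, num_gpus=4)
payload = build_payload("What is Amazon SageMaker?")
print(env["SM_NUM_GPUS"])  # prints 4
print(payload["inputs"])
```

With the SageMaker Python SDK, a dictionary like `env` would typically be passed as the `env` argument of `HuggingFaceModel` (together with an `image_uri` from `get_huggingface_llm_image_uri("huggingface")` and an IAM `role`), and a payload like this one would be sent with `predictor.predict(payload)` after `deploy(...)`. That step is not executed here because it needs an AWS account and a GPU instance; instance type and GPU count should follow the tutorial.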
