Deep Learning is Powerful Because It Makes Hard Things Easy - Reflections 10 Years On

A reflection on a decade-old blog post about deep learning, examining past predictions on architecture, scaling, and the field's evolution.

A curated collection of articles on software architecture, AI tools, code quality, and developer psychology, exploring foundational concepts and modern challenges.

A tutorial on building a transformer-based language model in R from scratch, covering tokenization, self-attention, and text generation.

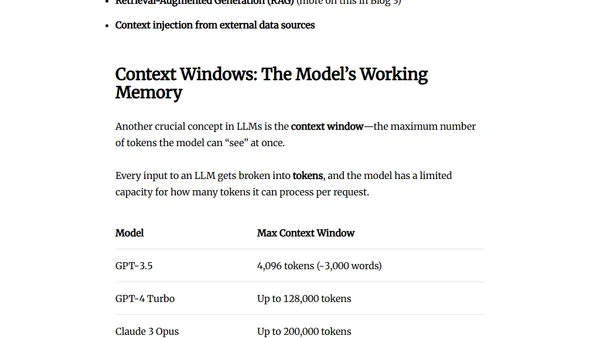

Explains how LLMs work by converting words to numerical embeddings, using vector spaces for semantic understanding, and managing context windows.

Introducing ModernBERT, a new family of state-of-the-art encoder models designed as a faster, more efficient replacement for the widely-used BERT.

The article explores how the writing process of AI models can inspire humans to overcome writer's block by adopting a less perfectionist approach.

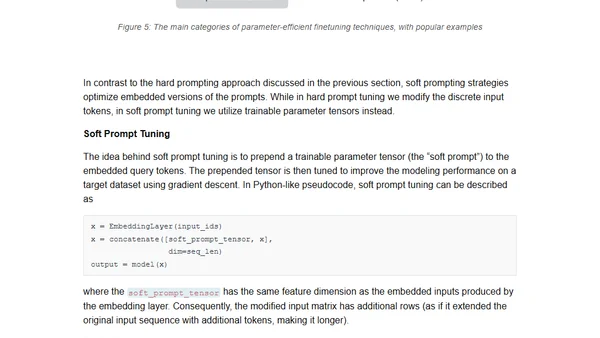

Explores methods for using and fine-tuning pretrained large language models, including feature-based approaches and parameter updates.

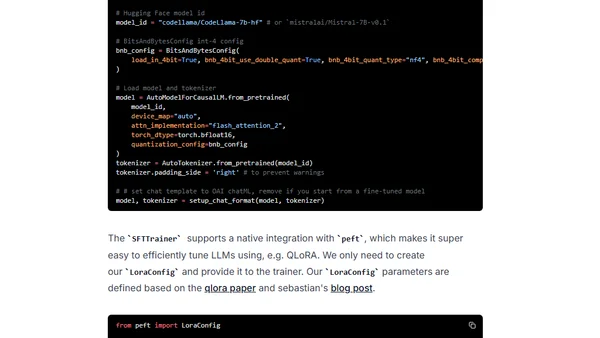

A practical guide to fine-tuning open-source large language models (LLMs) using Hugging Face's TRL and Transformers libraries in 2024.

A beginner-friendly guide to using HuggingFace's Transformers and Diffusers libraries for practical AI applications, including image generation.

Explains AI transformers, tokens, and embeddings using a simple LEGO analogy to demystify how language models process and understand text.

A guide to 9 PyTorch techniques for drastically reducing memory usage when training vision transformers and LLMs, enabling training on consumer hardware.

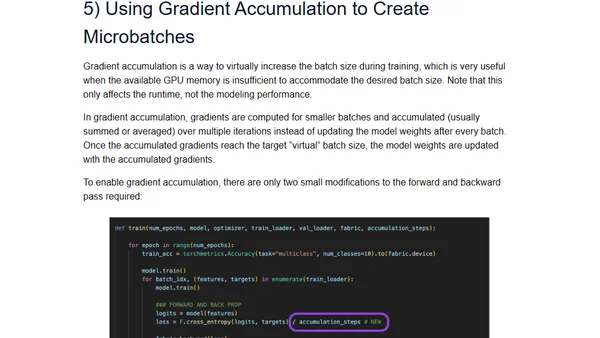

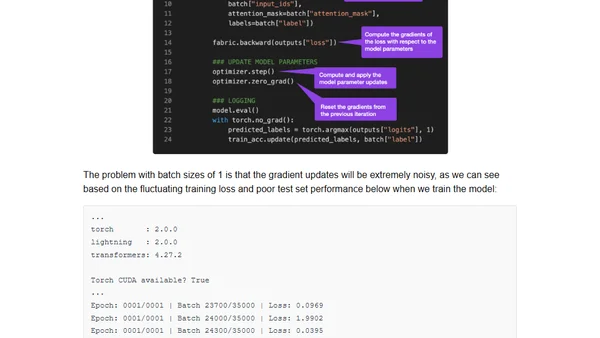

A guide to fine-tuning large language models on a single GPU, using gradient accumulation to overcome memory limitations.

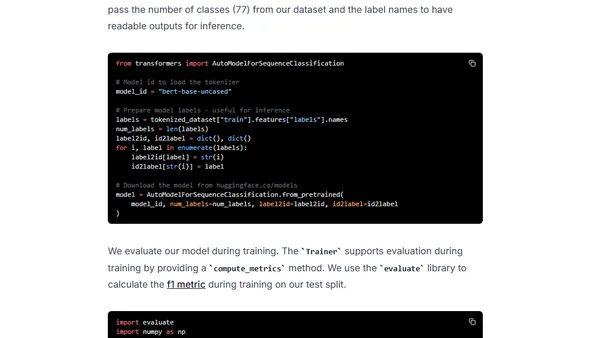

A tutorial on fine-tuning a BERT model for text classification using the new PyTorch 2.0 framework and the Hugging Face Transformers library.

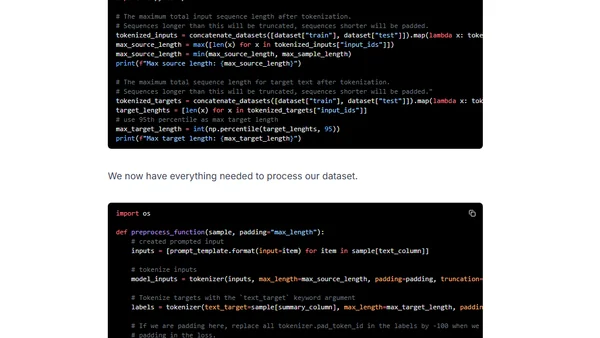

A technical guide on fine-tuning large FLAN-T5 models (XL/XXL) using DeepSpeed ZeRO and Hugging Face Transformers for efficient multi-GPU training.

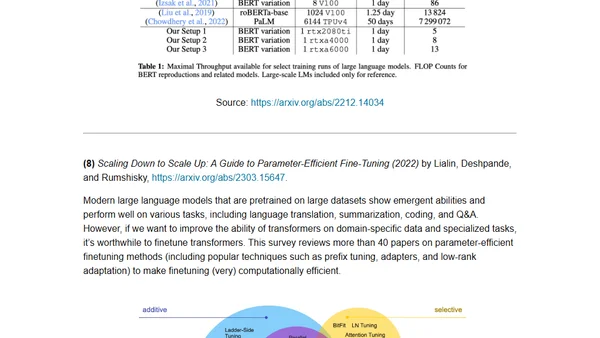

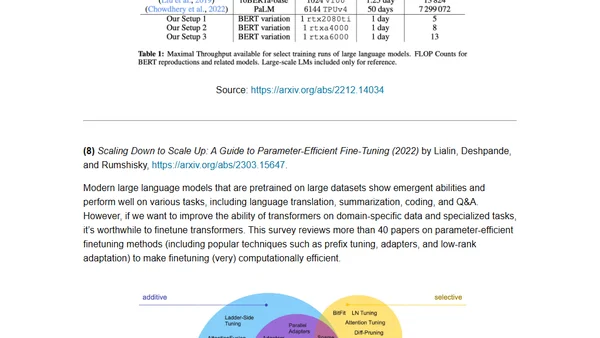

A curated reading list of key academic papers for understanding the development and architecture of large language models and transformers.

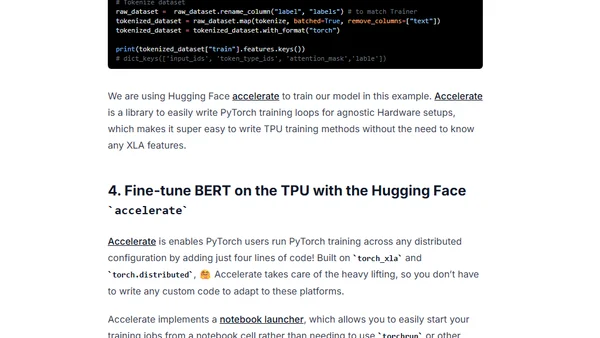

A tutorial on fine-tuning a BERT model for text classification using Hugging Face Transformers and Google Cloud TPUs with PyTorch.

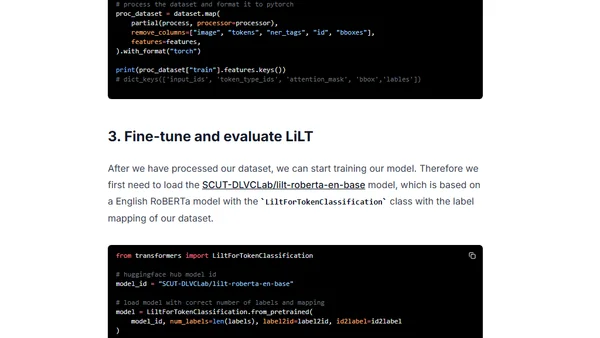

A tutorial on fine-tuning the LiLT model for language-agnostic document understanding and information extraction using Hugging Face Transformers.

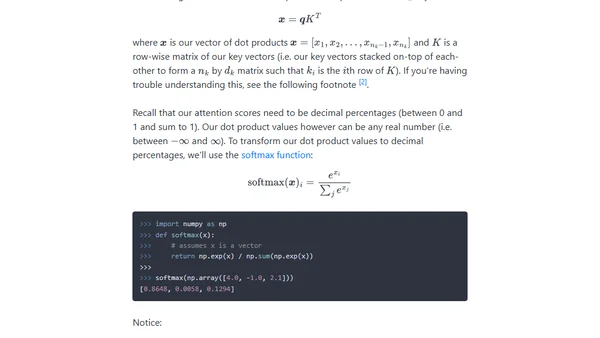

A technical explanation of the attention mechanism in transformers, building intuition from key-value lookups to the scaled dot product equation.

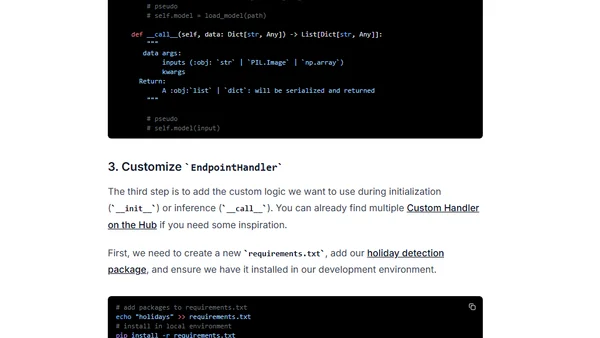

A tutorial on creating custom inference handlers for Hugging Face Inference Endpoints to add business logic and dependencies.