Finetune Granite3.1 for Reasoning

A technical guide on fine-tuning IBM's Granite 3.1 model using Group Relative Policy Optimization (GRPO) to enhance its reasoning capabilities.
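GRPO, introduced in the DeepSeekMath paper, drops PPO's learned value model. Instead, it scores each sampled completion against the other completions drawn for the same prompt. The plain-Python sketch below shows only that group-relative advantage step. It is not the guide's training code, and the reward values are hypothetical. Using the population standard deviation plus a small `eps` is also an assumption, since implementations differ on this detail.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-4):
    """Normalize each completion's reward against its own group (same prompt)."""
    mean = statistics.fmean(rewards)
    # Population std (assumption); some implementations use the sample std instead
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four completions sampled for one prompt,
# e.g. 1.0 if the final answer is correct, else 0.0
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

If every completion in a group earns the same reward, all advantages are zero, and that prompt contributes no learning signal for the update.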


A summary of Chip Huyen's chapter on fine-tuning AI models, which argues that fine-tuning is a last resort after prompt engineering and retrieval-augmented generation (RAG) and details its technical and organizational complexities.

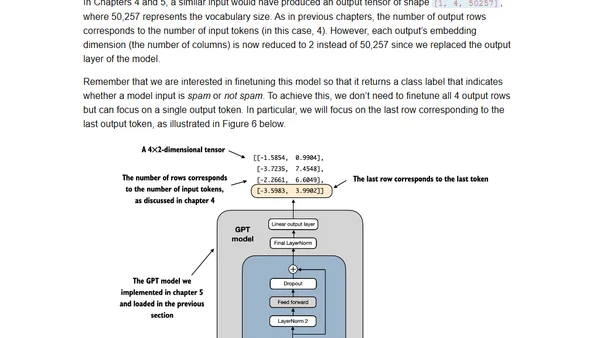

A guide to transforming pretrained LLMs into text classifiers, with insights from the author's new book on building LLMs from scratch.

A 1-hour video presentation covering the full development cycle of large language models (LLMs), from architecture and pretraining to finetuning and evaluation.

A 1-hour presentation on the LLM development cycle, covering architecture, training, finetuning, and evaluation methods.

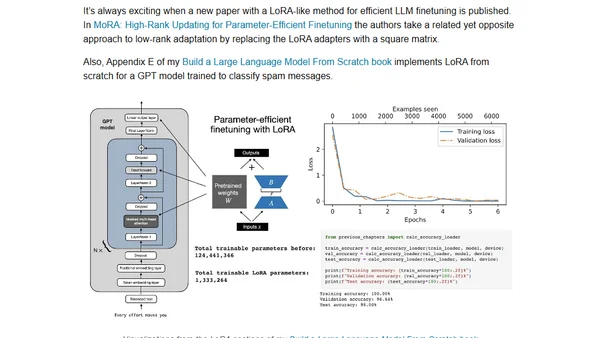

Explores new research on instruction masking and LoRA finetuning techniques for improving large language models (LLMs).
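Instruction masking means excluding the prompt (instruction) tokens from the finetuning loss, so the model is trained only to predict response tokens. The sketch below is a minimal illustration built on a few assumptions. Labels are aligned with the input IDs and shifted inside the model, which is the Hugging Face convention. `-100` marks ignored positions, matching the default `ignore_index` of PyTorch's `CrossEntropyLoss`. The token IDs and per-token losses are made up.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss

def build_labels(prompt_ids, response_ids, mask_instruction=True):
    """Concatenate prompt and response; optionally hide the prompt from the loss."""
    input_ids = list(prompt_ids) + list(response_ids)
    if mask_instruction:
        labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    else:
        labels = list(input_ids)
    return input_ids, labels

def masked_mean_loss(token_losses, labels):
    """Average per-token losses over positions whose label is not ignored."""
    kept = [loss for loss, label in zip(token_losses, labels) if label != IGNORE_INDEX]
    return sum(kept) / len(kept)

# Hypothetical token IDs for an instruction and its response
input_ids, labels = build_labels([101, 7, 8], [42, 43])
print(labels)                                                # [-100, -100, -100, 42, 43]
print(masked_mean_loss([1.0, 1.0, 1.0, 3.0, 5.0], labels))   # 4.0
```

Passing `mask_instruction=False` gives the unmasked variant, in which the loss also covers the instruction tokens.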

Explores methods for using and finetuning pretrained large language models, including feature-based approaches and parameter updates.

A summary of key AI research papers from February 2024, focusing on new open-source LLMs, small fine-tuned models, and efficient fine-tuning techniques.

A summary of February 2024 AI research, covering new open-source LLMs like OLMo and Gemma, and a study on small, fine-tuned models for text summarization.

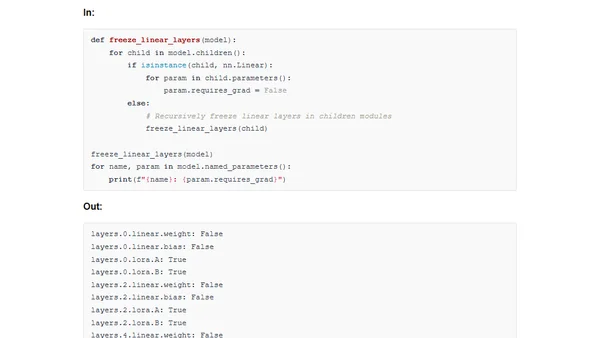

A technical guide implementing DoRA, a new low-rank adaptation method for efficient model finetuning, from scratch in PyTorch.

A guide to implementing LoRA and the new DoRA method for efficient model finetuning in PyTorch from scratch.
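LoRA learns a low-rank update ΔW = (α/r)·BA on top of a frozen weight W. DoRA goes further and splits the adapted weight into a learnable per-column magnitude vector m and a direction, W' = m · (W + ΔW) / ‖W + ΔW‖_c, where ‖·‖_c is the column-wise norm. The plain-Python sketch below only computes the merged weights, with no autograd or training. It assumes W is stored as out_features × in_features, like PyTorch's `nn.Linear.weight`. It also uses the original LoRA paper's α/r scaling, though the guides above may scale differently.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_delta(B, A, alpha, rank):
    """LoRA update (alpha / rank) * B @ A, with B: out x r and A: r x in."""
    scale = alpha / rank
    return [[scale * v for v in row] for row in matmul(B, A)]

def lora_weight(W, B, A, alpha, rank):
    """Merged LoRA weight W + delta W."""
    delta = lora_delta(B, A, alpha, rank)
    return [[w + d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

def column_norms(W):
    """L2 norm of each column (one value per input feature)."""
    return [math.sqrt(sum(row[j] ** 2 for row in W)) for j in range(len(W[0]))]

def dora_weight(W, B, A, alpha, rank, m):
    """DoRA: rescale each column of the LoRA-adapted weight to the learned magnitude m."""
    V = lora_weight(W, B, A, alpha, rank)
    norms = column_norms(V)
    return [[m[j] * row[j] / norms[j] for j in range(len(row))] for row in V]

W = [[3.0, 0.0], [4.0, 1.0]]   # frozen pretrained weight (out=2, in=2)
A = [[0.5, -0.5]]              # rank-1 LoRA factor (hypothetical values)
B = [[0.0], [0.0]]             # B starts at zero, so delta W = 0 at initialization
m = column_norms(W)            # m is initialized from the pretrained column norms
print(dora_weight(W, B, A, alpha=1.0, rank=1, m=m))  # reproduces W at initialization
```

Initializing B to zero and m to the column norms of W makes both adapters start out reproducing the pretrained weight exactly. Training then updates only A, B, and (for DoRA) m.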

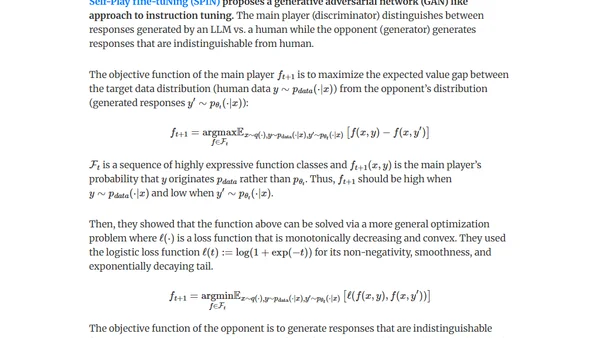

Explores methods for generating synthetic data (distillation & self-improvement) to fine-tune LLMs for pretraining, instruction-tuning, and preference-tuning.

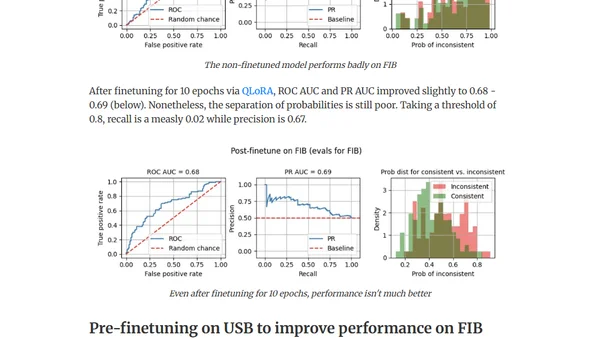

Explores using out-of-domain data to improve LLM finetuning for detecting factual inconsistencies (hallucinations) in text summaries.

Explores dataset-centric strategies for fine-tuning LLMs, focusing on instruction datasets to improve model performance without altering architecture.

Strategies for improving LLM performance through dataset-centric fine-tuning, focusing on instruction datasets rather than model architecture changes.

A guide to participating in the NeurIPS 2023 LLM Efficiency Challenge, focusing on efficient fine-tuning of large language models on a single GPU.

A guide to participating in the NeurIPS 2023 LLM Efficiency Challenge, covering setup, rules, and strategies for efficient LLM fine-tuning on limited hardware.

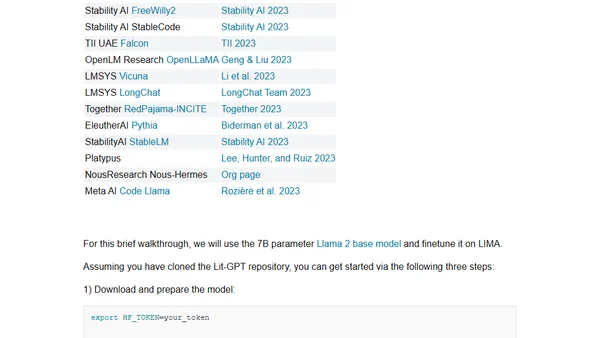

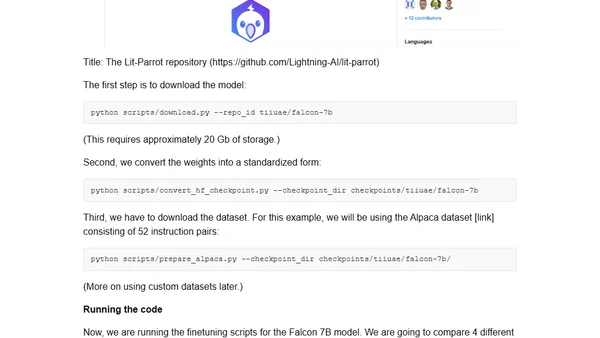

A guide to efficiently finetuning Falcon LLMs using parameter-efficient methods like LoRA and adapters to reduce compute costs.

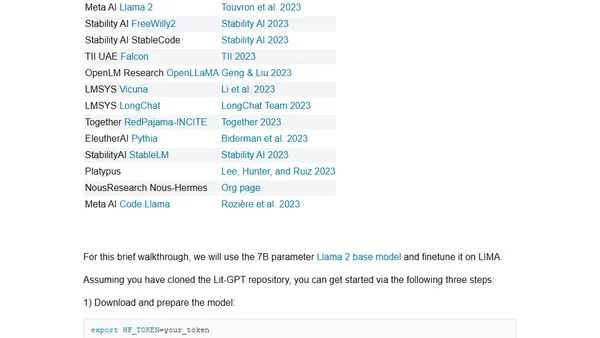

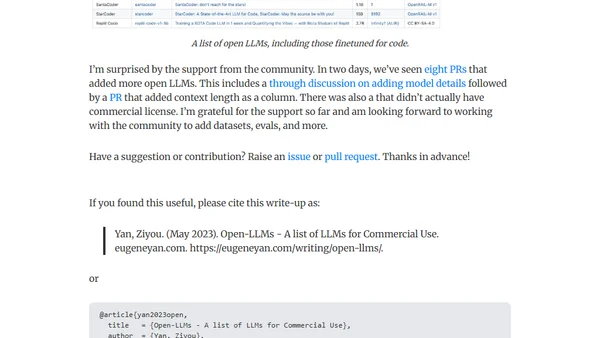

A curated list of open-source large language models (LLMs) available for commercial use, including community-contributed updates and details.

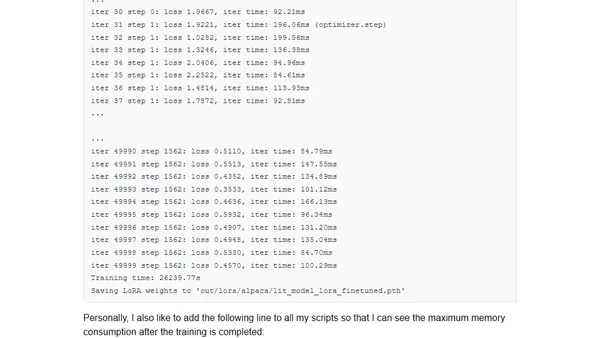

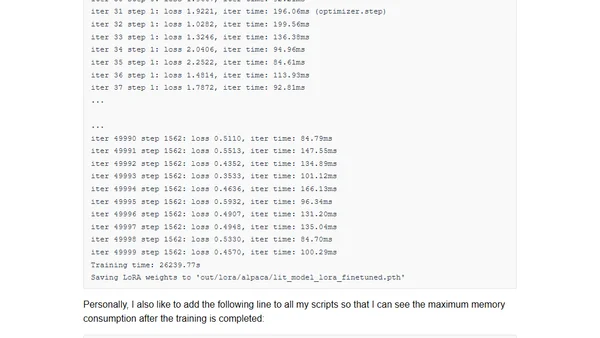

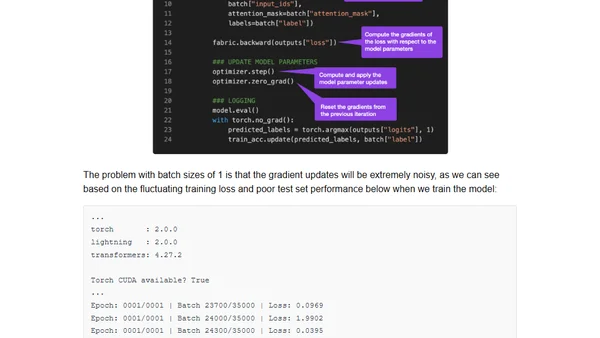

A guide to finetuning large language models on a single GPU using gradient accumulation to overcome memory limitations.
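Gradient accumulation splits a batch that does not fit in GPU memory into several micro-batches. It adds up their scaled gradients and then performs a single optimizer step. The plain-Python sketch below uses a hypothetical one-parameter model y_hat = w·x with a mean-squared-error loss to check the key property. With equal-sized micro-batches, dividing each micro-batch gradient by the number of accumulation steps reproduces the full-batch gradient.

```python
def grad_mse(w, xs, ys):
    """Gradient of the mean squared error for the model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def accumulated_grad(w, xs, ys, micro_batch_size):
    """Accumulate gradients over equal-sized micro-batches before a single update."""
    if len(xs) % micro_batch_size != 0:
        raise ValueError("batch size must be divisible by micro_batch_size")
    accum_steps = len(xs) // micro_batch_size
    grad = 0.0
    for step in range(accum_steps):
        start = step * micro_batch_size
        end = start + micro_batch_size
        # Scale by 1 / accum_steps, like dividing the loss before backward()
        grad += grad_mse(w, xs[start:end], ys[start:end]) / accum_steps
    return grad

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x + 1.0 for x in xs]   # synthetic targets
w, lr = 0.5, 0.01

full = grad_mse(w, xs, ys)
accum = accumulated_grad(w, xs, ys, micro_batch_size=2)
print(full, accum)                 # equal up to floating-point rounding
w -= lr * accum                    # one optimizer step per accumulated batch
```

In a PyTorch training loop, the same idea amounts to calling `(loss / accum_steps).backward()` on every micro-batch. You then call `optimizer.step()` and `optimizer.zero_grad()` only once every `accum_steps` micro-batches.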