10/25/2023

Adversarial Attacks on LLMs

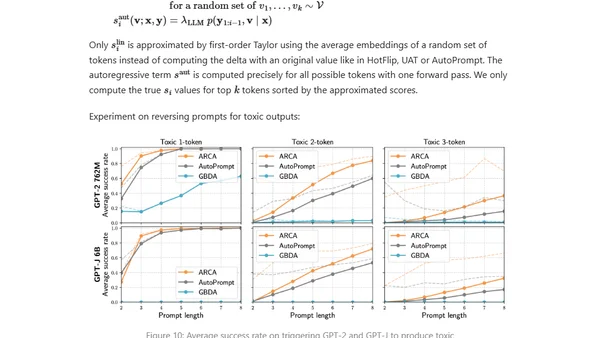

Explores adversarial attacks and jailbreak prompts that can make large language models produce unsafe or undesired outputs, bypassing safety measures.