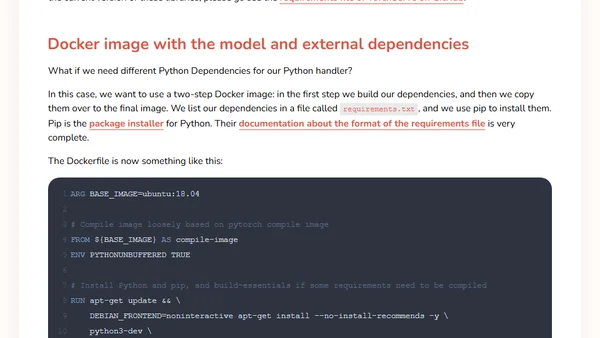

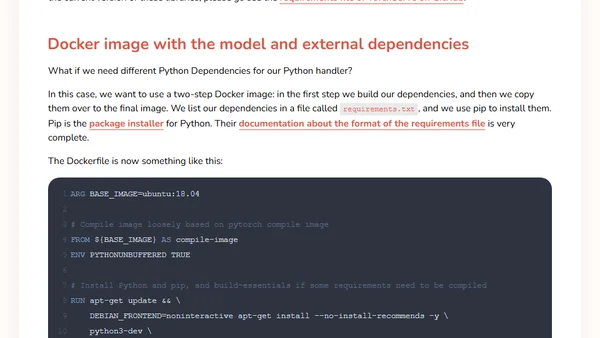

Create a PyTorch Docker image ready for production

A tutorial on creating a production-ready Docker image for PyTorch models using TorchServe, including model archiving and dependency management.

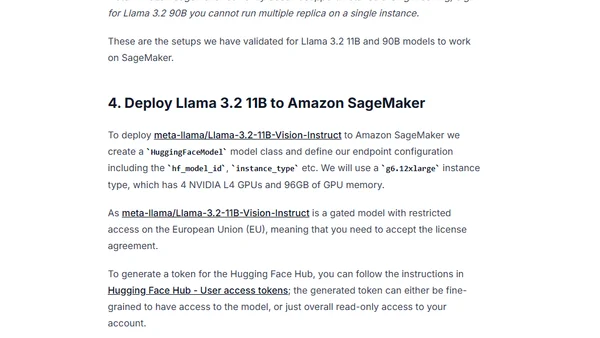

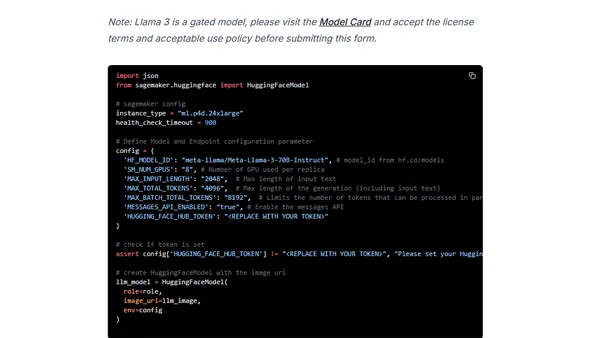

A technical guide on deploying Meta's Llama 3.2 Vision model on Amazon SageMaker using the Hugging Face LLM DLC.

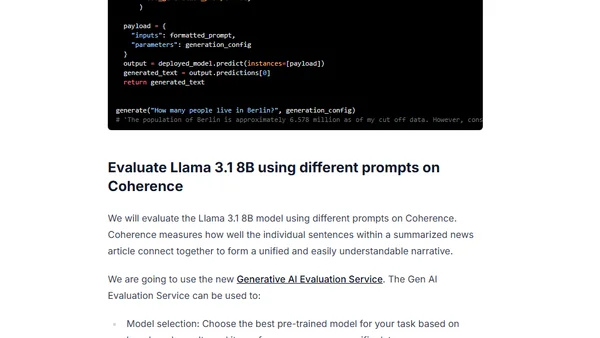

A technical guide on using Google's Vertex AI Gen AI Evaluation Service with Gemini to evaluate open LLMs such as Llama 3.1.

A technical guide on deploying Meta's Llama 3 70B model on Amazon SageMaker using the Hugging Face LLM DLC and Text Generation Inference.

A guide on running a Large Language Model (LLM) locally using Ollama for privacy and offline use, covering setup and performance tips.

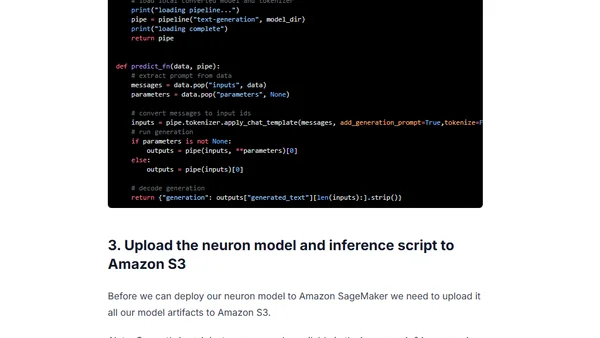

A tutorial on deploying Meta's Llama 2 7B model on AWS Inferentia2 using Amazon SageMaker and the optimum-neuron library.

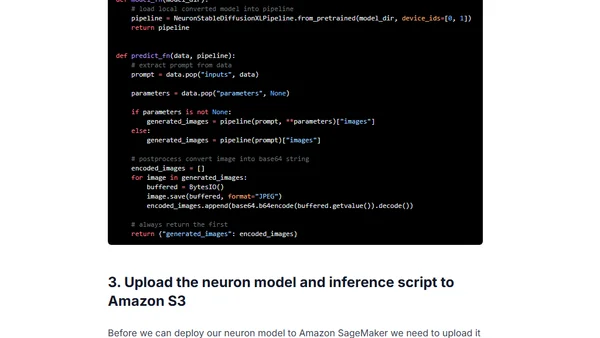

A tutorial on deploying Stable Diffusion XL for accelerated inference using AWS Inferentia2 and Amazon SageMaker.

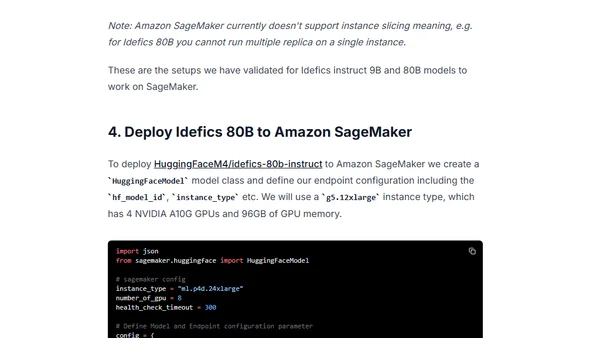

A technical guide on deploying Hugging Face's IDEFICS visual language models (9B & 80B parameters) to Amazon SageMaker using the LLM DLC.

A benchmark analysis of deploying Meta's Llama 2 models on Amazon SageMaker using Hugging Face's LLM Inference Container, evaluating cost, latency, and throughput.

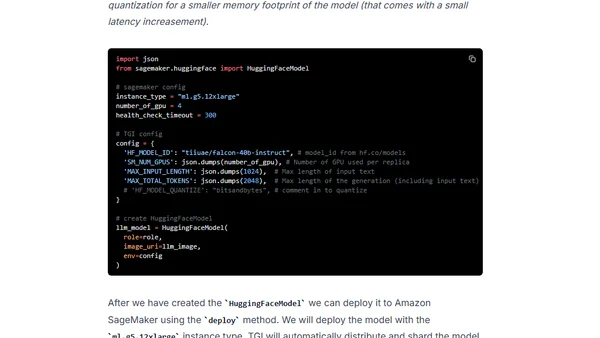

A technical guide on deploying the open-source Falcon 7B and 40B large language models to Amazon SageMaker using the Hugging Face LLM Inference Container.

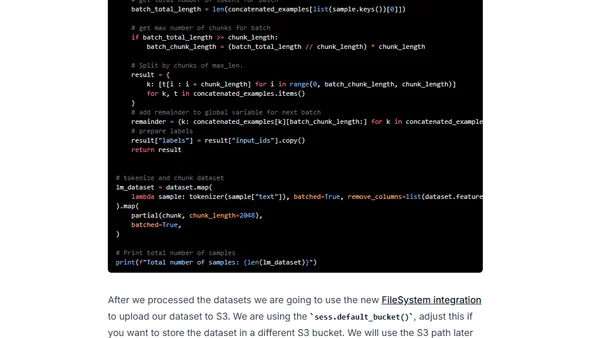

A technical guide on fine-tuning the BLOOMZ language model using PEFT and LoRA techniques, then deploying it on Amazon SageMaker.

A technical guide on deploying Google's FLAN-UL2 20B large language model for real-time inference using Amazon SageMaker and Hugging Face.

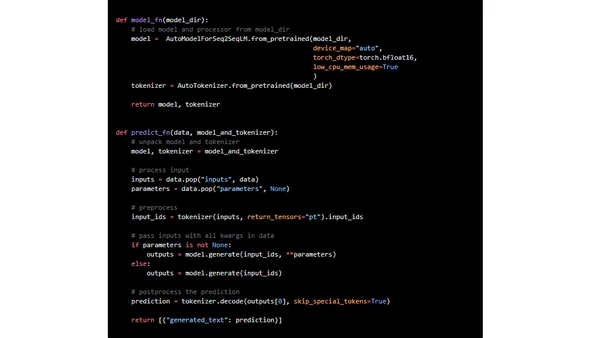

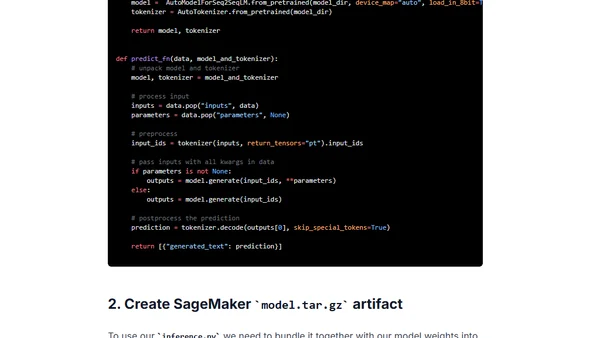

A technical guide on deploying the FLAN-T5-XXL large language model for real-time inference using Amazon SageMaker and Hugging Face.

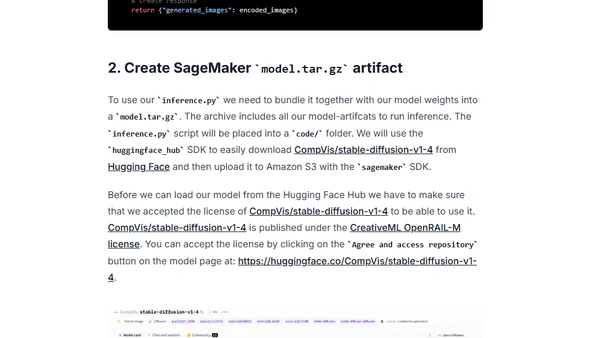

A technical guide on deploying the Stable Diffusion text-to-image model to Amazon SageMaker for real-time inference using the Hugging Face Diffusers library.

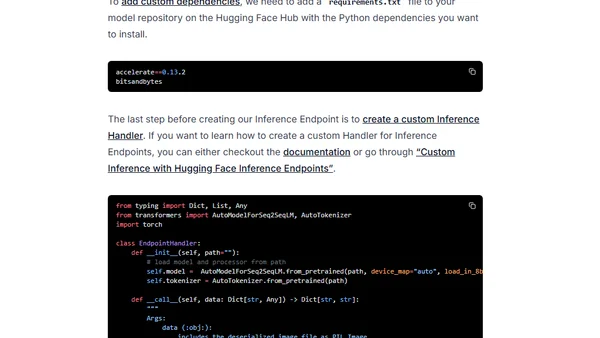

A tutorial on deploying the T5 11B language model for inference on a budget using Hugging Face Inference Endpoints.

Compares PyTorch's TorchScript tracing and scripting methods for model deployment, advocating for tracing as the preferred default approach.

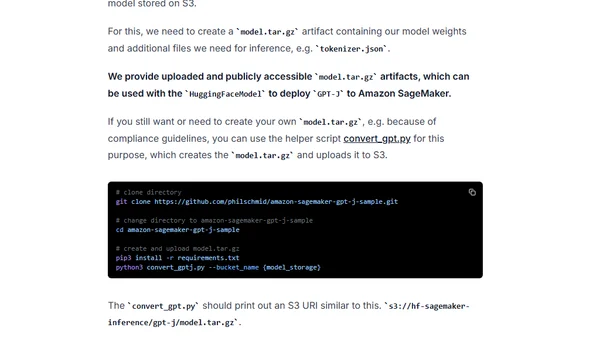

A guide to deploying the GPT-J 6B language model for production inference using Hugging Face Transformers and Amazon SageMaker.

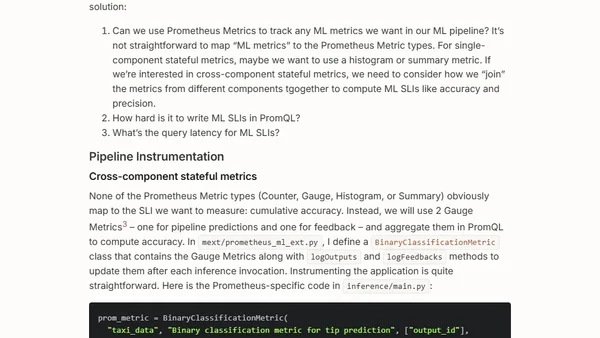

Explores the challenges of using Prometheus for ML pipeline monitoring, highlighting terminology issues and technical inadequacies.

A tutorial on deploying the BigScience T0_3B language model to AWS and Amazon SageMaker for production use.

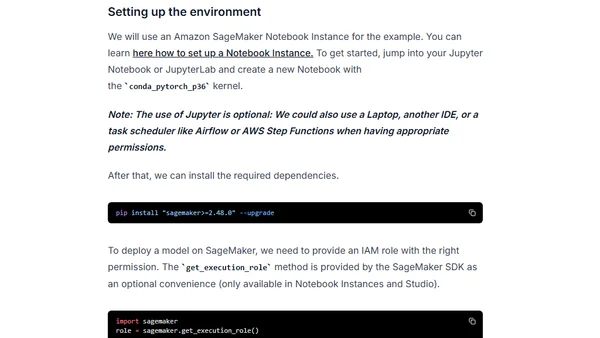

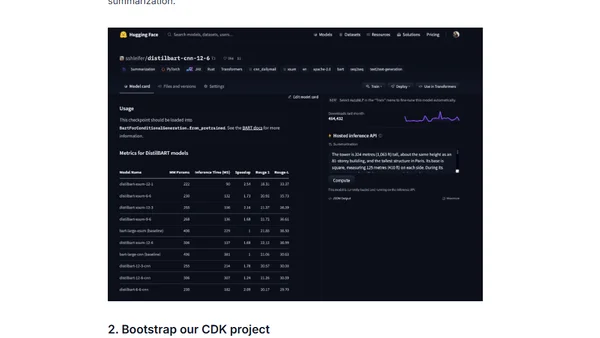

A tutorial on deploying Hugging Face Transformers models to production using Amazon SageMaker, AWS Lambda, and the AWS CDK for scalable, secure inference endpoints.