State of AI 2026 with Sebastian Raschka, Nathan Lambert, and Lex Fridman

A 4.5-hour interview discussing the state of AI in 2026, covering LLMs, geopolitics, training, open vs. closed models, AGI timelines, and industry implications.

Sebastian Raschka, PhD, is an LLM Research Engineer and AI expert bridging academia and industry, specializing in large language models, high-performance AI systems, and practical, code-driven machine learning.

An overview of inference-time scaling methods for improving LLM reasoning, categorizing techniques like chain-of-thought and self-consistency.

A 2025 year-in-review of Large Language Models, covering major developments in reasoning, architecture, costs, and predictions for 2026.

A curated list of notable LLM (Large Language Model) research papers published from July to December 2025, categorized by topic.

A historical overview of beginner-friendly 'Hello World' examples in machine learning and AI, from 2013's Random Forests to 2025's Qwen3 with RLVR.

A technical analysis of DeepSeek V3.2's architecture, sparse attention, and reinforcement learning updates, comparing it to other flagship AI models.

The author shares a structured method for reading technical books effectively, focusing on understanding concepts and practicing through code.

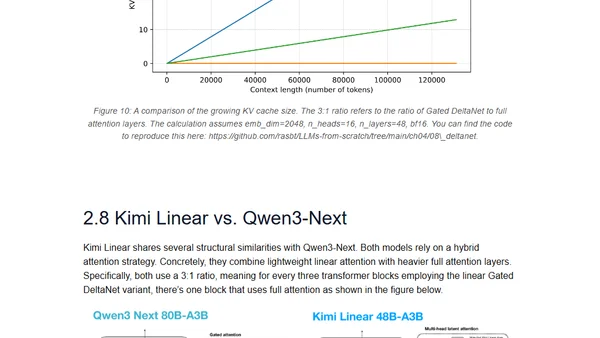

An overview of alternative LLM architectures beyond standard transformers, including linear attention hybrids, text diffusion models, and world models.

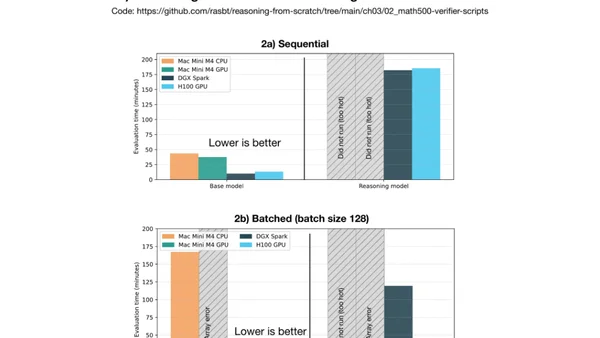

A technical comparison of the DGX Spark and Mac Mini M4 Pro for local PyTorch development and LLM inference, including benchmarks.

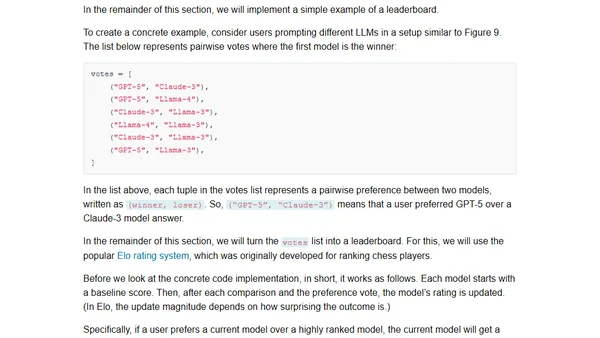

Explores four main methods for evaluating Large Language Models (LLMs), including code examples for implementing each approach from scratch.

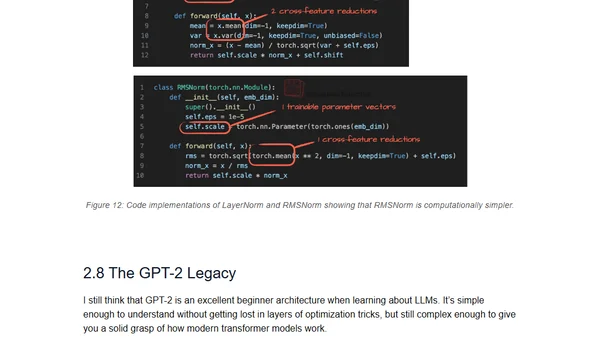

A hands-on tutorial implementing the Qwen3 large language model architecture from scratch using pure PyTorch, explaining its core components.

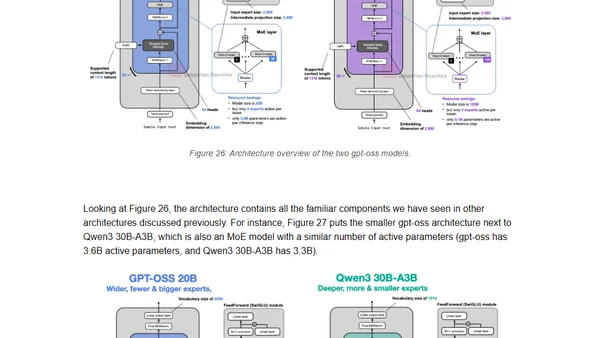

Analyzes the architectural advancements in OpenAI's new open-weight gpt-oss models, comparing them to GPT-2 and other modern LLMs.

A technical comparison of architectural changes in major Large Language Models (LLMs) released from 2024 to 2025, focusing on structural innovations rather than benchmark results.

A curated list of key LLM research papers from the first half of 2025, organized by topic such as reasoning models and reinforcement learning.

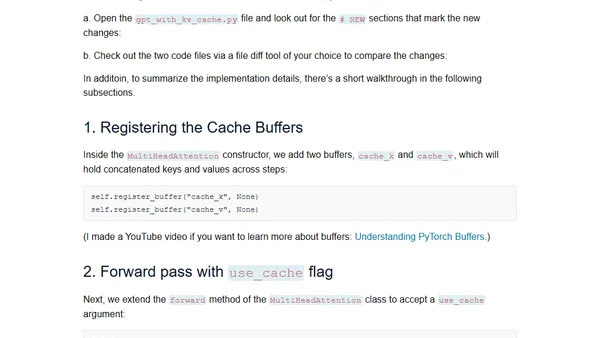

A technical tutorial explaining the concept and implementation of KV caches for efficient inference in Large Language Models (LLMs).

A course with practical video tutorials that teaches how to code Large Language Models from scratch in order to understand their inner workings in depth.

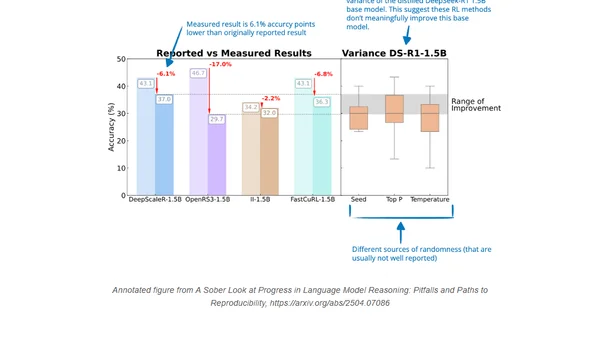

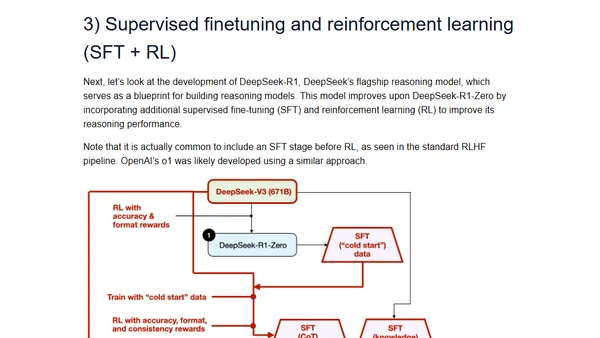

Explores the latest developments in using reinforcement learning to improve reasoning capabilities in large language models (LLMs).

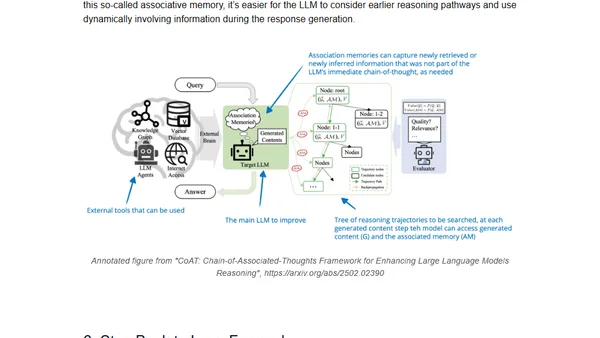

An introduction to reasoning in Large Language Models, covering key concepts like chain-of-thought and methods to improve LLM reasoning abilities.

Explores recent research on improving LLM reasoning through inference-time compute scaling methods, comparing various techniques and their impact.

Explores four main approaches to building and enhancing reasoning capabilities in Large Language Models (LLMs) for complex tasks.