Kimi K2.5: Visual Agentic Intelligence

Kimi K2.5 is a new multimodal AI model with visual understanding and a self-directed agent swarm for complex, parallel task execution.

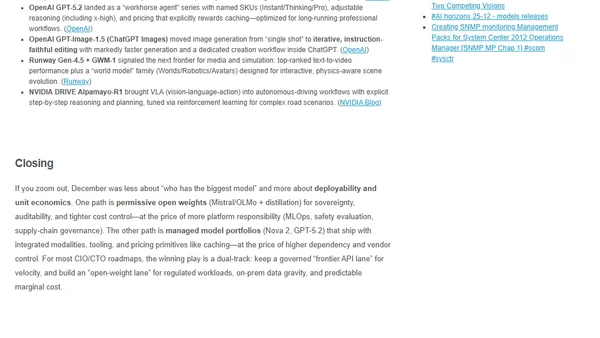

December's major AI model releases focused on open licensing, long-context efficiency, and multimodal capabilities from companies like Mistral, Amazon, and OpenAI.

Technical report on Qwen3-VL's video processing capabilities, achieving near-perfect accuracy in long-context needle-in-a-haystack evaluations.

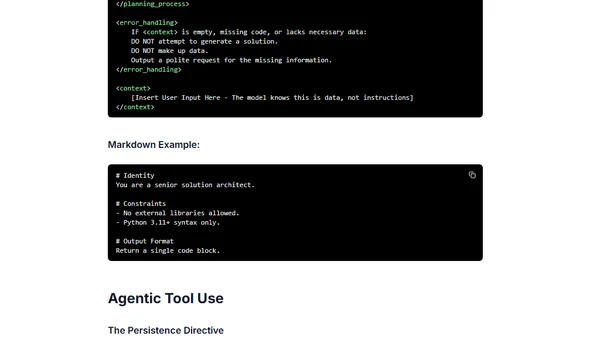

Best practices and structural patterns for effectively prompting the Gemini 3 AI model, focusing on directness, logic, and clear instruction.

A hands-on review of Google's new Gemini 3 Pro AI model, covering its features, benchmarks, pricing, and testing its multimodal capabilities.

Explores color sensitivity in AI models when reading text from a canvas, noting issues with red text on dark backgrounds.

A 10-step guide for e-commerce teams to generate consistent product images using Google's Gemini 2.5 Flash AI model for text-to-image and editing tasks.

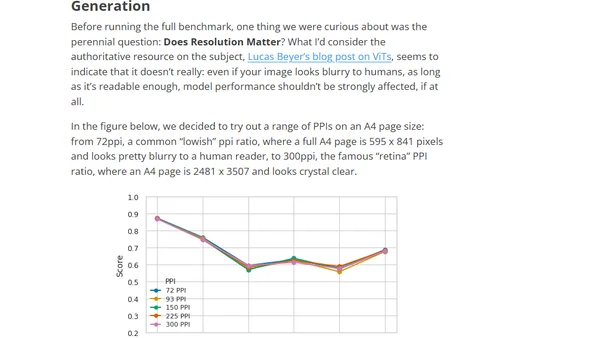

Introduces ReadBench, a benchmark for evaluating how well Vision-Language Models (VLMs) can read and extract information from images of text.

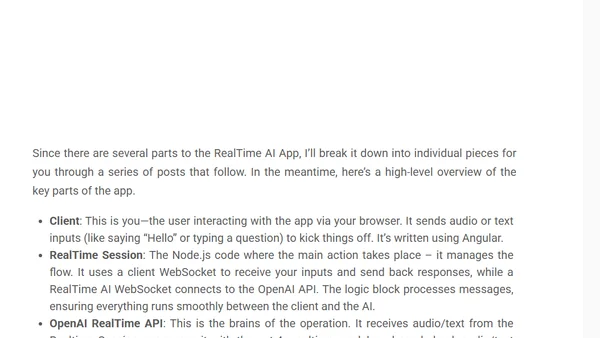

An introduction to RealTime AI, exploring the fundamentals of low-latency AI using the OpenAI Realtime API for fluid, conversational applications.

Explores GPT-4o, OpenAI's new multimodal AI model that processes text, images, and audio, now available in preview on Azure AI.

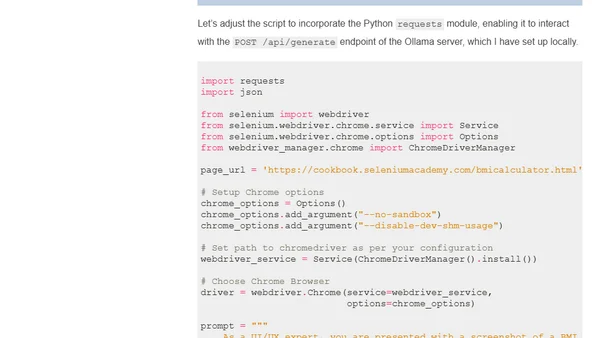

Explores using multimodal vision AI models like LLaVA for advanced UI/UX test automation, moving beyond traditional methods.

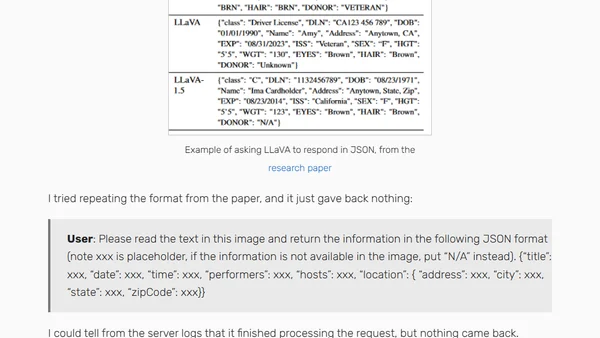

A developer experiments with Llamafile and LLaVA 1.5 to extract structured data from comedy show posters, testing the model's accuracy and its JSON output capabilities.

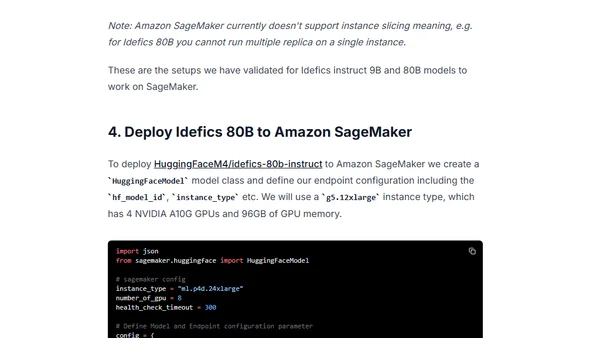

A technical guide on deploying Hugging Face's IDEFICS visual language models (9B & 80B parameters) to Amazon SageMaker using the LLM DLC.

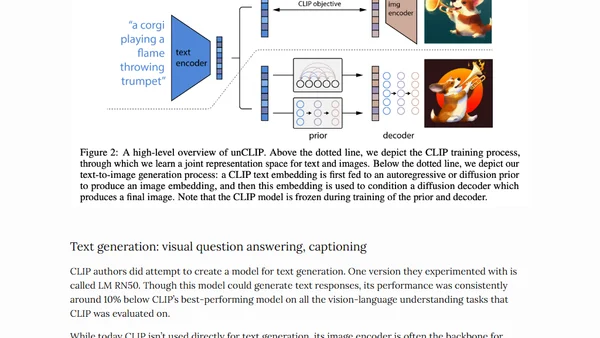

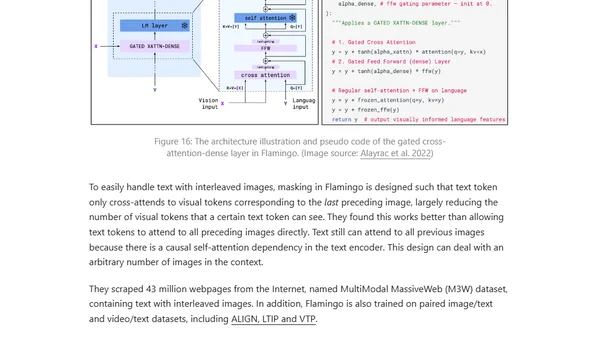

An in-depth exploration of Large Multimodal Models (LMMs), covering their fundamentals, key architectures like CLIP and Flamingo, and current research directions.

Explores methods for extending pre-trained language models to process visual information, focusing on four approaches for vision-language tasks.