A Social Network for A.I. Bots Only. No Humans Allowed.

A blog post discussing a New York Times article about Moltbook, a social network exclusively for AI bots, and the author's insights on AI behavior.


A 2025 AI research review covering tabular machine learning, the societal impacts of AI scale, and open-source data-science tools.

Andrej Karpathy notes a 600x cost reduction in training a GPT-2-level model over 7 years, highlighting rapid efficiency gains in AI.
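To put the 600x figure on a yearly scale, one can compute the constant annual factor that compounds to 600x over 7 years. This is a minimal sketch. It assumes a smooth geometric decline, which the summary does not claim (real gains likely arrived in uneven jumps). `annual_factor` is a hypothetical helper named for illustration only.

```python
def annual_factor(total_reduction: float, years: float) -> float:
    """Constant per-year improvement factor that compounds to `total_reduction`.

    Assumes the reduction is geometric (same multiplier every year),
    which is an illustrative simplification, not a reported fact.
    """
    return total_reduction ** (1 / years)


factor = annual_factor(600, 7)
print(f"{factor:.2f}x cheaper per year")  # prints "2.49x cheaper per year"
```

Under that assumption, the cost of training a GPT-2-level model fell by roughly 2.5x each year.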

A reflection on a decade-old blog post about deep learning, examining past predictions on architecture, scaling, and the field's evolution.

Leading AI researchers debate whether current scaling and innovations are sufficient to achieve Artificial General Intelligence (AGI).

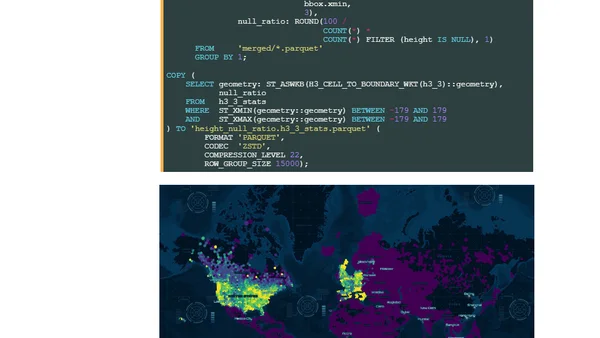

Analysis of Microsoft's 2026 Global ML Building Footprints dataset, including technical setup and data exploration using DuckDB and QGIS.

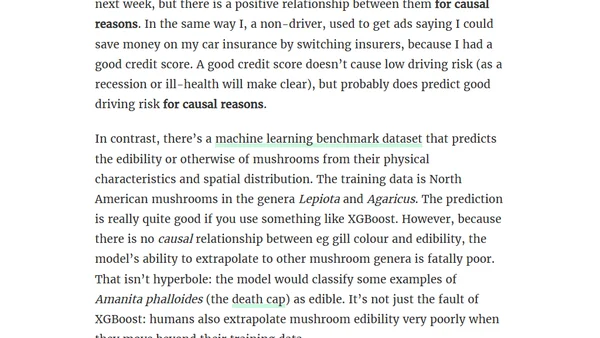

Explores whether predictive statistical models require causal relationships to be useful, using examples from data sampling and real-world scenarios.

A syllabus for a university course exploring the principles of feedback, control theory, and their connections to machine learning and optimization.

A professor reflects on the intersection of machine learning and control theory, discussing the Learning for Dynamics and Control (L4DC) conference and the need for a merged perspective.

The author announces their new role as Probabl's CSO to accelerate development of the scikit-learn machine learning library and its ecosystem.

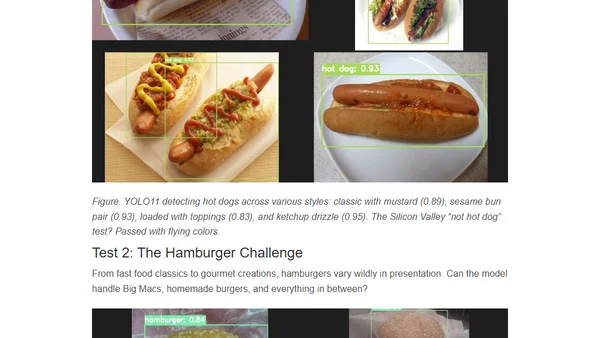

A technical comparison of YOLO-based food detection from 2018 to 2026, showing the evolution of deep learning tooling and ease of use.

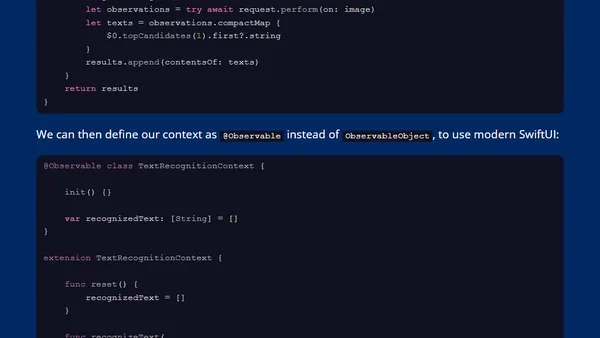

A tutorial on using Apple's Vision framework to extract text from images in Swift, covering both old and new APIs.

Explores the risk of AI model collapse as LLMs increasingly train on AI-generated synthetic data, potentially degrading future model quality.
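A common toy illustration of model collapse replaces the language model with a one-dimensional Gaussian. Each generation is fitted only to samples drawn from the previous generation's fit, never to the original data. This is a hedged sketch under those assumptions, not the analysis from the post. `simulate_collapse` and its parameters are hypothetical names chosen for illustration.

```python
import random
import statistics


def simulate_collapse(generations: int = 1000, n: int = 10, seed: int = 0) -> list[float]:
    """Toy model-collapse loop (illustrative assumption, not an LLM).

    Generation 0 is the "real" distribution N(0, 1). Every later generation
    fits a mean and a maximum-likelihood std to n samples drawn from the
    previous generation's fitted Gaussian, i.e. it trains only on synthetic data.
    Returns the fitted std at each generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(n)]
        mu = statistics.fmean(samples)
        # pstdev is the MLE estimate; it is biased low, so the spread tends to shrink
        sigma = statistics.pstdev(samples, mu)
        history.append(sigma)
    return history


history = simulate_collapse()
print(history[0], history[-1])
```

With small samples, the fitted spread drifts toward zero over many generations. The tails of the original distribution are lost first, which is the intuition behind the degradation concern.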

A reflection on the arrival of Artificial General Intelligence (AGI), arguing that its 'general' nature distinguishes it from previous purpose-built AI models.


An infrastructure engineer explores AI Engineering, defining the role and its focus on using pre-trained models, prompt engineering, and practical application building.

A 2025 year-in-review analysis of large language models (LLMs), covering key developments in reasoning, architecture, costs, and predictions for 2026.

A curated list of notable LLM research papers from the second half of 2025, categorized by topics like reasoning, training, and multimodal models.


A year-in-review blog post reflecting on machine learning course blogging, revisiting 'The Bitter Lesson', and critiquing trends in ML and economics.