LLM Research Insights: Instruction Masking and New LoRA Finetuning Experiments

Analysis of new LLM research on instruction masking and LoRA finetuning methods, with practical insights for developers.

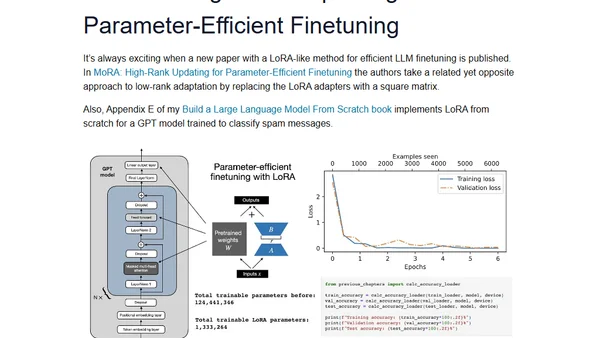

A guide to efficiently finetuning Falcon LLMs using parameter-efficient methods like LoRA and Adapters to reduce compute time and cost.

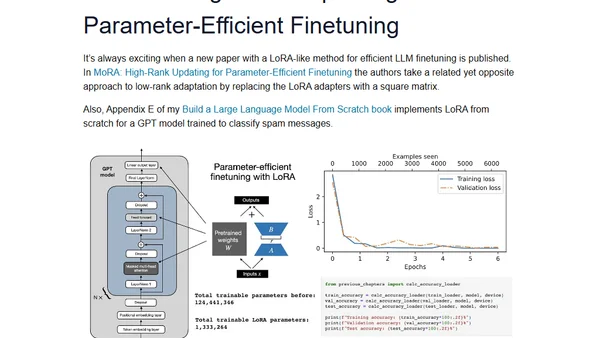

Learn about Low-Rank Adaptation (LoRA), a parameter-efficient method for finetuning large language models with reduced computational costs.

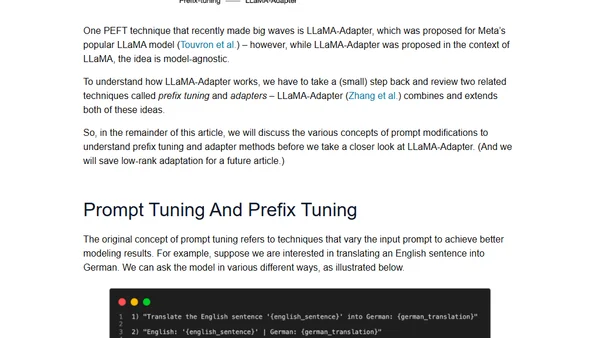

A guide to parameter-efficient finetuning methods for large language models, covering techniques like prefix tuning and LLaMA-Adapters.