Understanding and Coding the KV Cache in LLMs from Scratch

Explains the KV cache technique for efficient LLM inference with a from-scratch code implementation.
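The core idea can be sketched in plain Python. This is a minimal single-head sketch under stated assumptions, not the article's own implementation: the toy dimension, the random weights, and the names `KVCache`, `decode_with_cache`, and `decode_without_cache` are hypothetical illustrations. During autoregressive decoding, the keys and values of earlier tokens never change, so the cache stores them and each step only projects the newest token. The script checks that the cached outputs match naive recomputation of the whole prefix.

```python
import math
import random

# Hypothetical toy example: one attention head, random weights, no batching.
# Causal order is implicit because each step only attends over the prefix.


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, keys, values):
    """Scaled dot-product attention of one query over the given keys/values."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


class KVCache:
    """Hypothetical cache holding the key and value vector of every past token."""

    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, k, v):
        self.keys.append(k)
        self.values.append(v)


def decode_with_cache(xs, Wq, Wk, Wv):
    """Process tokens one at a time, projecting K and V only for the new token."""
    cache = KVCache()
    outputs = []
    for x in xs:
        q = matvec(Wq, x)
        cache.append(matvec(Wk, x), matvec(Wv, x))  # earlier K/V are reused, not recomputed
        outputs.append(attend(q, cache.keys, cache.values))
    return outputs


def decode_without_cache(xs, Wq, Wk, Wv):
    """Naive baseline: recompute K and V for the entire prefix at every step."""
    outputs = []
    for t in range(len(xs)):
        prefix = xs[: t + 1]
        keys = [matvec(Wk, x) for x in prefix]
        values = [matvec(Wv, x) for x in prefix]
        q = matvec(Wq, xs[t])
        outputs.append(attend(q, keys, values))
    return outputs


def random_matrix(rows, cols):
    """Random Gaussian matrix used as toy projection weights or embeddings."""
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    random.seed(0)
    d = 4
    Wq, Wk, Wv = random_matrix(d, d), random_matrix(d, d), random_matrix(d, d)
    xs = random_matrix(5, d)  # five toy token embeddings

    cached = decode_with_cache(xs, Wq, Wk, Wv)
    naive = decode_without_cache(xs, Wq, Wk, Wv)
    same = all(
        math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-12)
        for ra, rb in zip(cached, naive)
        for a, b in zip(ra, rb)
    )
    print("outputs match:", same)
```

With T tokens, the cached loop computes 2T key/value projections while the naive loop computes T(T+1) (10 versus 30 for the five toy tokens here); attention over the growing prefix is still evaluated at every step. A practical implementation would typically keep per-layer, per-head tensors rather than Python lists.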

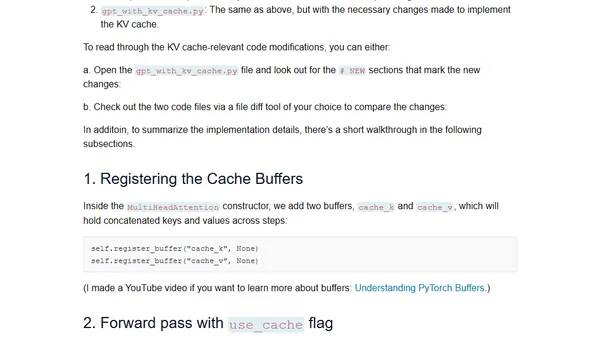

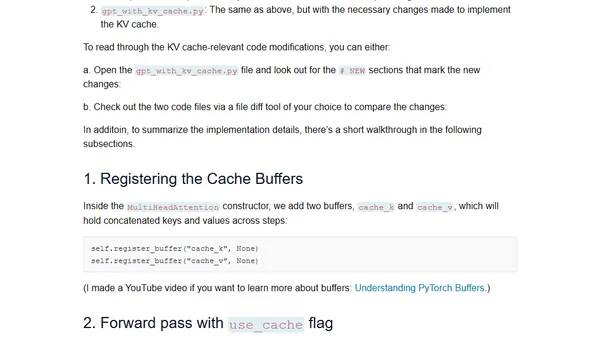

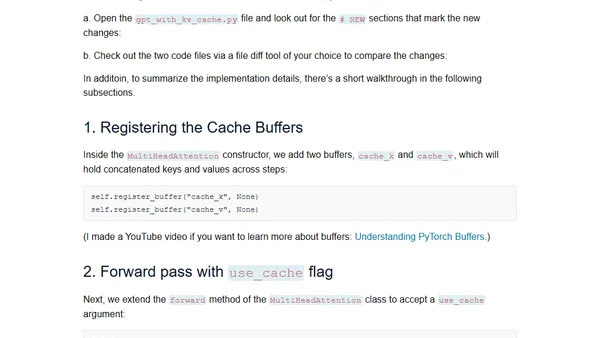

A technical tutorial explaining the concept and implementation of KV caches for efficient inference in Large Language Models (LLMs).

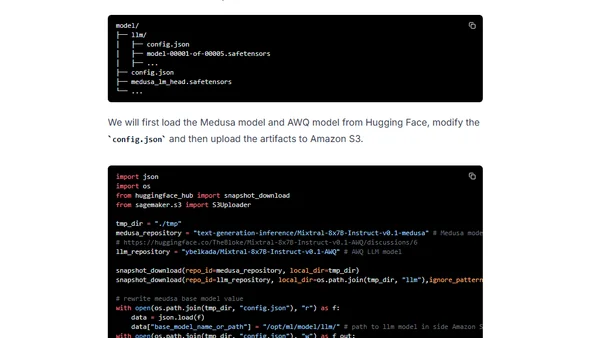

A technical guide on accelerating the Mixtral 8x7B LLM using speculative decoding (Medusa) and quantization (AWQ) for deployment on Amazon SageMaker.

A developer's monthly curated list of tech resources, covering databases, LLM performance, AI in development, and microservices.

Guide to scaling LLM inference on Amazon SageMaker using new multi-replica endpoints for improved throughput and cost efficiency.

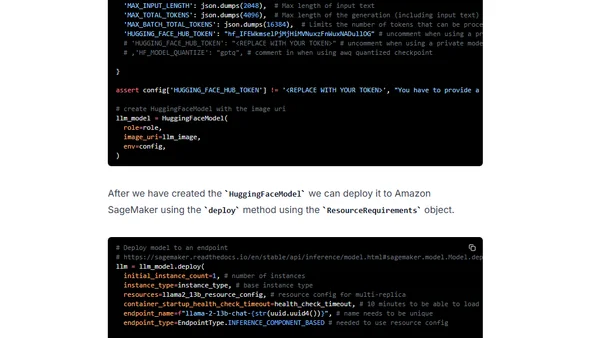

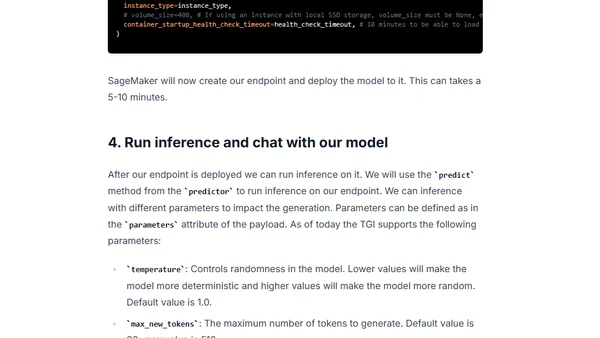

A technical guide on deploying the open-source Falcon 7B and 40B large language models to Amazon SageMaker using the Hugging Face LLM Inference Container.

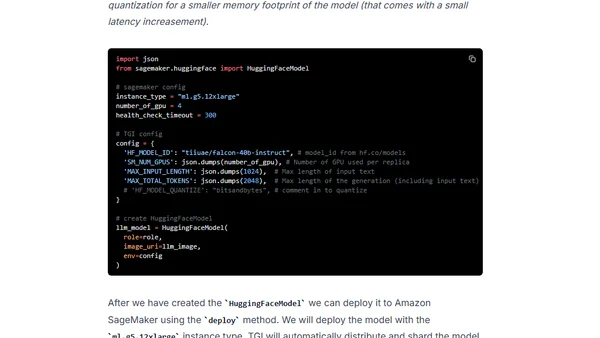

Guide to deploying open-source LLMs like BLOOM and Open Assistant to Amazon SageMaker using Hugging Face's new LLM Inference Container.