As Rocks May Think

A developer explores using AI coding agents like Claude to automate software development, research, and experimentation, focusing on implementing AlphaGo from scratch.

A reflection on a decade-old blog post about deep learning, examining past predictions on architecture, scaling, and the field's evolution.

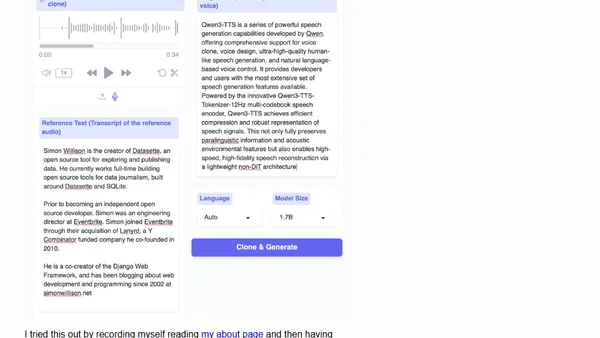

Qwen3-TTS, a family of advanced multilingual text-to-speech models, is now open source, featuring voice cloning and description-based control.

A robotics engineer reflects on leaving humanoid robotics company 1X, discussing the company's growth and the 'magical objects' driving AI progress.

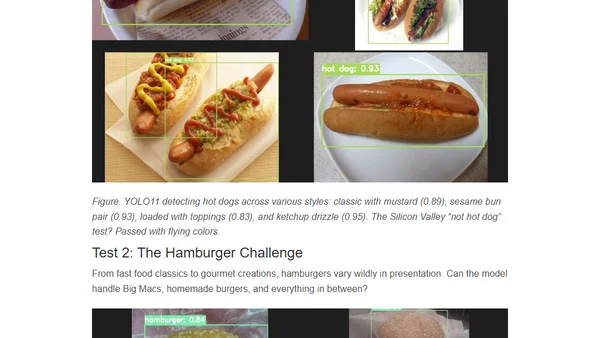

A technical comparison of YOLO-based food detection from 2018 to 2026, showing the evolution of deep learning tooling and ease of use.

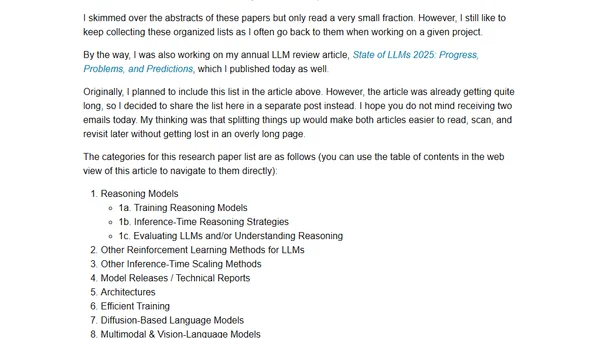

A curated list of notable LLM (Large Language Model) research papers published from July to December 2025, categorized by topic.

Explores the application of Graph Neural Networks, embeddings, and foundation models to spatial data science, with practical examples in R.

A course teaching how to code Large Language Models (LLMs) from scratch to deeply understand their inner workings and fundamentals.

A course teaching how to code Large Language Models from scratch to deeply understand their inner workings, with practical video tutorials.

Explains key terms such as AI, ML, deep learning, and LLMs to help engineers use them correctly.

Explores the evolution of AI from symbolic systems to modern Large Language Models (LLMs), detailing their capabilities and limitations.

An introduction to reasoning in Large Language Models, covering key concepts like chain-of-thought and methods to improve LLM reasoning abilities.

An AI researcher shares her journey into GPU programming and introduces WebGPU Puzzles, a browser-based tool for learning GPU fundamentals from scratch.

A 3-hour coding workshop video covering the implementation, training, and use of Large Language Models (LLMs) from scratch.

A technical article exploring deep neural networks by comparing classic computational methods to modern ML, using sine function calculation as an example and implementing it in Kotlin.

Introduces CARTE, a foundation model for tabular data, explaining its architecture, pretraining on knowledge graphs, and results.

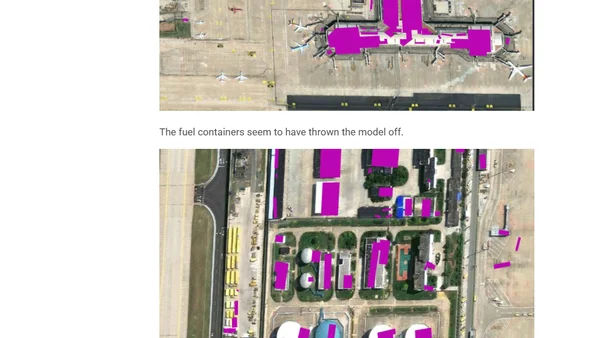

Analysis of a research paper detailing an AI model that extracted 281 million building footprints from satellite imagery across East Asia.

An analysis of the ARC Prize AI benchmark, questioning whether human-level intelligence can be achieved solely through deep learning and transformers.

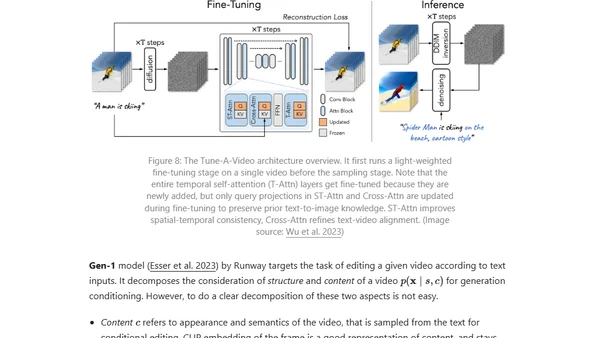

Explores the application of diffusion models to video generation, covering technical challenges, parameterization, and sampling methods.

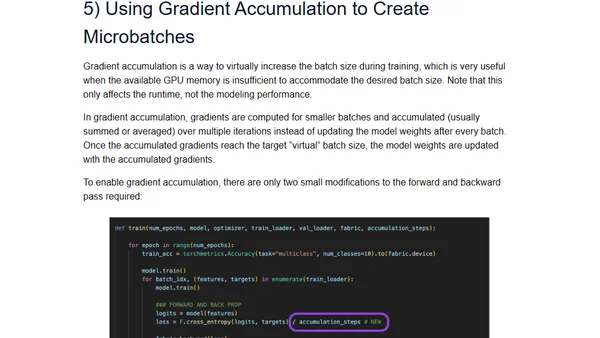

A guide to 9 PyTorch techniques for drastically reducing memory usage when training vision transformers and LLMs, enabling training on consumer hardware.