Deploy QwQ-32B-Preview, the best open reasoning model, on AWS with Hugging Face

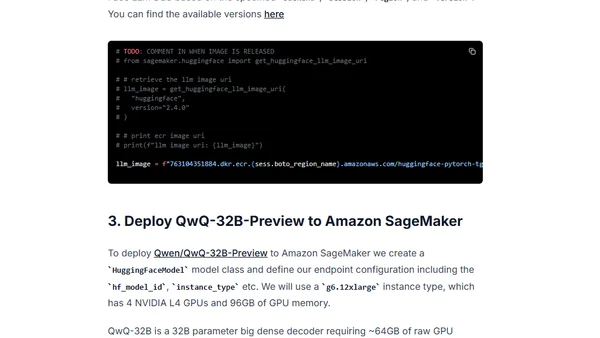

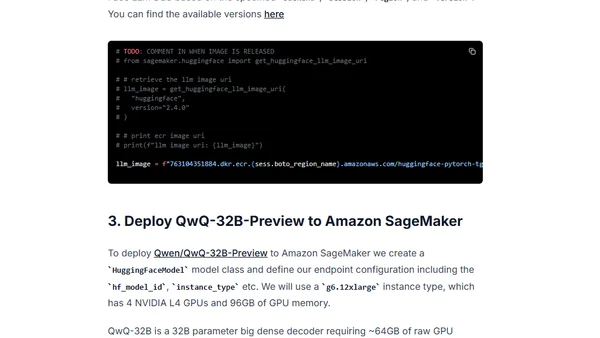

A technical guide on deploying the QwQ-32B-Preview open-source reasoning model on Amazon SageMaker using Hugging Face's tools.

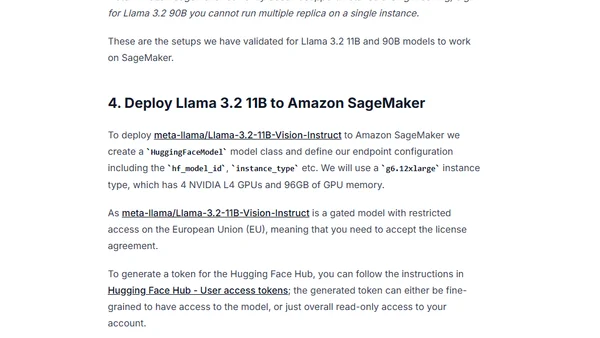

A technical guide on deploying Meta's Llama 3.2 Vision model on Amazon SageMaker using the Hugging Face LLM DLC.

A developer shares their experience taking the AWS Certified AI Practitioner beta exam, covering study methods, key topics, and exam structure.

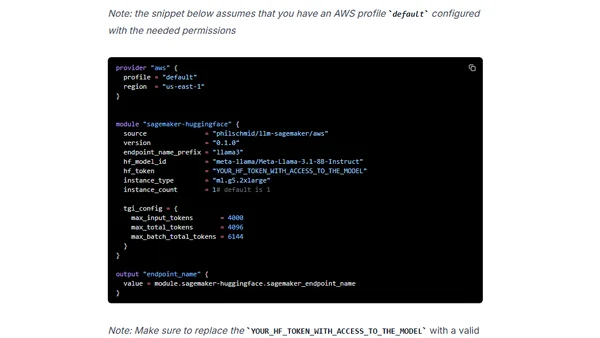

A guide to deploying open-source LLMs like Llama 3 to Amazon SageMaker using Terraform for Infrastructure as Code.

A guide to fine-tuning and deploying custom embedding models for RAG applications on Amazon SageMaker using Sentence Transformers v3.

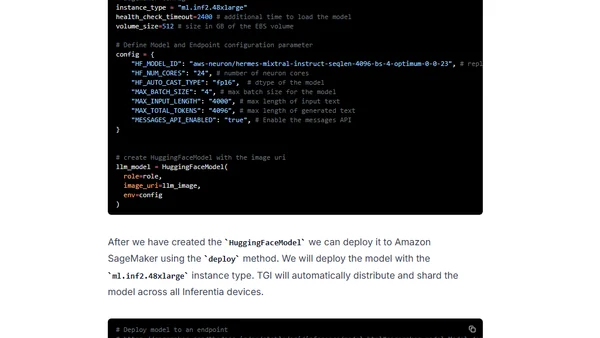

A technical guide on deploying the Mixtral 8x7B LLM on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

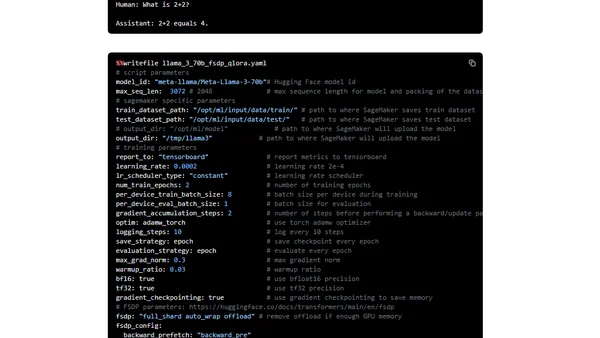

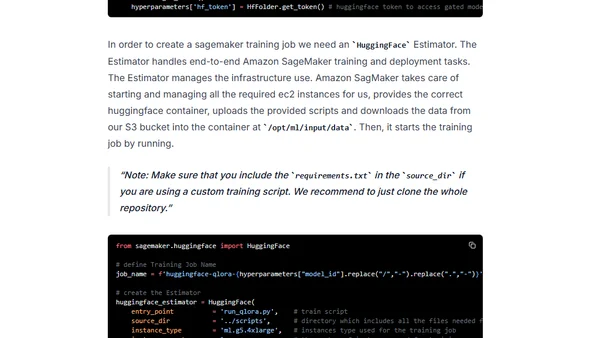

A technical guide on fine-tuning the Llama 3 LLM using PyTorch FSDP and QLoRA on Amazon SageMaker for efficient training.

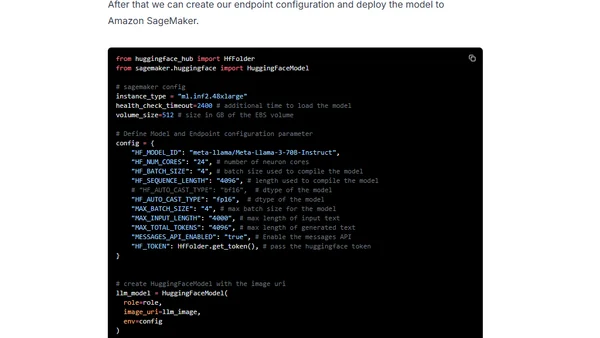

A technical guide on deploying Meta's Llama 3 70B Instruct model on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

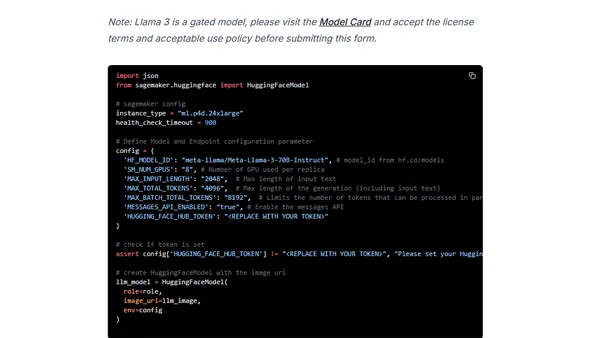

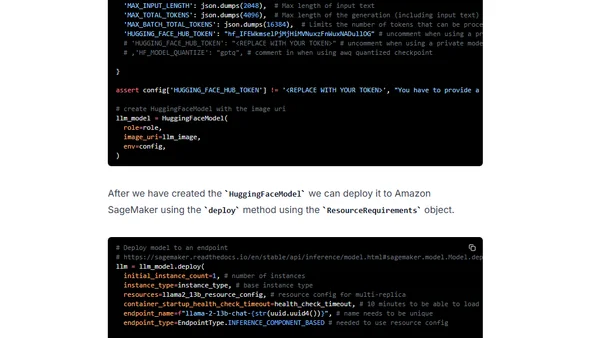

A technical guide on deploying Meta's Llama 3 70B model on Amazon SageMaker using the Hugging Face LLM DLC and Text Generation Inference.

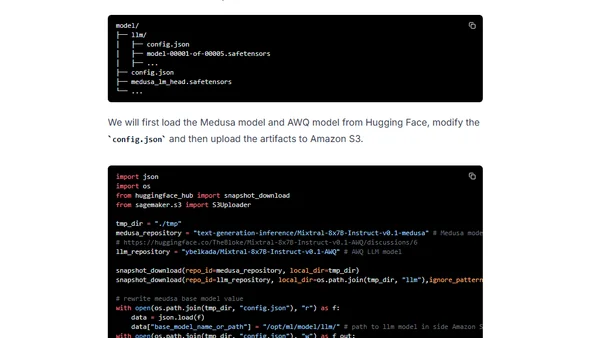

A technical guide on accelerating the Mixtral 8x7B LLM using speculative decoding (Medusa) and quantization (AWQ) for deployment on Amazon SageMaker.

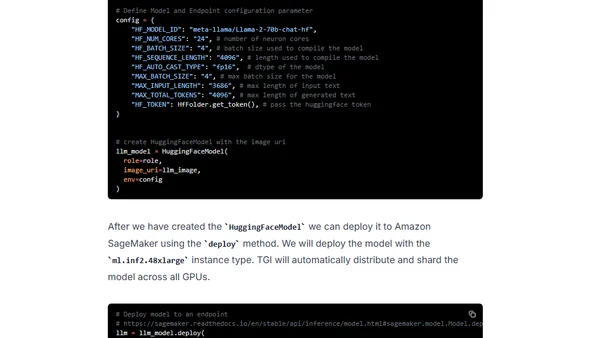

A technical guide on deploying Meta's Llama 2 70B large language model on AWS Inferentia2 hardware using Hugging Face Optimum and SageMaker.

A technical guide on fine-tuning and evaluating open-source Large Language Models (LLMs) using Amazon SageMaker and Hugging Face libraries.

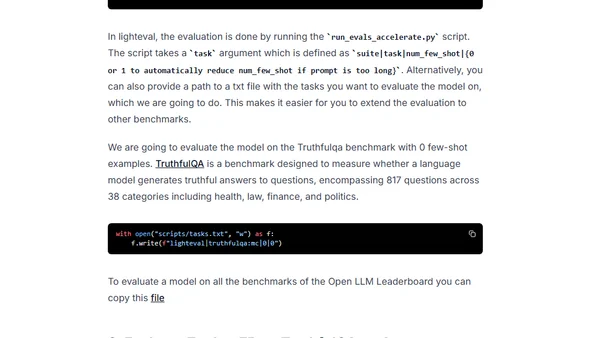

A tutorial on evaluating Large Language Models using Hugging Face's Lighteval library on Amazon SageMaker, focusing on benchmarks like TruthfulQA.

Guide to scaling LLM inference on Amazon SageMaker using new multi-replica endpoints for improved throughput and cost efficiency.

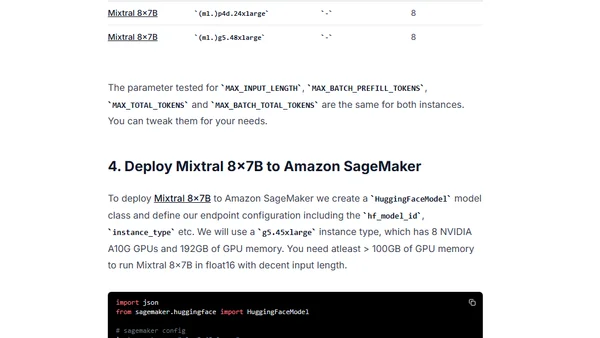

A technical guide on deploying the Mixtral 8x7B open-source LLM from Mistral AI to Amazon SageMaker using the Hugging Face LLM DLC.

Tutorial on deploying embedding models using AWS Inferentia2 and Amazon SageMaker for accelerated inference performance.

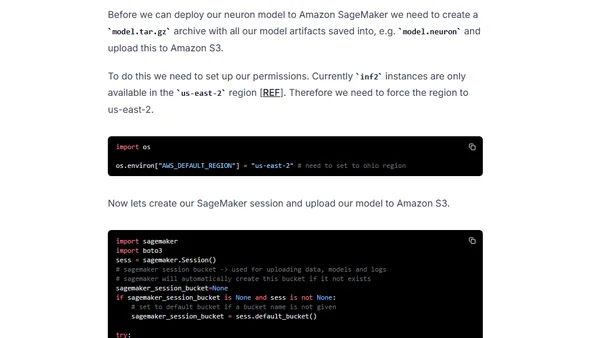

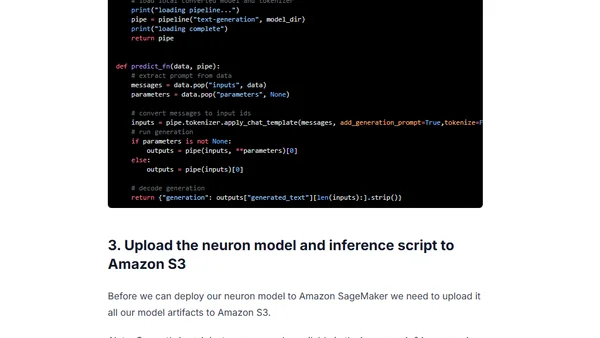

A tutorial on deploying Meta's Llama 2 7B model on AWS Inferentia2 using Amazon SageMaker and the optimum-neuron library.

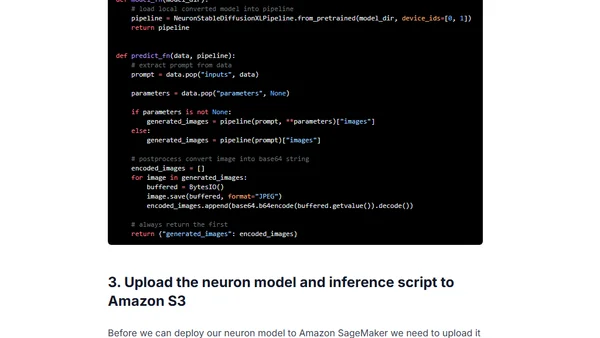

A tutorial on deploying Stable Diffusion XL for accelerated inference using AWS Inferentia2 and Amazon SageMaker.

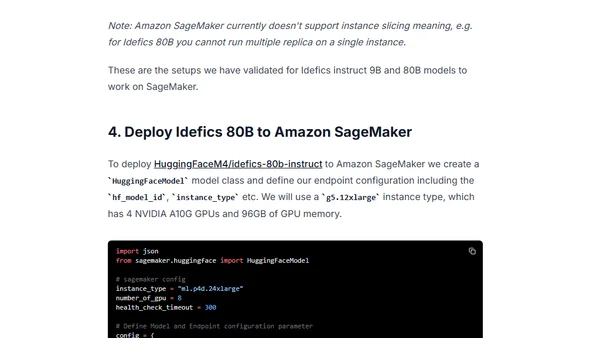

A technical guide on deploying Hugging Face's IDEFICS visual language models (9B & 80B parameters) to Amazon SageMaker using the LLM DLC.

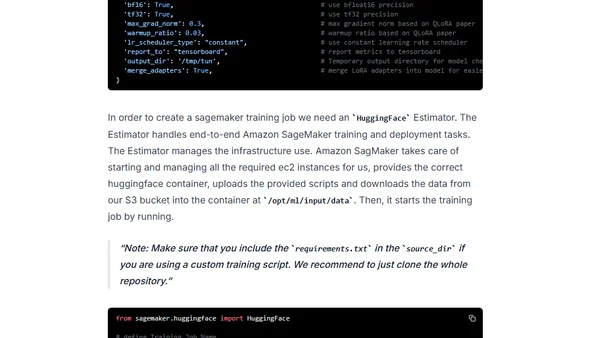

A technical guide on fine-tuning the Mistral 7B large language model using QLoRA and deploying it on Amazon SageMaker with Hugging Face tools.