Nova - The AI Co-Designer That Learns Your Taste

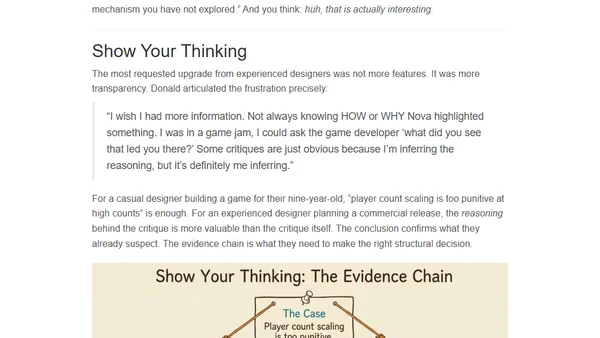

Introduces Nova, an AI co-designer for board game creation that learns a designer's preferences and remembers past decisions through conversation.

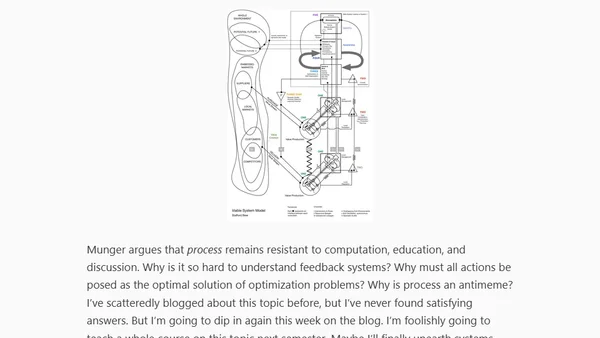

A professor reflects on the intersection of machine learning and control theory, discussing the Learning for Dynamics and Control (L4DC) conference and the need for a merged perspective.

OpenAI researchers propose 'confessions' as a method to improve AI honesty by training models to self-report misbehavior in reinforcement learning.

A 2025 year-in-review of Large Language Models, covering major developments in reasoning, architecture, costs, and predictions for 2026.

A review of key paradigm shifts in Large Language Models (LLMs) in 2025, focusing on RLVR training and new conceptual models of AI intelligence.

Explores the tension between optimization and systems-level thinking in AI-driven scientific discovery and computational ethics.

A developer reflects on AI agent architectures, context management, and the industry's overemphasis on model development vs. building applications.

A critique of Reformist RL's inefficiency and a proposal for more effective alternatives in reinforcement learning.

A simplified, non-technical definition of reinforcement learning as an iterative optimization process based on external feedback.

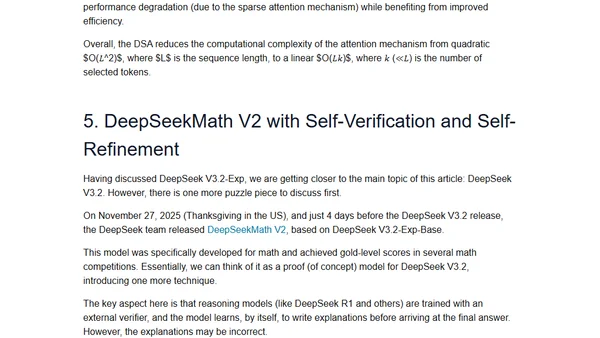

A technical analysis of DeepSeek V3.2's architecture, sparse attention, and reinforcement learning updates, comparing it to other flagship AI models.

A technical analysis of the DeepSeek model series, from V3 to the latest V3.2, covering architecture, performance, and release timeline.

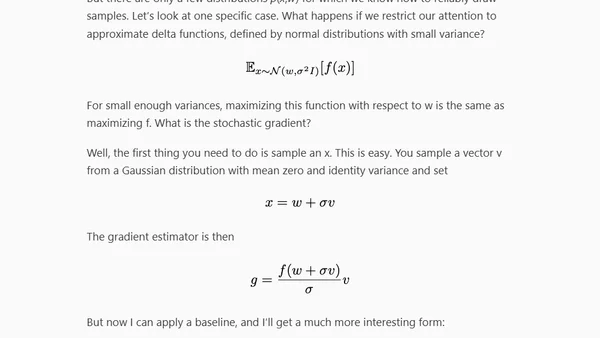

A technical lecture on applying policy gradient methods to derive optimization algorithms, focusing on the unbiased gradient estimator and its applications.

Explores Andrej Karpathy's concept of Software 2.0, where AI writes programs through objectives and gradient descent, focusing on task verifiability.

Explores the shift from RLHF to RLVR for training LLMs, focusing on using objective, verifiable rewards to improve reasoning and accuracy.

A curated list of key LLM research papers from Jan-June 2025, organized by topic including reasoning models, RL methods, and efficient training.

A curated list of key LLM research papers from the first half of 2025, organized by topic such as reasoning models and reinforcement learning.

An AI engineer explains how buying a printer for reading and annotating technical papers helps improve focus and retention.

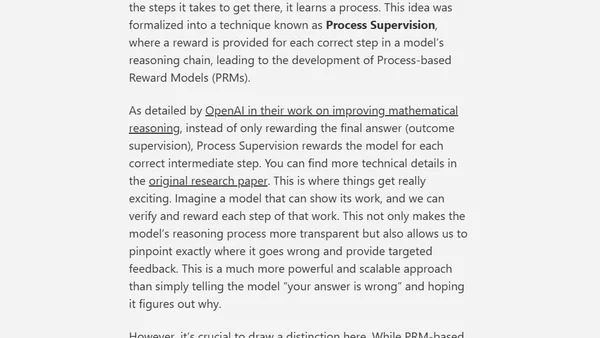

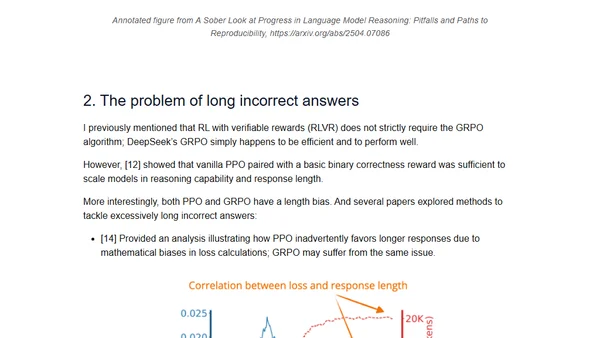

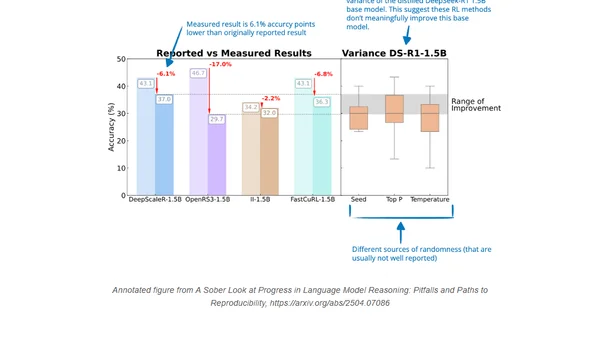

Analyzes the use of reinforcement learning to enhance reasoning capabilities in large language models (LLMs) like GPT-4.5 and o3.

Explores the latest developments in using reinforcement learning to improve reasoning capabilities in large language models (LLMs).