Deep Learning is Powerful Because It Makes Hard Things Easy - Reflections 10 Years On

A reflection on a decade-old blog post about deep learning, examining past predictions on architecture, scaling, and the field's evolution.

Ferenc Huszár is a Professor of Machine Learning at the University of Cambridge and founder of Reasonable, a deep tech startup building advanced programming LLMs. His research focuses on learning theory, reasoning, and inductive biases in deep learning.

15 articles from this blog

A reflection on a decade-old blog post about deep learning, examining past predictions on architecture, scaling, and the field's evolution.

Explores continuous-time Markov chains as a foundation for understanding discrete diffusion models in machine learning.

Explores the potential and implications of using AI to automate mathematical theorem proving, framing it as a 'tame' problem solvable by machines.

Analyzes Geoffrey Hinton's technical argument comparing biological and digital intelligence, concluding that digital AI will surpass human capabilities.

Explores autoregressive models, their relationship to joint distributions, and how they handle out-of-distribution prompts, with insights relevant to LLMs.

A reflection on past skepticism of deep learning and why a similar dismissal of Large Language Models (LLMs) might be a mistake.

Explores how Large Language Models perform implicit Bayesian inference through in-context learning, connecting exchangeable sequence models to prompt-based learning.

Explores the relationship between causal and statistical models, focusing on causal diagrams, Markov factorization, and structural equation models.

Explores how mutual information and KL divergence can be used to derive information-theoretic generalization bounds for Stochastic Gradient Descent (SGD).

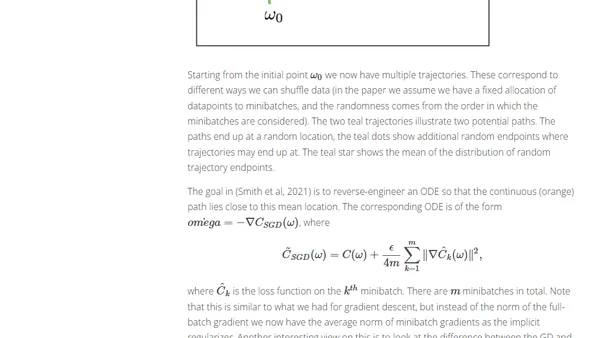

Explores how Stochastic Gradient Descent (SGD) inherently prefers certain minima, leading to better generalization in deep learning than classical theory accounts for.

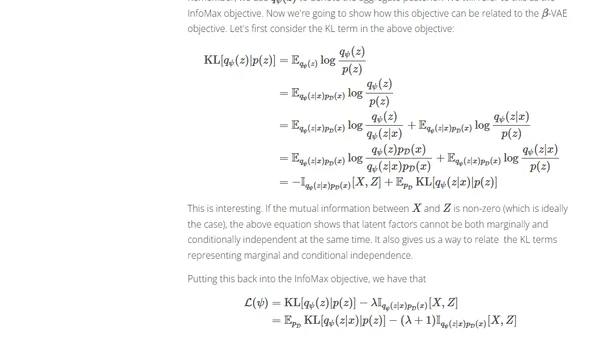

A technical exploration of the β-VAE objective from an information maximization perspective, discussing its role in learning disentangled representations.

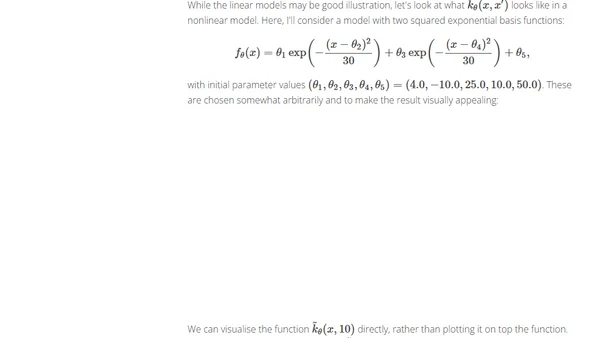

Explains the Neural Tangent Kernel concept through simple 1D regression examples to illustrate how neural networks evolve during training.

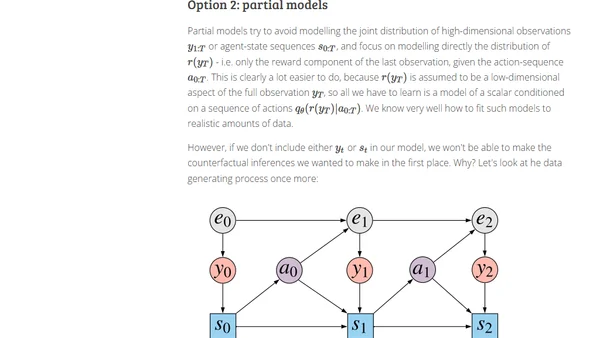

Explains the concept of causally correct partial models for reinforcement learning in POMDPs, focusing on counterfactual policy evaluation.

Explores a meta-learning method using the Implicit Function Theorem to efficiently optimize millions of hyperparameters via implicit differentiation.

A data scientist's journey from dogmatic Bayesianism to a pragmatic, 'secular' use of Bayesian tools without requiring belief in the model's literal existence.