State of AI 2026 with Sebastian Raschka, Nathan Lambert, and Lex Fridman

A 4.5-hour interview discussing the state of AI in 2026, covering LLMs, geopolitics, training, open vs. closed models, AGI timelines, and industry implications.

A 2025 AI research review covering tabular machine learning, the societal impacts of AI scale, and open-source data-science tools.

Leading AI researchers debate whether current scaling and innovations are sufficient to achieve Artificial General Intelligence (AGI).

Weekly AI news summary covering robotics, cybersecurity, AI licensing, and compute infrastructure trends.

A 2025 year-in-review of Large Language Models, covering major developments in reasoning, architecture, costs, and predictions for 2026.

A curated list of notable LLM research papers from the second half of 2025, categorized by topics like reasoning, training, and multimodal models.

A review of key paradigm shifts in Large Language Models (LLMs) in 2025, focusing on RLVR training and new conceptual models of AI intelligence.

Olmo 3 is a new fully open-source large language model from AI2, released with its training data and code, and offering interpretability for its reasoning traces.

An analysis of using LLMs like ChatGPT for academic research, highlighting their utility and inherent risks as research tools.

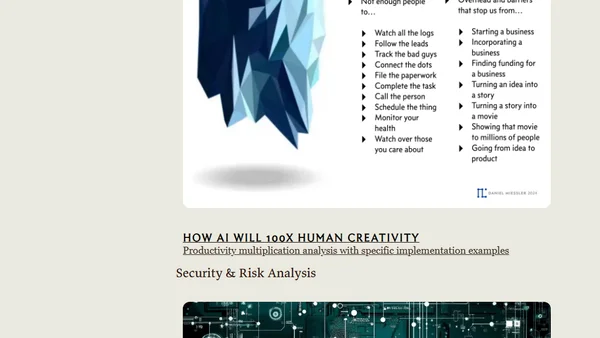

A comprehensive collection of AI research, frameworks, and guides covering technical architecture, economic impact, and societal transformation.

An AI researcher shares her journey into GPU programming and introduces WebGPU Puzzles, a browser-based tool for learning GPU fundamentals from scratch.

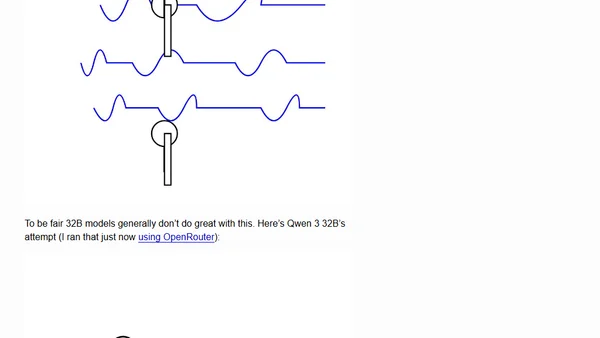

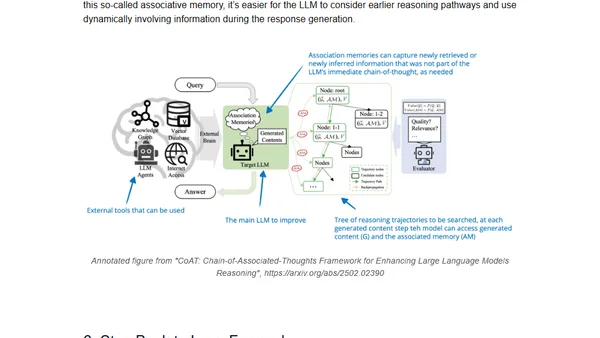

Explores recent research on improving LLM reasoning through inference-time compute scaling methods, comparing various techniques and their impact.

A Google researcher's curated review of key AI research papers from 2024, covering LLMs, new architectures, agents, and security.

A curated list of notable LLM and AI research papers published in 2024, providing a resource for those interested in the latest developments.

Argues that the 'age of data' for AI is not ending but evolving into an era of 'superhuman data' with higher quality and knowledge density.

A guide on starting and running a weekly paper club for learning about AI/ML research papers and building a technical community.

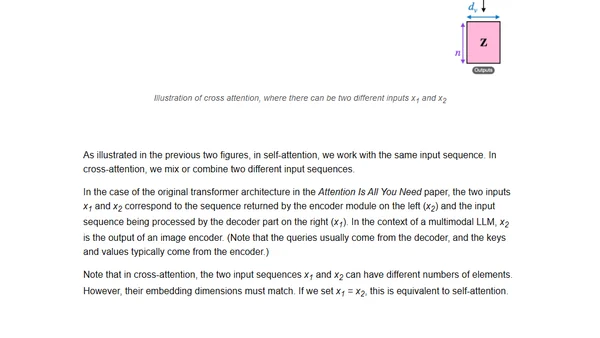

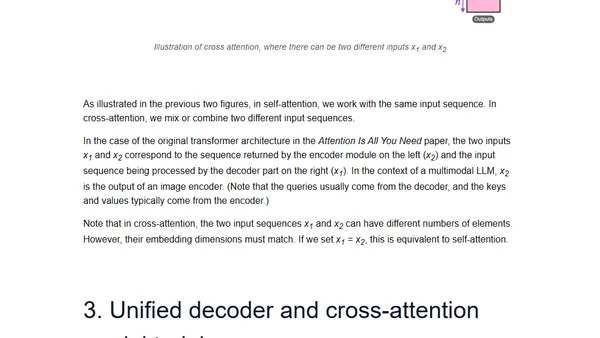

Explains how multimodal LLMs work, compares recent models like Llama 3.2, and outlines two main architectural approaches for building them.

Explains how multimodal LLMs work, reviews recent models like Llama 3.2, and compares different architectural approaches.

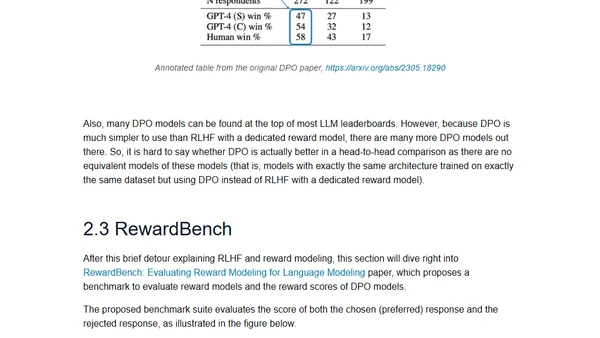

Discusses strategies for continual pretraining of LLMs and evaluating reward models for RLHF, based on recent research papers.