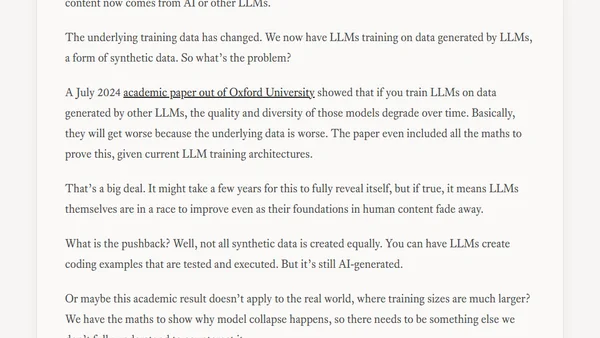

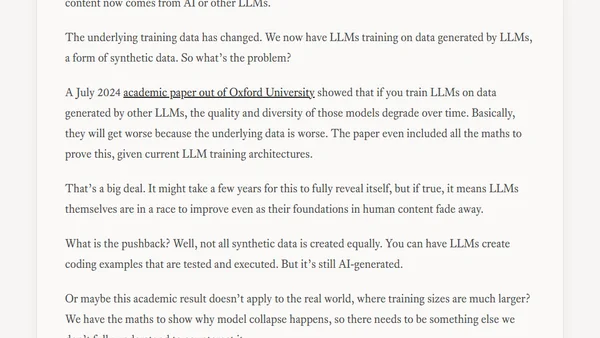

Is AI Model Collapse Inevitable?

Explores the risk of AI model collapse as LLMs increasingly train on AI-generated synthetic data, potentially degrading future model quality.

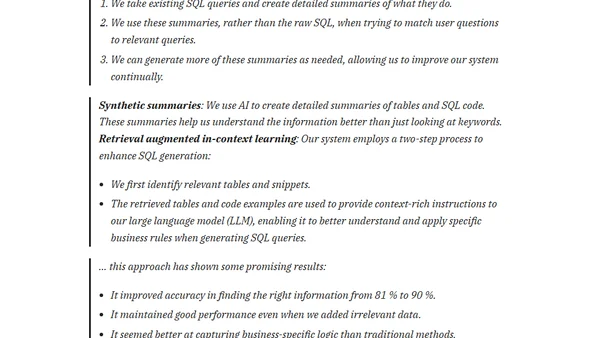

Explains a technique using AI-generated summaries of SQL queries to improve the accuracy of text-to-SQL systems with LLMs.

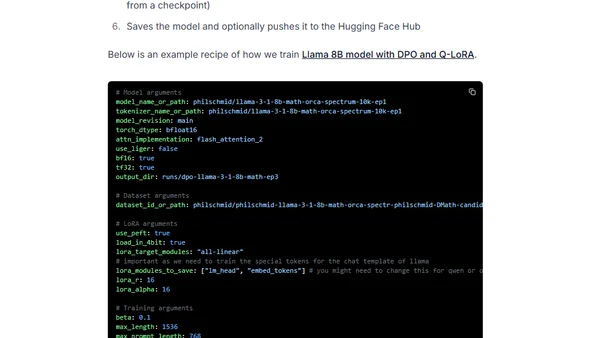

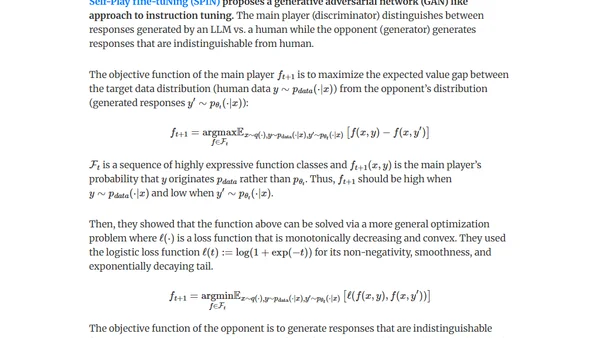

A technical guide on aligning open-source large language models (LLMs) in 2025 using Direct Preference Optimization (DPO) and synthetic data.

Final notes from a book on LLM prompt engineering, covering evaluation frameworks, offline/online testing, and LLM-as-judge techniques.

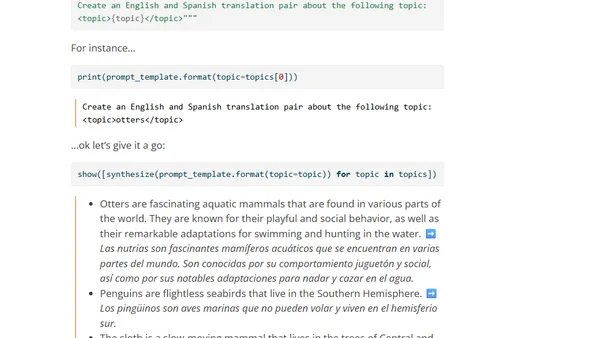

Explores the use of LLMs to generate synthetic data for training AI models, discussing challenges, an experiment with coding data, and a new library.

Explores methods for generating synthetic data (distillation and self-improvement) for LLM pretraining, instruction tuning, and preference tuning.

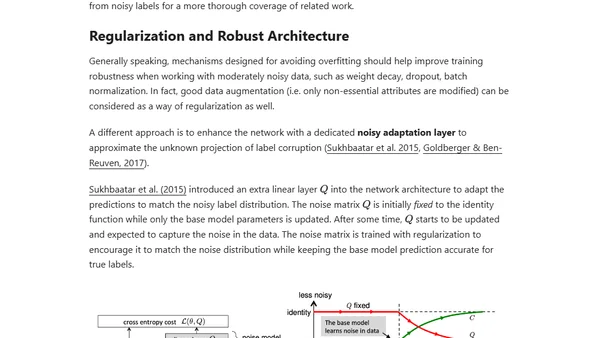

Explores synthetic data generation methods, such as data augmentation and generation with pretrained models, to overcome limited training data in machine learning.