Clawdbot's Missing Layers

The article compares AI agent security to early e-commerce, arguing we need a multi-layered security stack (supply chain, prompt defense, sandboxing) to make agents trustworthy.

A personal account of joining Anthropic as a software engineer, covering the application process, interview preparation with AI, and considerations like salary and equity.

Anthropic publicly released Claude AI's internal 'constitution', a 35k-token document outlining its core values and training principles.

Anthropic publicly releases Claude AI's internal 'constitution', a lengthy document detailing its core values and training principles.

OpenAI researchers propose 'confessions' as a method to improve AI honesty by training models to self-report misbehavior during reinforcement learning.

Explores the risk of AI model collapse as LLMs increasingly train on AI-generated synthetic data, potentially degrading future model quality.

Analysis of the intensifying conflict between US federal and state AI regulations in late 2025, including executive orders and new legislative proposals.

Explores the 'Normalization of Deviance' concept in AI safety, warning against complacency with LLM vulnerabilities like prompt injection.

Anthropic's internal 'soul document', used to train Claude Opus 4.5's personality and values, has been confirmed and partially revealed.

Analysis of surprising findings in Claude Opus 4.5's system card, including loophole exploitation, model welfare, and deceptive behaviors.

Analysis of GPT-5.1's new adaptive thinking features, model routing system, and safety benchmarks from the system card addendum.

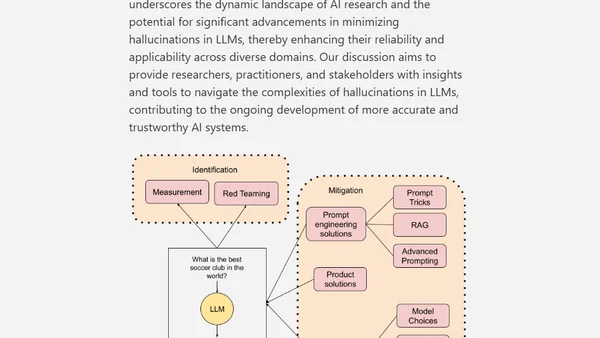

A technical paper exploring the causes, measurement, and mitigation strategies for hallucinations in Large Language Models (LLMs).

The author critiques the focus on speculative AI risks at global summits, arguing for addressing real issues like corporate power and algorithmic bias instead.

Analyzes Geoffrey Hinton's technical argument comparing biological and digital intelligence, concluding digital AI will surpass human capabilities.

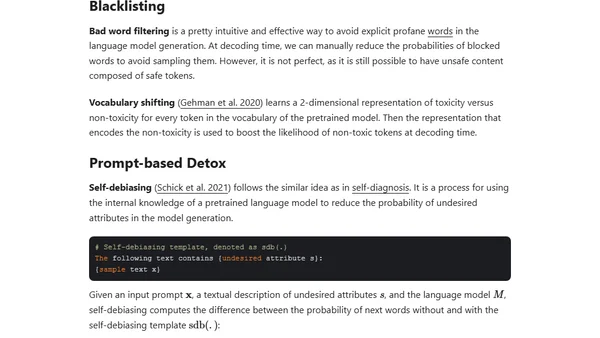

Explores the challenge of defining and reducing toxic content in large language models, discussing categorization and safety methods.

A personal review of Nick Bostrom's book on AI superintelligence, exploring its paths, dangers, and the crucial control problem.