Kimi K2.5: Visual Agentic Intelligence

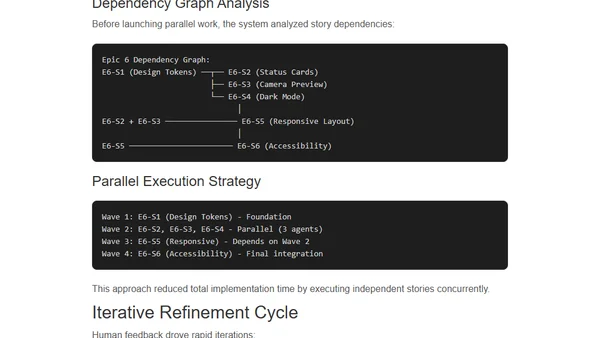

Kimi K2.5 is a new multimodal AI model with visual understanding and a self-directed agent swarm for complex, parallel task execution.


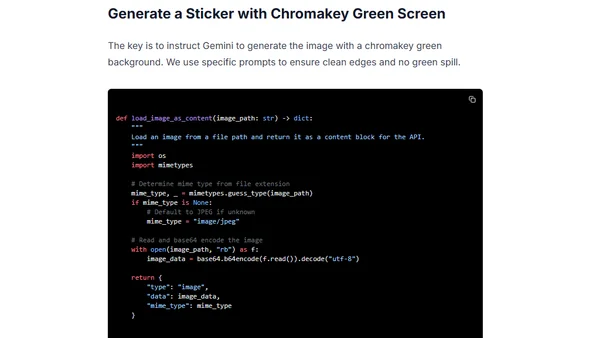

A technical guide on generating transparent PNG stickers using the Gemini API, with a chroma-key green background and HSV color detection for clean background removal.
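The HSV detection step mentioned above can be sketched in plain Python with the standard-library `colorsys` module. This is a minimal sketch, not the guide's code: the hue window and the saturation/value floors are assumed thresholds chosen for illustration, and a real pipeline would apply the same test across a whole image (for example with Pillow or OpenCV) rather than a list of pixels.

```python
import colorsys

# Assumed thresholds (hypothetical; tune per image): a hue window around pure green,
# plus saturation/value floors so dark or washed-out pixels are not keyed out.
HUE_MIN, HUE_MAX = 80 / 360, 160 / 360
SAT_MIN = 0.4
VAL_MIN = 0.3


def is_chroma_green(r: int, g: int, b: int) -> bool:
    """Return True if an 8-bit RGB pixel falls inside the green-screen HSV window."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return HUE_MIN <= h <= HUE_MAX and s >= SAT_MIN and v >= VAL_MIN


def remove_green(pixels: list[tuple[int, int, int]]) -> list[tuple[int, int, int, int]]:
    """Convert RGB pixels to RGBA, making chroma-green pixels fully transparent."""
    return [
        (r, g, b, 0) if is_chroma_green(r, g, b) else (r, g, b, 255)
        for r, g, b in pixels
    ]


print(remove_green([(0, 255, 0), (255, 0, 0)]))
# → [(0, 255, 0, 0), (255, 0, 0, 255)]
```

Working in HSV rather than RGB lets a single hue range catch the green background under varying brightness, while the saturation floor keeps white and grey sticker details opaque.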

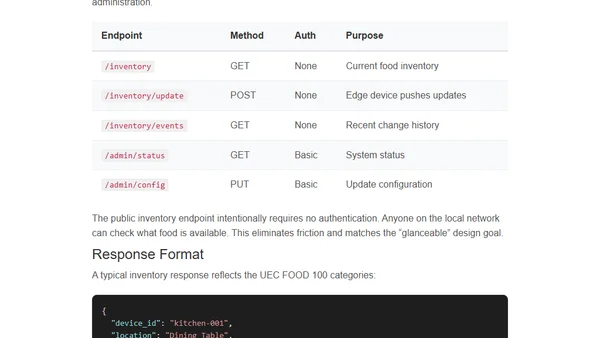

Case study on building an edge AI food monitoring system using AI-led development with the BMAD framework, achieving rapid delivery with minimal human oversight.

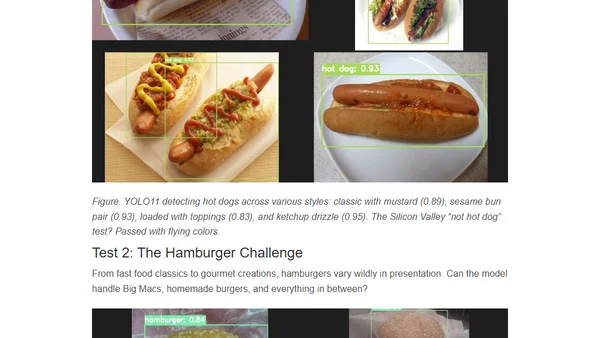

A guide to building an offline, edge AI food monitoring system using a Raspberry Pi, YOLO11, and a local-first architecture for privacy.

A technical comparison of YOLO-based food detection from 2018 to 2026, showing the evolution of deep learning tooling and ease of use.

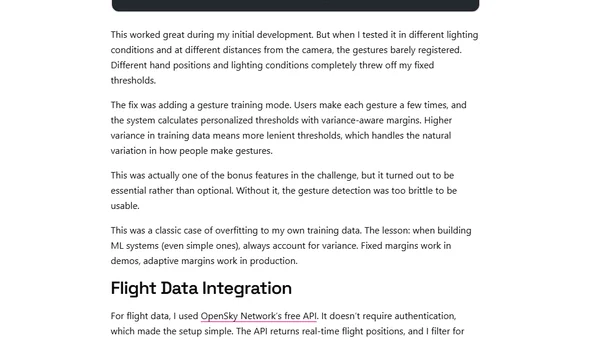

A developer builds a gesture-controlled flight tracker using MediaPipe, TanStack Start, and OpenSky API for the Advent of AI 2025 challenge.

Technical report on Qwen3-VL's video processing capabilities, which reports near-perfect accuracy on long-context needle-in-a-haystack evaluations.

An experiment testing whether AI vision models can improve SVG drawings of a pelican on a bicycle through iterative, agentic feedback loops.

An analysis of AI video generation using a specific, complex prompt to test the capabilities and limitations of models like Sora 2.

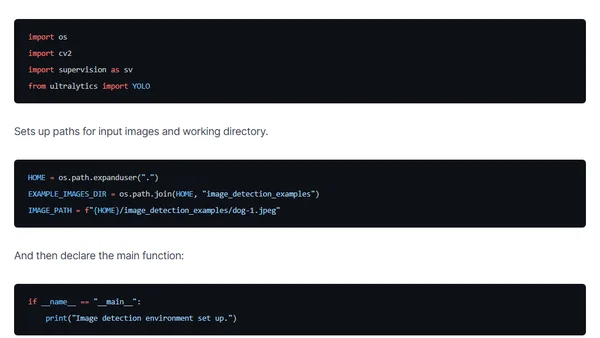

A tutorial on using Python, Ultralytics YOLO, and Supervision for computer vision tasks like object detection and image annotation.

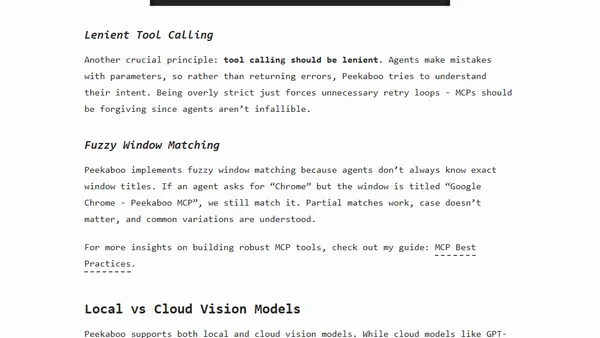

Introduces Peekaboo MCP, a macOS tool that enables AI agents to capture screenshots and perform visual question answering using local or cloud vision models.

A review of Building Regulariser, a Python package that improves AI-generated building footprints from satellite imagery by making outlines more regular and plausible.

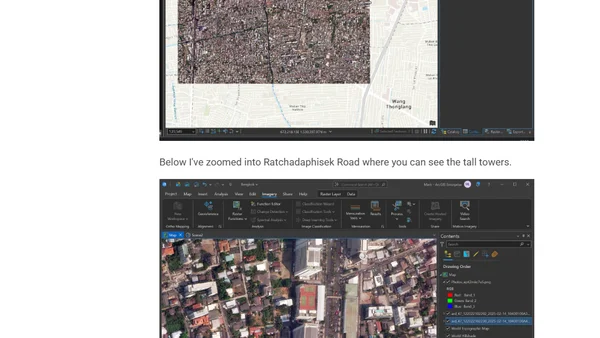

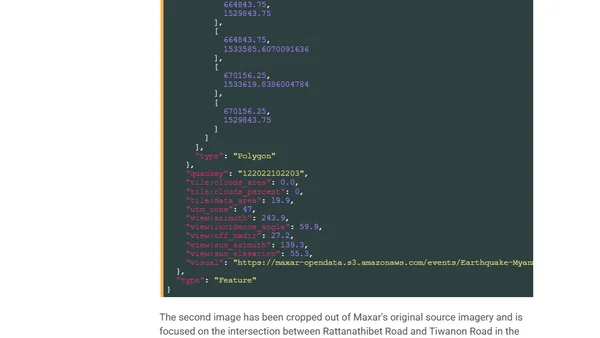

A technical guide applying the Depth Anything V2 AI model to analyze high-resolution Maxar satellite imagery of Bangkok for depth estimation.

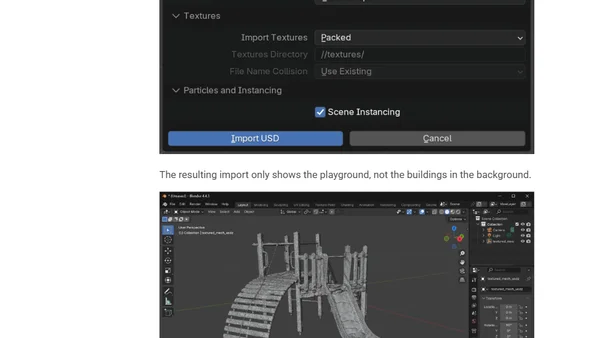

Explores 3D Gaussian Splatting, a technique for creating real-time 3D worlds from videos, comparing different generation methods and web-based tools.

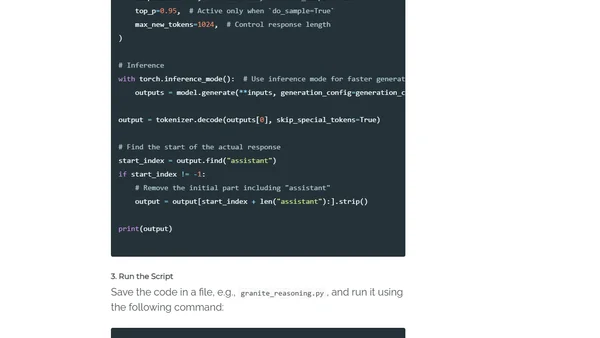

A technical guide exploring IBM's Granite 3.1 AI models, covering their reasoning and vision capabilities with a demo and local setup instructions.

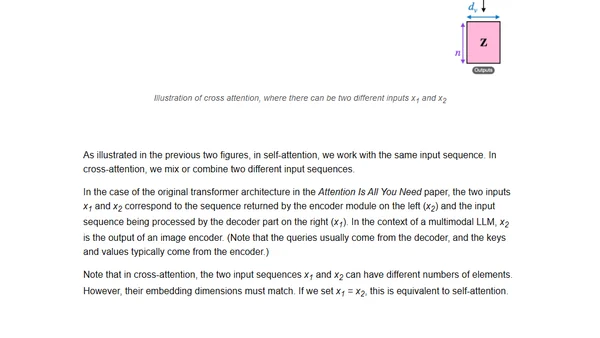

Explains how multimodal LLMs work, compares recent models like Llama 3.2, and outlines two main architectural approaches for building them.

Explains how multimodal LLMs work, reviews recent models like Llama 3.2, and compares different architectural approaches.

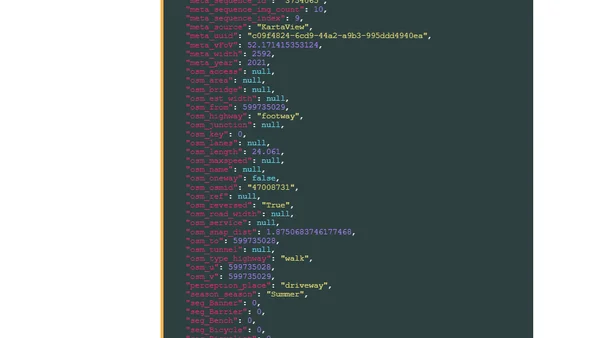

Analyzing the Global Streetscapes dataset, a massive collection of AI-labeled street view imagery, using Python, DuckDB, and a high-performance workstation.

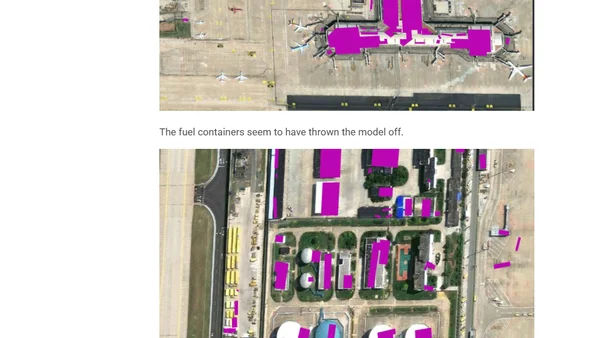

Analysis of a research paper detailing an AI model that extracted 281 million building footprints from satellite imagery across East Asia.

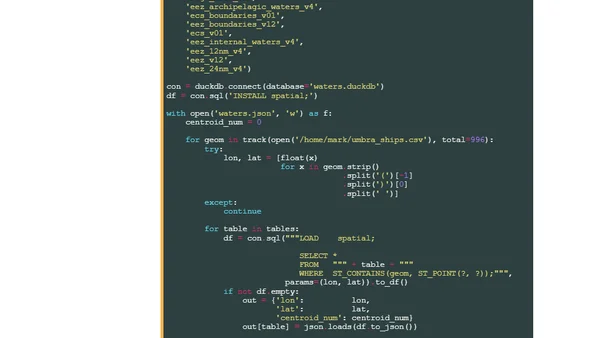

A technical guide on training a ship detection model using YOLOv5 on Umbra's high-resolution Synthetic Aperture Radar (SAR) satellite imagery.
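Training YOLOv5 requires one text label file per image, where each line holds a class index followed by the box center x, center y, width and height, all normalized to the 0 to 1 range. The sketch below converts a pixel-space corner box into such a line. It assumes the ship annotations start as `(x_min, y_min, x_max, y_max)` pixel boxes and that class `0` means "ship"; both are illustrative assumptions, not details taken from the guide or from Umbra's data.

```python
def to_yolo_line(class_id: int, box: tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space (x_min, y_min, x_max, y_max) box to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # Reject degenerate or out-of-bounds boxes instead of writing bad labels.
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} is outside a {img_w}x{img_h} image")
    # YOLO stores the box center and size as fractions of image width/height.
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# Hypothetical ship box in a 1000x1000 SAR chip.
print(to_yolo_line(0, (100, 200, 300, 400), 1000, 1000))
# → 0 0.200000 0.300000 0.200000 0.200000
```

Because the coordinates are normalized, the same label stays valid if the chip is resized for training, which matters when large SAR scenes are tiled into fixed-size inputs.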