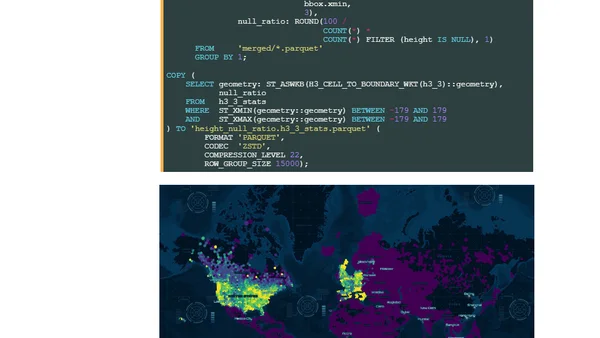

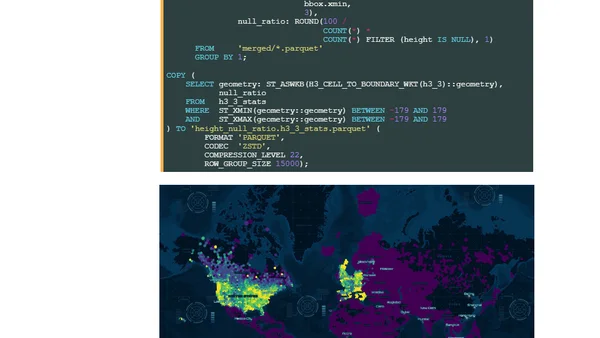

Microsoft's 2026 Global ML Building Footprints

Analysis of Microsoft's 2026 Global ML Building Footprints dataset, including technical setup and data exploration using DuckDB and QGIS.


A beginner-friendly introduction to using PySpark for big data processing with Apache Spark, covering the fundamentals.

Announces 9 new free and paid books added to the Big Book of R collection, covering data science, visualization, and package development.

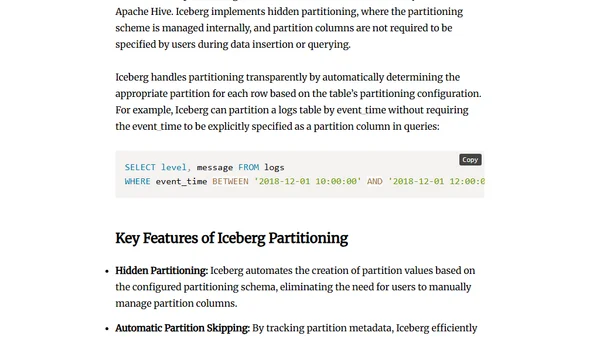

A comprehensive 2025 guide to Apache Iceberg, covering its architecture, ecosystem, and practical use for data lakehouse management.

An introduction to Apache Parquet, a columnar storage file format for efficient data processing and analytics.

Explains the hierarchical structure of Parquet files, detailing how row groups, column chunks, and pages optimize storage and query performance.
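To make that hierarchy concrete, here is a toy Python model. It is not the real Parquet encoding and not any Parquet library's API. It splits rows into row groups, stores each column of a group as a separate chunk, and records per-chunk min/max statistics that a reader can use to skip whole row groups. The names `ColumnChunk`, `build_row_groups` and `groups_to_scan`, and the row-group size, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ColumnChunk:
    values: list       # one column's values, stored contiguously within a row group
    min_value: object  # statistics a reader can check without decoding the values
    max_value: object


def build_row_groups(rows, columns, rows_per_group):
    """Split row-oriented records into row groups of column chunks (toy model)."""
    groups = []
    for start in range(0, len(rows), rows_per_group):
        batch = rows[start:start + rows_per_group]
        group = {}
        for col in columns:
            vals = [r[col] for r in batch]
            group[col] = ColumnChunk(vals, min(vals), max(vals))
        groups.append(group)
    return groups


def groups_to_scan(groups, col, lo, hi):
    """Indices of row groups whose [min, max] for `col` overlaps [lo, hi]."""
    return [i for i, g in enumerate(groups)
            if g[col].max_value >= lo and g[col].min_value <= hi]


rows = [{"id": i, "city": "A" if i < 150 else "B"} for i in range(300)]
groups = build_row_groups(rows, ["id", "city"], rows_per_group=100)
print(groups_to_scan(groups, "id", 150, 160))  # [1]: groups 0 and 2 are skipped
```

A filter on `id` touches only the `id` chunks and, thanks to the statistics, only one of the three row groups.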

Explores why Parquet is the ideal columnar file format for optimizing storage and query performance in modern data lake and lakehouse architectures.

Final guide in a series covering performance tuning and best practices for optimizing Apache Parquet files in big data workflows.

An introduction to Apache Iceberg, a table format for data lakehouses, explaining its architecture and providing learning resources.

Interview with Suresh Srinivas on his career in big data, founding Hortonworks, scaling Uber's data platform, and leading the OpenMetadata project.

Compares partitioning techniques in Apache Hive and Apache Iceberg, highlighting Iceberg's advantages for query performance and data management.

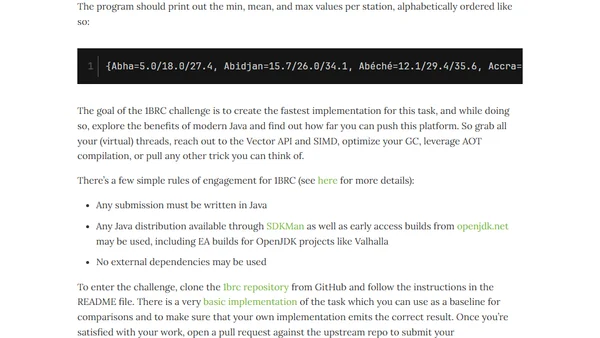

A Java programming challenge to process one billion rows of temperature data, focusing on performance optimization and modern Java features.
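The summary does not state the input format. As an assumption, the sketch below uses one `station;temperature` reading per line and produces min/mean/max per station. It is a naive, single-threaded Python baseline (shown in Python for consistency with the other examples here, while challenge entries are written in Java). It is useful only as a correctness reference and uses none of the performance techniques the challenge is about. The function name `aggregate` is hypothetical, and Python's `round` may not match the challenge's exact rounding rule in edge cases.

```python
def aggregate(lines):
    """Return {station: (min, mean, max)} for 'station;temperature' lines."""
    stats = {}  # station -> [min, max, sum, count]
    for line in lines:
        station, _, temp_text = line.rstrip("\n").partition(";")
        t = float(temp_text)
        s = stats.get(station)
        if s is None:
            stats[station] = [t, t, t, 1]
        else:
            s[0] = min(s[0], t)
            s[1] = max(s[1], t)
            s[2] += t
            s[3] += 1
    # Output sorted by station name, mean rounded to one decimal place.
    return {name: (s[0], round(s[2] / s[3], 1), s[1])
            for name, s in sorted(stats.items())}


lines = ["Hamburg;12.0", "Bulawayo;8.9", "Hamburg;34.2", "Palembang;38.8"]
print(aggregate(lines))
```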

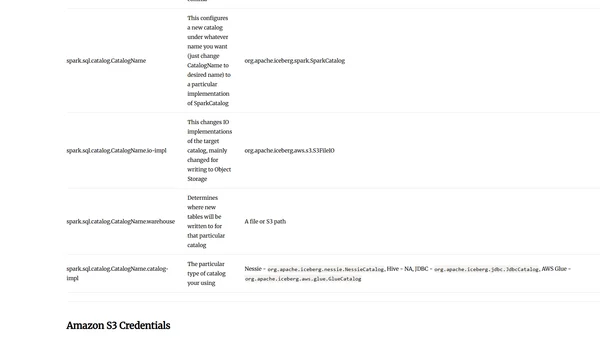

A guide to configuring Apache Spark for use with the Apache Iceberg table format, covering packages, flags, and programmatic setup.
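As a rough illustration of the programmatic route, this sketch builds the standard Iceberg-on-Spark settings for a Hadoop-type catalog as a plain dictionary. The config keys and class names are Iceberg's documented ones. The helper `iceberg_spark_conf`, the catalog name `local`, the warehouse path and the version numbers are example values only, so check which runtime artifact matches your Spark and Scala versions.

```python
def iceberg_spark_conf(catalog, warehouse, spark_version, scala_version, iceberg_version):
    """Build Spark config entries for a Hadoop-type Iceberg catalog (sketch)."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        # Iceberg runtime jar; its artifact name encodes the Spark and Scala versions.
        "spark.jars.packages": (
            "org.apache.iceberg:iceberg-spark-runtime-"
            f"{spark_version}_{scala_version}:{iceberg_version}"
        ),
        # Enables Iceberg SQL extensions (CALL procedures, partition-evolution DDL).
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        # Registers an Iceberg catalog under the chosen name.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "hadoop",
        f"{prefix}.warehouse": warehouse,
    }


# Example values only (hypothetical catalog name, path and versions).
conf = iceberg_spark_conf("local", "/tmp/warehouse", "3.5", "2.12", "1.5.0")

# With PySpark installed, the entries could be applied like this:
# from pyspark.sql import SparkSession
# builder = SparkSession.builder.appName("iceberg-demo")
# for key, value in conf.items():
#     builder = builder.config(key, value)
# spark = builder.getOrCreate()
```

The same key/value pairs can instead be passed as `--packages` and `--conf` flags on the command line.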

Argues that raw data is overvalued unless it is given context and converted into meaningful information and knowledge.

Explains the APPROX_COUNT_DISTINCT function for faster, memory-efficient distinct counts in SQL, comparing it to exact COUNT(DISTINCT).
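`APPROX_COUNT_DISTINCT` is commonly implemented with HyperLogLog or a variant of it, though the engine in the article may use something else. The sketch below is a minimal plain HyperLogLog. Memory stays fixed at `2**p` small registers no matmatter how many rows are scanned, which is where the speed and memory savings over exact `COUNT(DISTINCT)` come from. The SHA-1-based hash and the function name `approx_count_distinct` are illustrative choices, not any database's implementation.

```python
import hashlib
import math


def _hash64(value):
    # 64-bit hash taken from SHA-1; real engines use faster non-cryptographic hashes.
    return int.from_bytes(hashlib.sha1(str(value).encode()).digest()[:8], "big")


def approx_count_distinct(values, p=10):
    """HyperLogLog estimate of the number of distinct values (sketch, p >= 7)."""
    m = 1 << p                     # number of registers
    registers = [0] * m
    width = 64 - p                 # bits left after choosing a register
    for v in values:
        h = _hash64(v)
        idx = h >> width           # top p bits select a register
        rest = h & ((1 << width) - 1)
        # 1-based position of the leftmost 1-bit in `rest` (width + 1 if all zero).
        rank = width - rest.bit_length() + 1
        registers[idx] = max(registers[idx], rank)
    alpha = 0.7213 / (1 + 1.079 / m)   # bias constant, valid for m >= 128
    estimate = alpha * m * m / sum(2.0 ** -r for r in registers)
    zeros = registers.count(0)
    if estimate <= 2.5 * m and zeros:
        # Small-range correction: use linear counting on the empty registers.
        estimate = m * math.log(m / zeros)
    return round(estimate)


values = list(range(10_000))
print(approx_count_distinct(values))  # close to 10000 (typical error about 3%)
```

Because registers only keep maxima, feeding in duplicates never changes the estimate.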

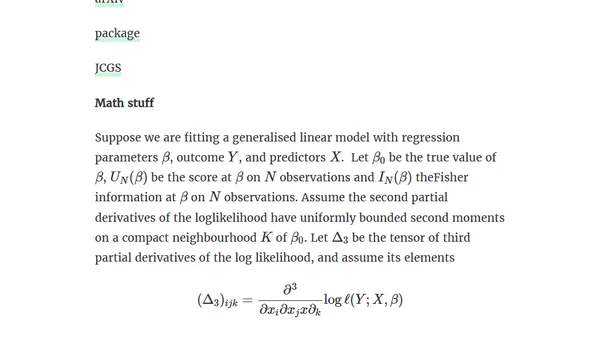

A method for fitting generalized linear models faster on large datasets, using a single database query and one Newton-Raphson iteration.
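One way to read this, sketched here as an assumption rather than the article's exact method: for logistic regression, a Newton-Raphson step from β = 0 is β₁ = (XᵀWX)⁻¹Xᵀ(y − p). At β = 0 every fitted probability is ½ and every weight is ¼, so the step reduces to β₁ = 4(XᵀX)⁻¹Xᵀ(y − ½). The required sums come back from one aggregate query. The sketch uses Python's built-in `sqlite3`, a hypothetical table `obs(x, y)`, and a single feature plus intercept. One step gives an approximation, not the fully converged fit.

```python
import sqlite3


def one_step_logistic(conn):
    """One Newton-Raphson step from beta = 0 for logit(P(y=1)) = b0 + b1*x."""
    # A single pass over the table returns every sum the step needs.
    n, sx, sxx, sr, sxr = conn.execute(
        "SELECT COUNT(*), SUM(x), SUM(x * x), SUM(y - 0.5), SUM(x * (y - 0.5)) FROM obs"
    ).fetchone()
    # Solve the 2x2 system (X^T X) d = X^T (y - 1/2) with Cramer's rule.
    det = n * sxx - sx * sx
    d0 = (sxx * sr - sx * sxr) / det
    d1 = (n * sxr - sx * sr) / det
    # Weights p(1 - p) are all 1/4 at beta = 0, hence the factor 4.
    return 4 * d0, 4 * d1


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE obs (x REAL, y INTEGER)")
conn.executemany("INSERT INTO obs VALUES (?, ?)", [(0, 0), (1, 0), (2, 1), (3, 1)])
print(one_step_logistic(conn))  # approximately (-2.4, 1.6)
```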

A personal reflection on the trade-offs between convenience and privacy in an era of AI, IoT, and pervasive data collection.

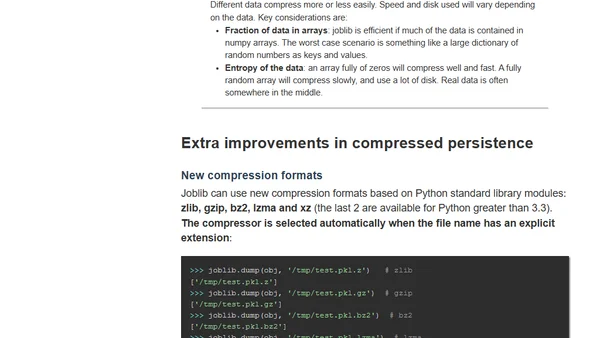

Explains improvements in joblib's compressed persistence for Python, focusing on reduced memory usage and single-file storage for large NumPy arrays.

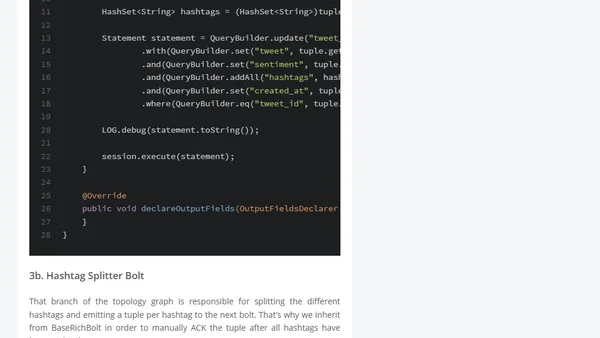

Technical guide on building a real-time Twitter sentiment analysis system using Apache Kafka and Storm.

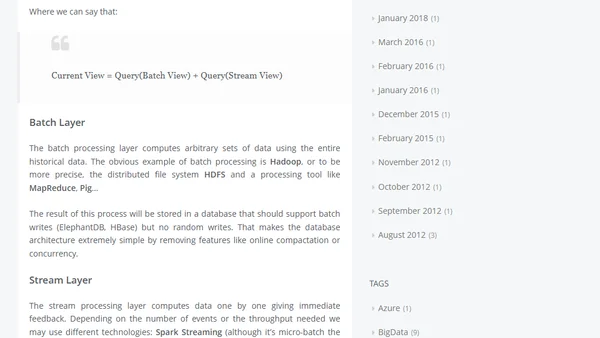

Explains the Lambda Architecture for Big Data, which combines batch processing (Hadoop) with real-time stream processing (Spark, Storm) to handle large datasets.
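A toy in-memory sketch of how the layers fit together. Python lists stand in for the Hadoop batch store and the Spark or Storm stream, and events are simply counted per key. The function names and event shape are hypothetical.

```python
from collections import Counter


def batch_view(master_events):
    """Batch layer: recompute counts from the full, immutable master dataset."""
    return Counter(e["key"] for e in master_events)


def speed_view(recent_events):
    """Speed layer: incremental counts for events not yet covered by a batch run."""
    view = Counter()
    for e in recent_events:
        view[e["key"]] += 1
    return view


def query(key, batch, speed):
    """Serving layer: merge the precomputed batch view with the real-time view."""
    return batch[key] + speed[key]  # Counter returns 0 for missing keys


master = [{"key": "a"}, {"key": "b"}, {"key": "a"}]
recent = [{"key": "a"}]
batch, speed = batch_view(master), speed_view(recent)
print(query("a", batch, speed))  # 3
```

When the next batch run absorbs the recent events, the corresponding part of the speed view is discarded, so the slow, recomputed batch results always remain the source of truth.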