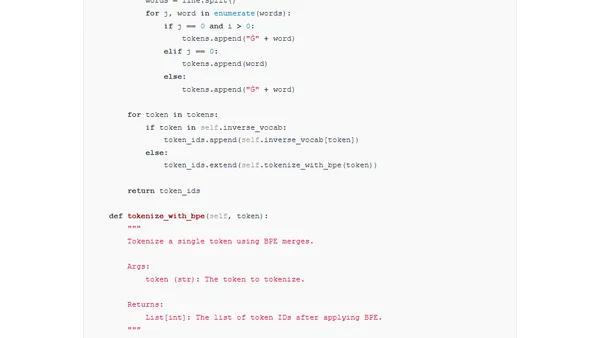

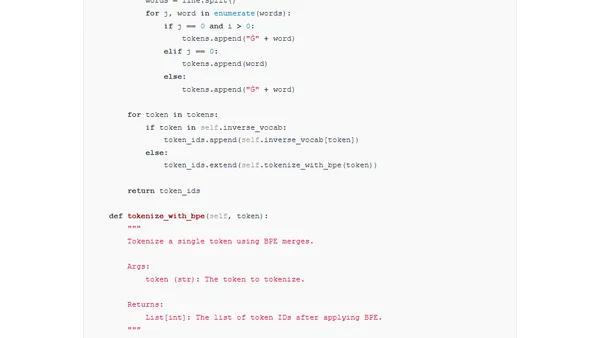

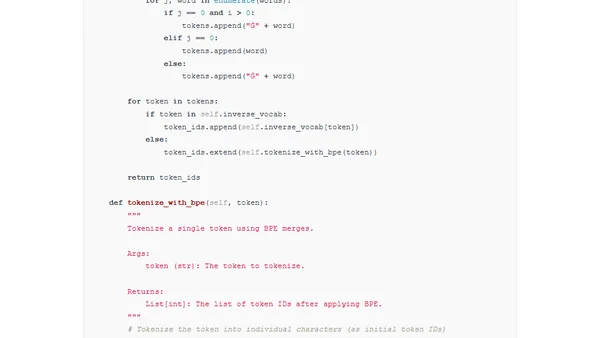

Implementing A Byte Pair Encoding (BPE) Tokenizer From Scratch

A step-by-step guide to implementing the Byte Pair Encoding (BPE) tokenizer from scratch, used in models like GPT and Llama.

A step-by-step educational guide to building a Byte Pair Encoding (BPE) tokenizer from scratch, as used in models like GPT and Llama.

Introducing ModernBERT, a new family of state-of-the-art encoder models designed as a faster, more efficient replacement for the widely-used BERT.

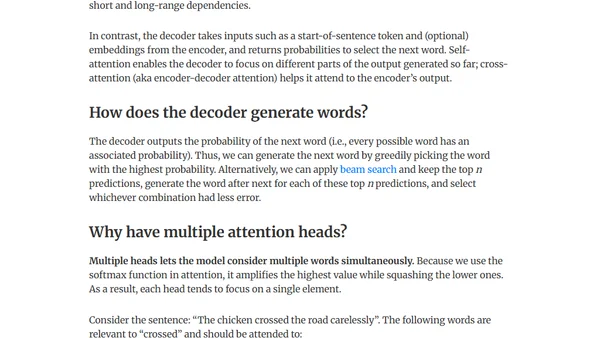

Explains the intuition behind the Attention mechanism and Transformer architecture, focusing on solving issues in machine translation and language modeling.

Explores user interfaces for LLMs that minimize text chat, using clicks and user context for more intuitive interactions.

Explores using GPT-3 text embeddings and a simple classifier to predict the winner of a headline A/B test, potentially replacing traditional testing.

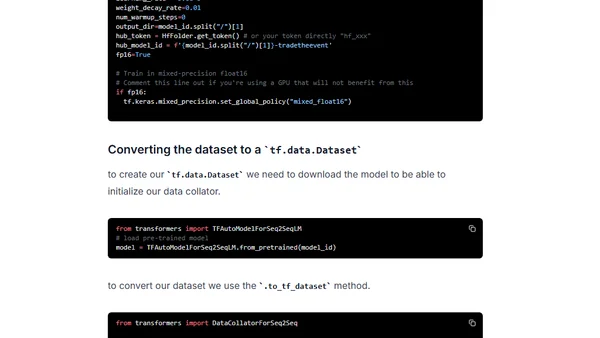

A tutorial on fine-tuning a Hugging Face Transformer model for financial text summarization using Keras and Amazon SageMaker.

A workshop series on using Hugging Face Transformers with Amazon SageMaker for enterprise-scale NLP, covering training, deployment, and MLOps.

A guide to attending AWS re:Invent 2021 machine learning and NLP sessions remotely, featuring keynotes and top session recommendations.

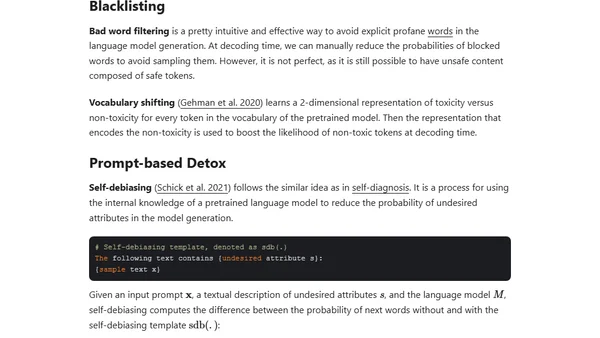

Explores the challenge of defining and reducing toxic content in large language models, discussing categorization and safety methods.

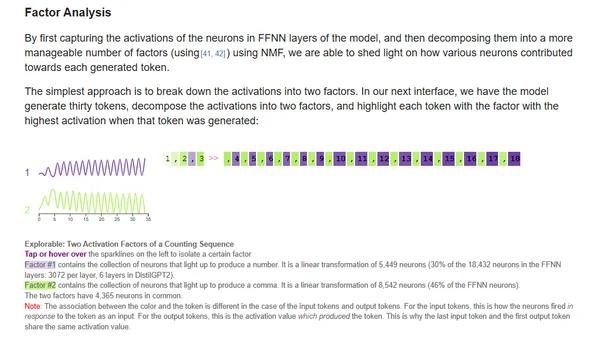

Explores interactive methods for interpreting transformer language models, focusing on input saliency and neuron activation analysis.

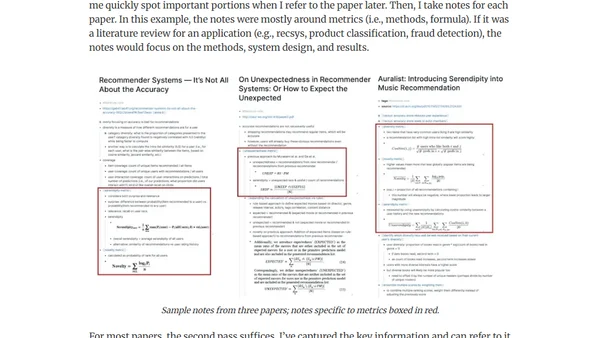

Explains how regularly reading academic papers improves data science skills, offering practical advice on selection and application.

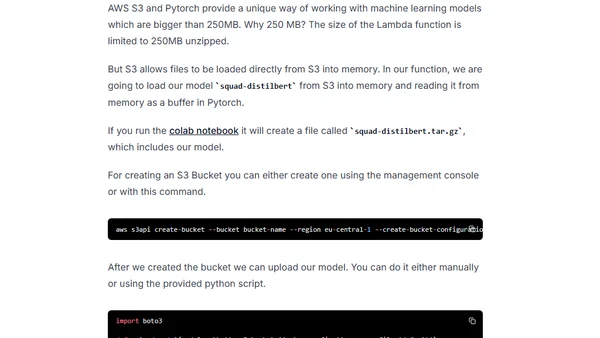

A tutorial on deploying a BERT question-answering model in a serverless environment using Hugging Face Transformers and AWS Lambda.

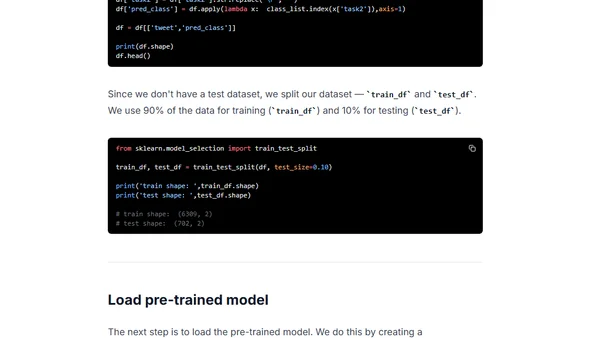

A tutorial on building a non-English text classification model using BERT and Simple Transformers, demonstrated with German tweets.

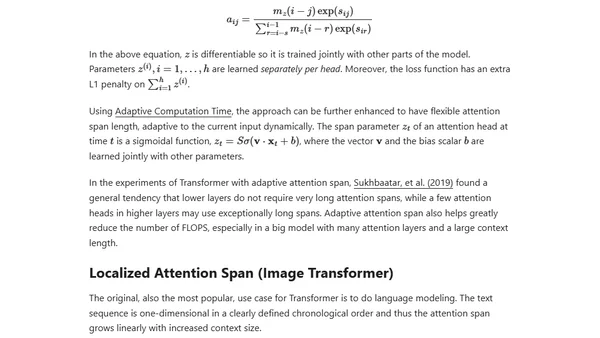

An updated overview of the Transformer model family, covering improvements for longer attention spans, efficiency, and new architectures since 2020.

A curated list of open-source and free tools for data annotation across computer vision, NLP, audio, and other domains, including image and video labeling.

A tutorial on using Hugging Face's API to access and fine-tune OpenAI's GPT-2 model for text generation.

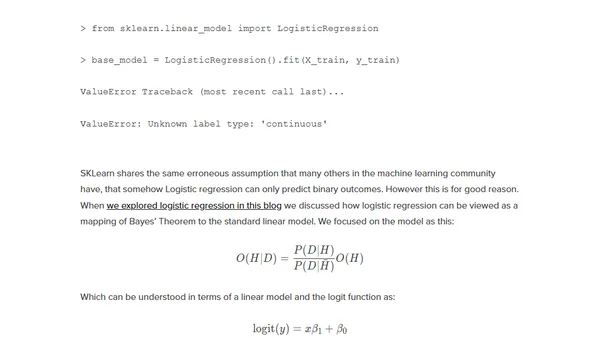

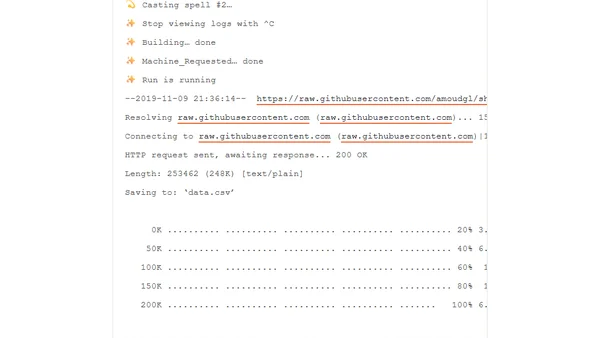

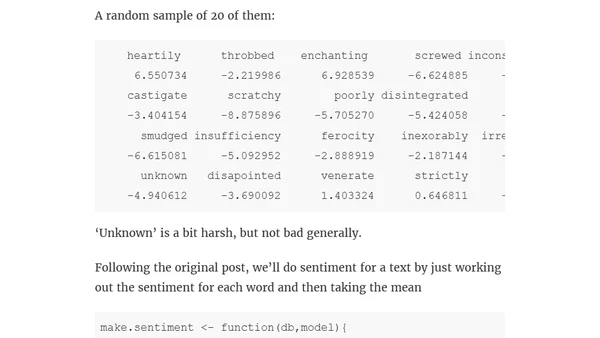

A tutorial that replicates, in R, a Python experiment on creating a biased AI sentiment classifier, using GloVe embeddings and glmnet for logistic regression.

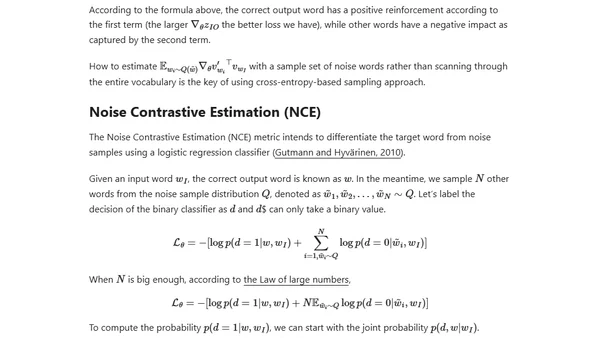

Explains word embeddings, comparing count-based and context-based methods like skip-gram for converting words into dense numeric vectors.

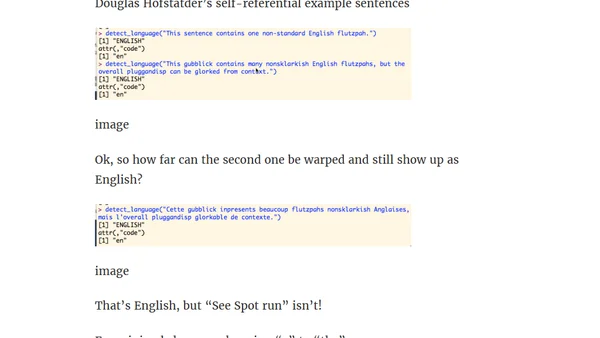

Tests the limits of an R language detection package by finding English sentences it misclassifies, and explores algorithmic decision-making.