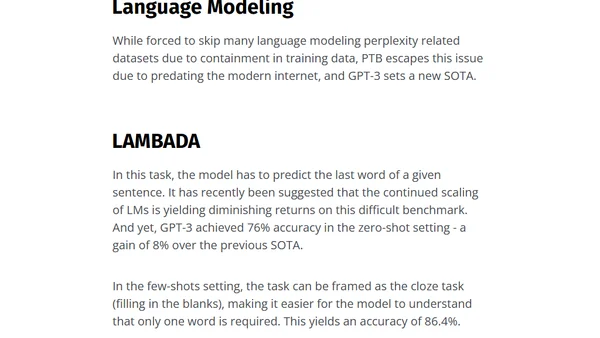

GPT-3, a Giant Step for Deep Learning and NLP

An analysis of OpenAI's GPT-3 language model, focusing on its 175B parameters, in-context learning capabilities, and performance on NLP tasks.

Yoel Zeldes is an algorithm engineer at AI21 Labs with a background in computer science from Hebrew University. He specializes in machine learning, NLP, computer vision, and distributed computing, focusing on data-driven solutions and clean, elegant code.
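In-context learning, as named in the summary, means giving the model a task description and possibly a few solved examples directly in its input text. The model then completes a new instance without any gradient updates. The sketch below only assembles such a prompt as a string and does not call any model. The function name `build_few_shot_prompt` and the ` => ` separator are illustrative assumptions, not an OpenAI API. The English-to-French demonstrations follow the style of the illustration in the GPT-3 paper.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    task_description: str,
    demonstrations: List[Tuple[str, str]],
    query: str,
    separator: str = " => ",
) -> str:
    """Assemble a few-shot prompt (hypothetical helper, for illustration only).

    Layout: an instruction line, then one "source => target" line per solved
    example, then the query followed by the separator with no answer. A
    language model would be asked to continue the text after the final
    separator; its weights are never updated.
    """
    lines = [task_description]
    for source, target in demonstrations:
        lines.append(f"{source}{separator}{target}")
    # The query gets the separator (without trailing space) but no answer.
    lines.append(f"{query}{separator.rstrip()}")
    return "\n".join(lines)


demos = [
    ("sea otter", "loutre de mer"),
    ("peppermint", "menthe poivrée"),
    ("plush giraffe", "girafe peluche"),
]

# Few-shot: three demonstrations before the query.
prompt = build_few_shot_prompt("Translate English to French:", demos, "cheese")
print(prompt)

# Zero-shot: the same task with no demonstrations at all.
print(build_few_shot_prompt("Translate English to French:", [], "cheese"))
```

The only difference between zero-, one- and few-shot use is the number of demonstrations placed in the prompt. Everything the model "learns" about the task must come from that text.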

27 articles from this blog

A tutorial on using HuggingFace's API to access and fine-tune OpenAI's GPT-2 model for text generation.

Explores an unsupervised approach combining Mixture of Experts (MoE) with Variational Autoencoders (VAE) for conditional data generation without labels.

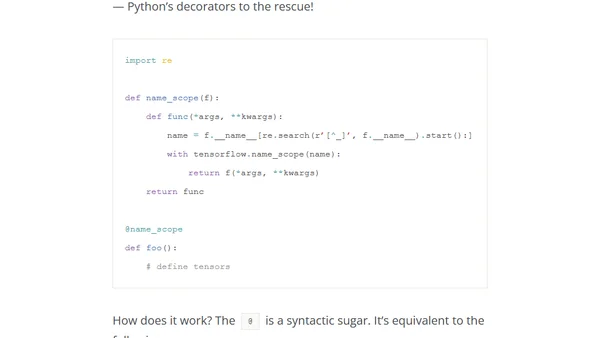

A guide to using Python decorators to create TensorFlow name scopes automatically, improving code organization and TensorBoard visualization.

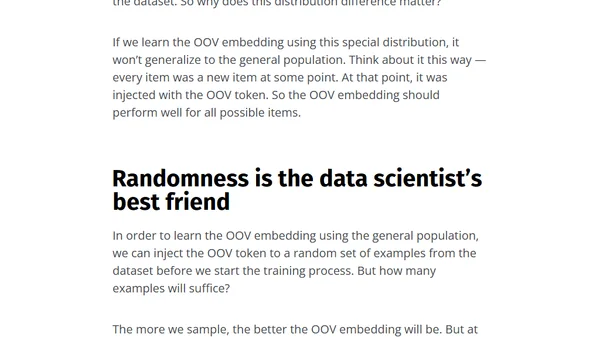

Explores how to handle out-of-vocabulary (OOV) values in machine learning, using a deep learning model trained on dynamic recommender-system data as an example.

Explains how Graph Neural Networks and node2vec use graph structure and random walks to generate embeddings for machine learning tasks.

A guide on building a personal brand as a data scientist, covering path selection, blogging, and sharing knowledge within the community.

A data scientist details how a flawed train-test split method introduced bias when adding image thumbnails to a content recommendation model.

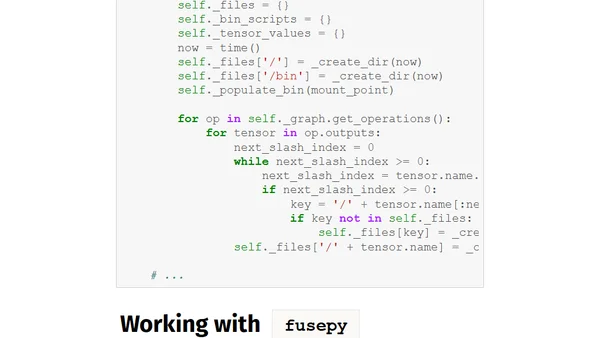

Introducing TFFS, a FUSE-based filesystem to interactively explore TensorFlow graphs and tensors using familiar Unix commands.

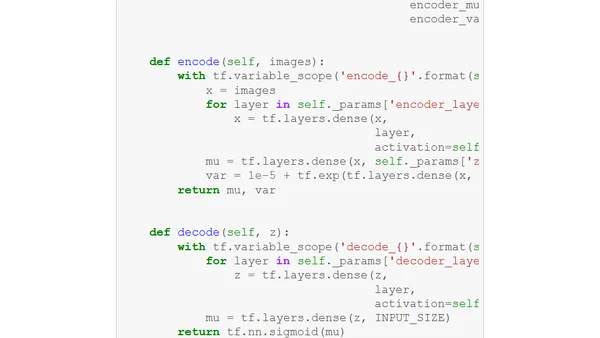

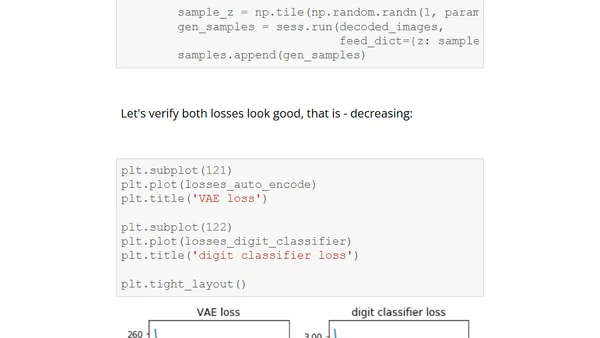

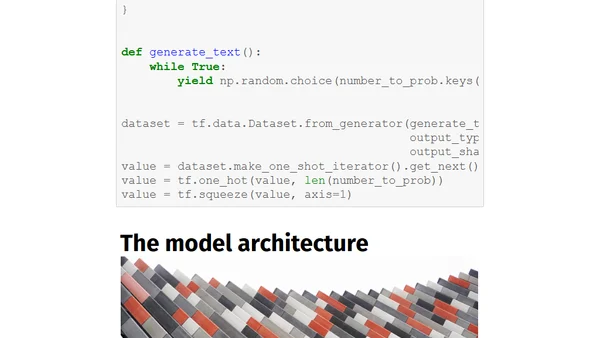

A detailed technical tutorial on implementing a Variational Autoencoder (VAE) with TensorFlow, including code and a variant conditioned on the digit label.

A technical case study on optimizing a slow multi-modal ML model for production using caching, async processing, and a microservices architecture.

A team wins a tech hackathon by creating an AR app that uses AI and computer vision to recommend web content based on what a phone camera sees.

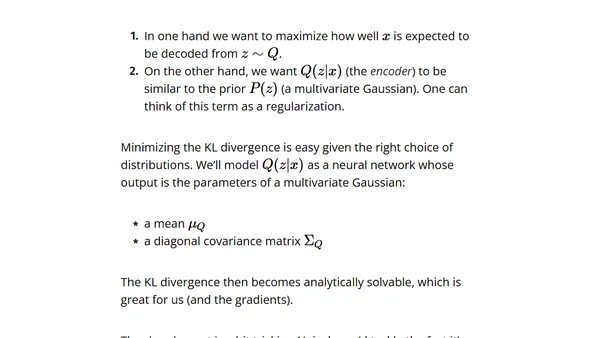

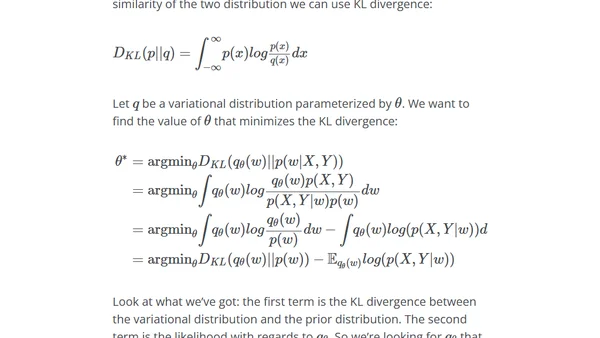

A technical explanation of Variational Autoencoders (VAEs), covering their theory, latent space, and how they generate new data.

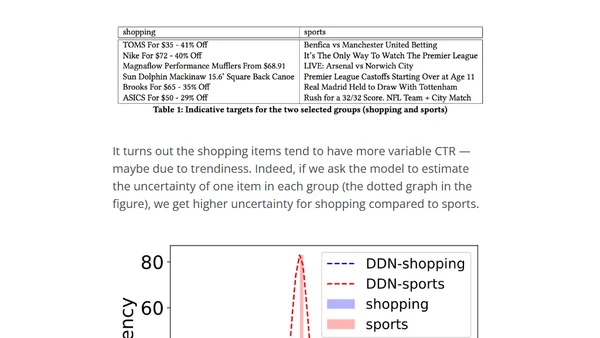

Explains how Taboola built a unified neural network model to predict CTR and estimate prediction uncertainty for recommender systems.

Explores how uncertainty modeling in recommender systems helps balance exploring new items versus exploiting known high-performing ones.

Explores Bayesian methods for quantifying uncertainty in deep neural networks, moving beyond single-point weight estimates.

Explores how uncertainty estimation in deep neural networks can be used for model interpretation, debugging, and improving reliability in high-risk applications.

Explains the Gumbel-Softmax trick, a method for training neural networks that need to sample from discrete distributions: it replaces the non-differentiable sampling step with a differentiable relaxation so that gradients can flow through it.

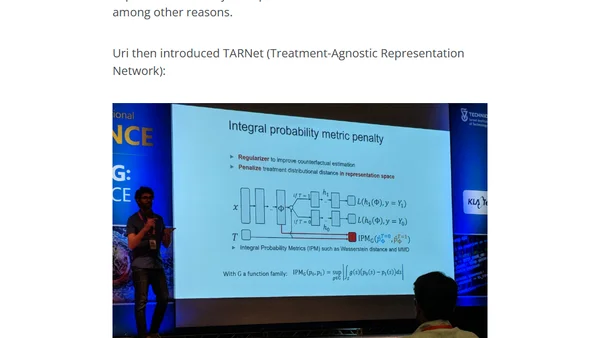

Highlights from a deep learning conference covering optimization algorithms' impact on generalization and human-in-the-loop efficiency.

A practical guide to implementing a hyperparameter tuning script for machine learning models, based on real-world experience from Taboola's engineering team.
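The Gumbel-Softmax trick listed above can be sketched in plain Python as a forward pass only. The function names, the example probabilities and the use of the standard-library `random` module instead of a tensor library are all assumptions made for illustration. The sketch computes no gradients. What it shows is the structure: adding Gumbel noise to the logits and taking the argmax gives an exact categorical sample (the Gumbel-Max trick). Replacing that argmax with a temperature-scaled softmax gives a smooth function of the logits. In an autodiff framework, that smooth function is what lets gradients reach the logits.

```python
import math
import random
from typing import List


def sample_gumbel(rng: random.Random, eps: float = 1e-20) -> float:
    """Draw one standard Gumbel(0, 1) sample as -log(-log(U)), U ~ Uniform(0, 1)."""
    u = min(max(rng.random(), eps), 1.0 - 1e-12)  # keep U strictly inside (0, 1)
    return -math.log(-math.log(u))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def gumbel_softmax(logits: List[float], temperature: float, gumbels: List[float]) -> List[float]:
    """Relaxed one-hot sample: softmax((logits + gumbels) / temperature)."""
    return softmax([(l + g) / temperature for l, g in zip(logits, gumbels)])


def argmax(xs: List[float]) -> int:
    return max(range(len(xs)), key=lambda i: xs[i])


rng = random.Random(0)
probs = [0.1, 0.6, 0.3]  # hypothetical categorical distribution
logits = [math.log(p) for p in probs]
noise = [sample_gumbel(rng) for _ in logits]

# Gumbel-Max: the argmax of the perturbed logits is an exact sample from `probs`.
hard_index = argmax([l + g for l, g in zip(logits, noise)])

# Gumbel-Softmax: same noise, smooth output; lower temperature -> closer to one-hot.
soft_samples = {tau: gumbel_softmax(logits, tau, noise) for tau in (5.0, 1.0, 0.1)}
for tau, soft in soft_samples.items():
    print(tau, [round(p, 3) for p in soft])
```

For any positive temperature, the relaxed sample's largest entry is at `hard_index`. As the temperature falls, that entry approaches 1. The temperature therefore trades off how close the sample is to a discrete one-hot vector against how smooth (and low-variance) its gradients are.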