Generative AI and AI Product Moats

Explores the strategic importance of Generative AI and how to build sustainable competitive advantages (moats) in AI products.

Jay Alammar is an educator and author known for visualizing machine learning and LLM concepts through clear, illustrated guides. He teaches thousands of learners and co-authored the book Hands-On Large Language Models.

9 articles from this blog


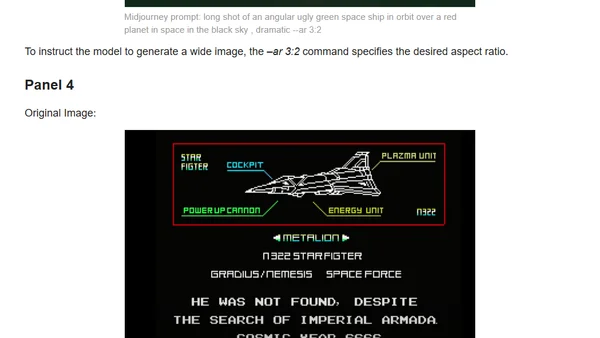

Uses AI image generation tools such as Stable Diffusion and DALL-E to recreate and enhance video game graphics from the 1980s.

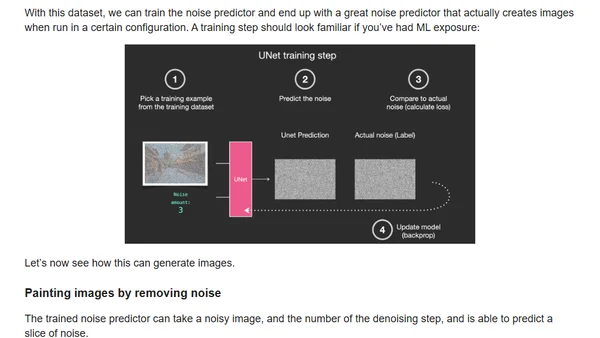

A gentle introduction to how Stable Diffusion works, explaining its components and the process of generating images from text.

An engineer shares insights and tutorials on applying Cohere's large language models to real-world tasks such as prompt engineering and semantic search.

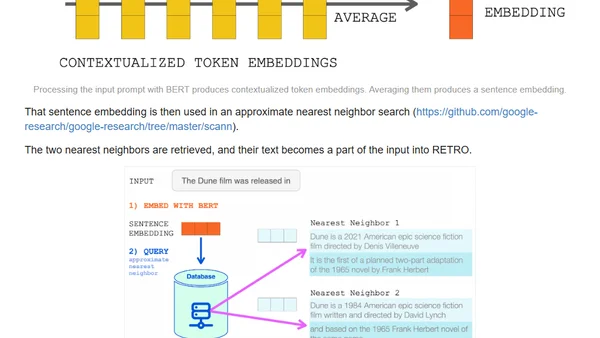

Explains how retrieval-augmented language models such as RETRO reach performance comparable to GPT-3 with far fewer parameters by querying an external knowledge database.

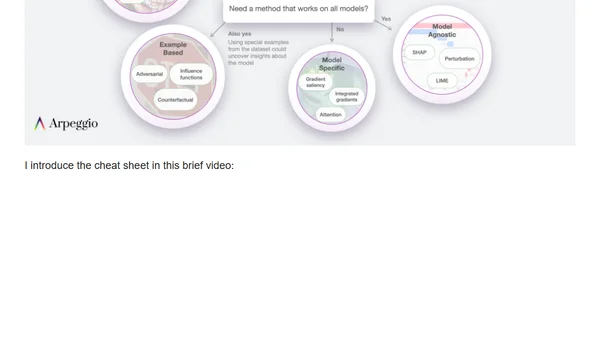

A high-level guide to Explainable AI (XAI): the tools and methods used to understand AI/ML models and their predictions.

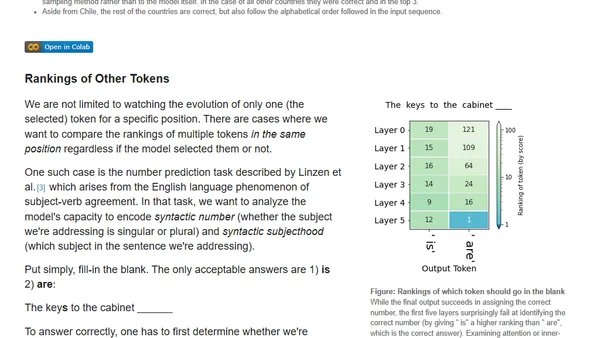

Explores how visualizing the hidden states of Transformer language models can reveal their internal decision-making process during text generation.

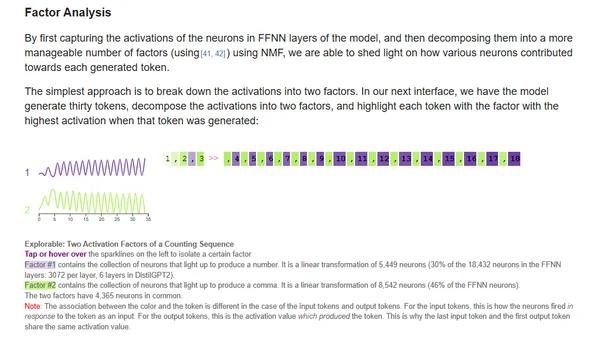

Explores interactive methods for interpreting transformer language models, focusing on input saliency and neuron activation analysis.

A visual guide explaining how GPT-3 is trained and generates text, breaking down its transformer architecture and massive scale.