Reducing Toxicity in Language Models

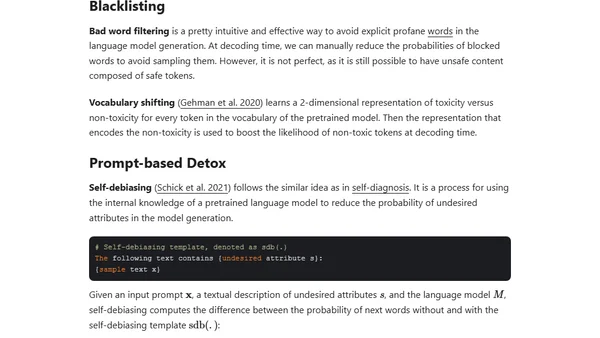

This technical article examines toxicity, bias, and unsafe content in large pretrained language models. It discusses why toxic language is hard to define and categorize, reviews existing taxonomies such as the hierarchical annotation scheme of the OLID (Offensive Language Identification Dataset), and introduces methods for mitigating these problems so that NLP models can be deployed more safely in real-world settings.
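As an illustration of what a hierarchical toxicity taxonomy looks like in practice, the sketch below encodes OLID's three annotation levels as described in the OLID paper (Zampieri et al., 2019). Level A separates offensive (OFF) from not offensive (NOT) posts, level B splits offensive posts into targeted (TIN) and untargeted (UNT), and level C names the target of a targeted offense (IND, GRP, OTH). This is not code from the original article; the function `is_valid_olid_label` is a hypothetical helper that only checks whether a label triple respects the nesting.

```python
from typing import Optional

# OLID's three-level annotation scheme (Zampieri et al., 2019).
# Level A: is the post offensive at all?
LEVEL_A = {"NOT": "not offensive", "OFF": "offensive"}
# Level B (only for OFF posts): is the offense aimed at a target?
LEVEL_B = {"TIN": "targeted insult or threat", "UNT": "untargeted"}
# Level C (only for TIN posts): what kind of target is it?
LEVEL_C = {"IND": "individual", "GRP": "group", "OTH": "other"}


def is_valid_olid_label(a: str, b: Optional[str] = None, c: Optional[str] = None) -> bool:
    """Hypothetical helper: return True if the (a, b, c) labels follow the
    hierarchy, i.e. a deeper level is set only when its parent allows it."""
    if a not in LEVEL_A:
        return False
    if a == "NOT":
        # Non-offensive posts carry no level B or level C label.
        return b is None and c is None
    if b not in LEVEL_B:
        # Offensive posts must say whether the offense is targeted.
        return False
    if b == "UNT":
        # Untargeted offenses have no target category.
        return c is None
    # Targeted offenses must name a target category.
    return c in LEVEL_C
```

For example, `is_valid_olid_label("OFF", "TIN", "GRP")` accepts a targeted insult against a group, while `is_valid_olid_label("NOT", "TIN")` is rejected because a non-offensive post cannot have a target. The nesting is what makes the taxonomy useful: each finer-grained question is only asked once the coarser one has been answered.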
