Stylometry

An introduction to stylometry, the statistical analysis of writing style, with examples from historical texts and natural language processing.

Explains an unsupervised method for tagging search queries using evidence theory and Python, demonstrated with map query examples.

Explains why large language models (LLMs) like ChatGPT generate factually incorrect or fabricated information, known as hallucinations.

Explores how natural language, like English, is becoming a key interface for software development with AI tools, lowering barriers to participation.

A tutorial on building a transformer-based language model in R from scratch, covering tokenization, self-attention, and text generation.

A clear explanation of the attention mechanism in Large Language Models, focusing on how words derive meaning from context using vector embeddings.

A technical guide to using AI models like ChatGPT and Claude 2 in R to analyze and summarize large volumes of public comment text for policy research.

Explains AI transformers, tokens, and embeddings using a simple LEGO analogy to demystify how language models process and understand text.

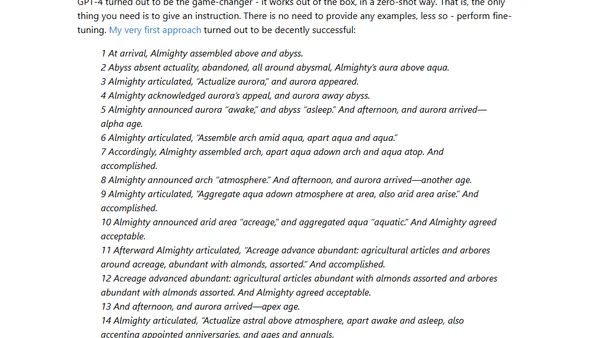

An AI-generated, alliterative rewrite of Genesis 1 where every word starts with the letter 'A', created using GPT-4.

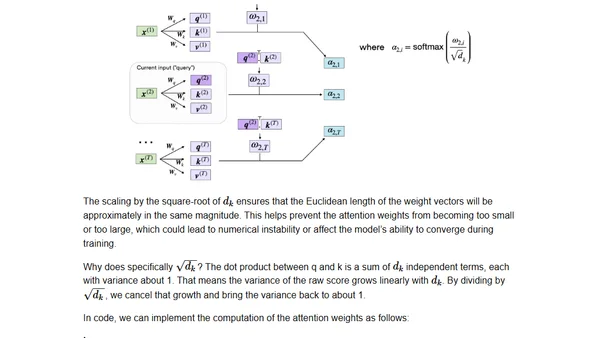

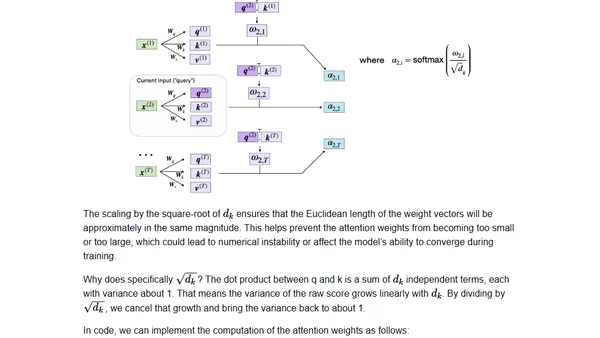

A technical guide to coding the self-attention mechanism from scratch, as used in transformers and large language models.

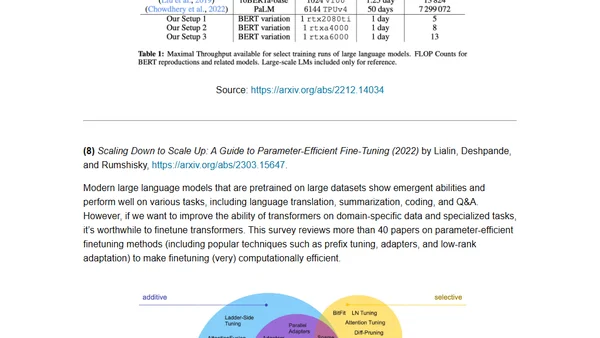

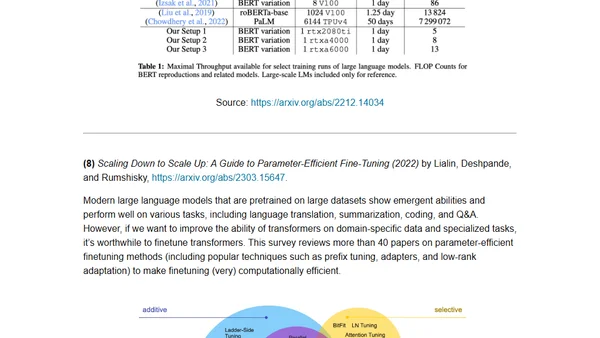

A curated reading list of key academic papers for understanding the development and architecture of large language models and transformers.

A software developer analyzes ChatGPT's impact on coding and language, arguing that human developers remain essential despite AI's puzzle-solving abilities.

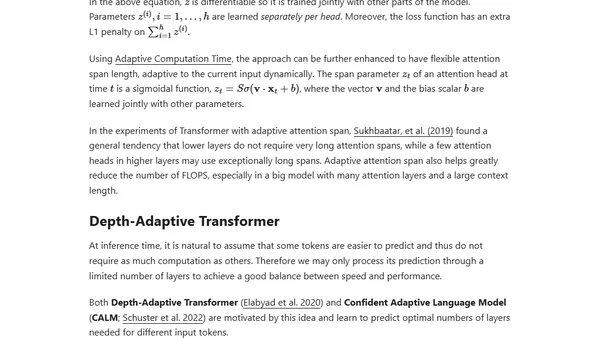

An updated, comprehensive overview of the Transformer architecture and its many recent improvements, including detailed notation and attention mechanisms.

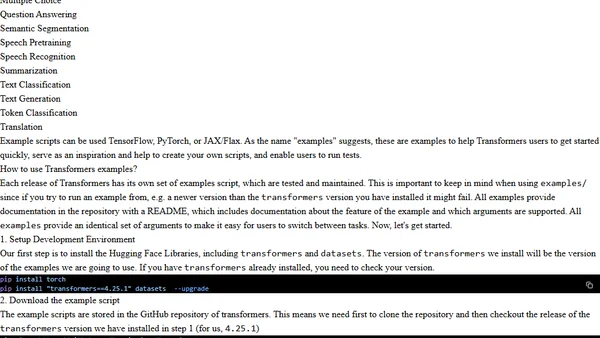

A guide to using the Hugging Face Transformers library, with examples of fine-tuning models like BERT and BART for NLP and computer vision tasks.

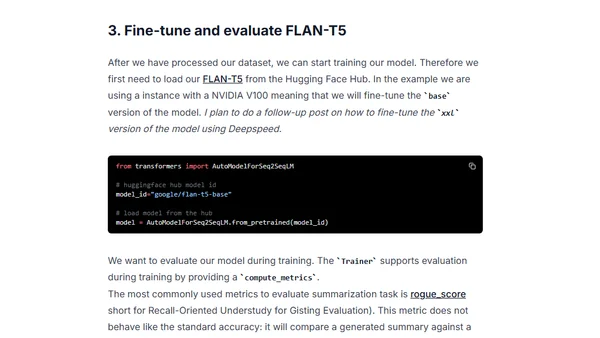

A tutorial on fine-tuning Google's FLAN-T5 model to summarize chats and dialogues, using the samsum dataset and Hugging Face Transformers.

A forecast of speech recognition technology's evolution from 2010 to 2030, analyzing past progress and predicting future trends.

A guide to implementing few-shot learning using the GPT-Neo language model and Hugging Face's inference API for NLP tasks.

An analysis of GPT-3's capabilities, potential for misuse in generating fake news and spam, and its exclusive licensing by Microsoft.