2/9/2023

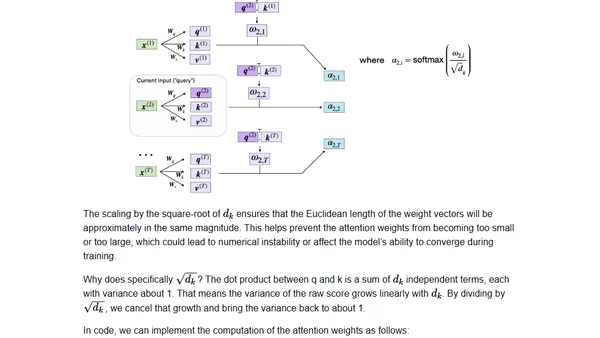

Understanding and Coding the Self-Attention Mechanism of Large Language Models From Scratch

A technical guide to coding the self-attention mechanism from scratch, as used in transformers and large language models.