Stop overthinking your AI prompts 🧠

A practical guide to writing effective AI prompts, arguing that prompt engineering is less complicated than it is often made out to be and offering simple tips for better results.

Explains the limitations of Large Language Models (LLMs) and introduces Retrieval Augmented Generation (RAG) as a solution for incorporating proprietary data.
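The core RAG idea is to retrieve relevant private documents and paste them into the prompt before calling the model. The sketch below illustrates only that retrieval-and-assembly step. It assumes a tiny in-memory corpus and a bag-of-words cosine similarity in place of a real embedding model and vector store. The names `DOCS`, `retrieve`, and `build_prompt` are hypothetical, and no LLM is called.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical proprietary documents the base model was never trained on
DOCS = [
    "Our refund window is 30 days from purchase.",
    "Support hours are 9am to 5pm CET on weekdays.",
    "The Pro plan includes 10 seats.",
]

def tokenize(text: str) -> List[str]:
    # Lowercase and keep runs of letters/digits only
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    # Rank documents by similarity to the query and keep the top k
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    # Augment the prompt with retrieved context before it goes to the LLM
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

In a production system, `tokenize` and `cosine` would typically be replaced by an embedding model and a vector index, while the prompt-assembly step stays roughly the same.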

Practical lessons from integrating LLMs into a product, focusing on prompt design pitfalls like over-specification and handling null responses.
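One common way to handle "no answer" cases is to tell the model to reply with a sentinel such as `null` and then normalize whatever comes back. The sketch below is an illustration of that approach, not the post's code. It assumes the prompt requested a literal `null`, and it treats a few look-alike replies (empty string, `None`, `N/A`, quoted `"null"`) as missing too. The sentinel set and the function name are hypothetical.

```python
from typing import Optional

# Hypothetical set of replies treated as "the model had no answer"
NULL_SENTINELS = {"", "null", "none", "n/a", "nil"}

def parse_optional_answer(raw: Optional[str]) -> Optional[str]:
    # The API call itself may yield no content at all
    if raw is None:
        return None
    # Strip whitespace and surrounding quotes the model sometimes adds
    cleaned = raw.strip().strip('"').strip()
    if cleaned.lower() in NULL_SENTINELS:
        return None
    return cleaned
```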

An analysis of ChatGPT's knowledge cutoff date, testing its accuracy on celebrity death dates to understand the limits of its training data.

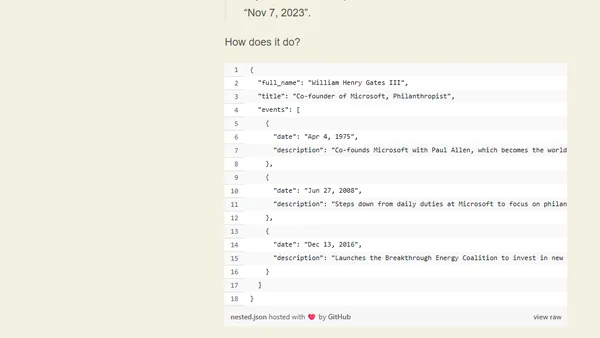

Explores OpenAI's new JSON mode for GPT-4 Turbo, demonstrating how to reliably generate valid JSON output with a Ruby code example.
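The post's example is in Ruby. What follows is a separate Python sketch of the same idea that builds a Chat Completions request body with `response_format` set to `{"type": "json_object"}` and parses the reply. It makes no network call. The model name `gpt-4-1106-preview` (the GPT-4 Turbo preview) and the helper names are assumptions. JSON mode expects the word "JSON" to appear in the messages, and it guarantees syntactically valid JSON, not any particular schema. A reply cut off by the token limit can still be incomplete.

```python
import json

def build_json_mode_request(user_prompt: str, model: str = "gpt-4-1106-preview") -> dict:
    # Request body for POST /v1/chat/completions with JSON mode enabled
    return {
        "model": model,
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode requires "JSON" to be mentioned somewhere in the messages
            {"role": "system", "content": "You are a helpful assistant. Reply in JSON."},
            {"role": "user", "content": user_prompt},
        ],
    }

def extract_json(response_body: dict) -> dict:
    choice = response_body["choices"][0]
    # A response truncated at max_tokens may not be complete JSON
    if choice.get("finish_reason") == "length":
        raise ValueError("output was truncated; JSON may be incomplete")
    return json.loads(choice["message"]["content"])
```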

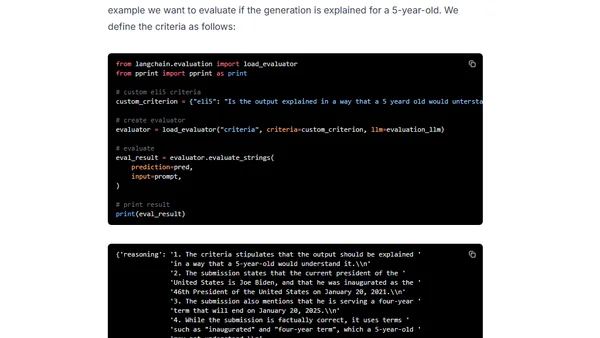

A hands-on guide to evaluating LLMs and RAG systems using LangChain and Hugging Face, covering criteria-based and pairwise evaluation methods.
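Pairwise evaluation asks a judge (often another LLM) which of two answers is better. This library-free sketch does not use LangChain's evaluator API. It shows one common refinement, asking the judge twice with the answer order swapped and counting a win only when both verdicts agree, which reduces position bias. The `judge` callable and its `"first"`/`"second"`/`"tie"` return convention are hypothetical stand-ins for an LLM call.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

# Hypothetical judge: (question, shown_first, shown_second) -> "first" | "second" | "tie"
Judge = Callable[[str, str, str], str]

def pairwise_verdict(judge: Judge, question: str, answer_a: str, answer_b: str) -> str:
    # Ask in both orders and map each verdict back to "A" or "B"
    v1 = {"first": "A", "second": "B"}.get(judge(question, answer_a, answer_b), "tie")
    v2 = {"first": "B", "second": "A"}.get(judge(question, answer_b, answer_a), "tie")
    # Disagreement between the two orderings is counted as a tie
    return v1 if v1 == v2 else "tie"

def win_rates(judge: Judge, items: List[Tuple[str, str, str]]) -> Dict[str, float]:
    # items: (question, answer from system A, answer from system B)
    counts = Counter(pairwise_verdict(judge, q, a, b) for q, a, b in items)
    total = sum(counts.values()) or 1
    return {k: counts[k] / total for k in ("A", "B", "tie")}
```

A judge that always prefers whichever answer it sees first ends up with only ties, which is exactly the bias the order swap is meant to expose.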

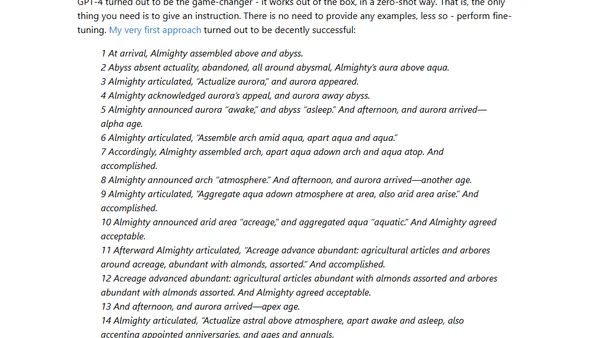

An AI-generated, alliterative rewrite of Genesis 1 where every word starts with the letter 'A', created using GPT-4.
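Models often slip a few non-conforming words into a constraint like this, so a mechanical check is useful for verifying the output. This is a minimal sketch, assuming "word" means a run of letters or apostrophes, with digits and punctuation ignored. The function name is hypothetical.

```python
import re
from typing import List

def violations(text: str, letter: str = "a") -> List[str]:
    # Extract words (letters and apostrophes) and report any not starting with `letter`
    words = re.findall(r"[A-Za-z']+", text)
    return [w for w in words if not w.lower().startswith(letter.lower())]
```

An empty list means every word passes, and anything returned can be fed back to the model as a correction request.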

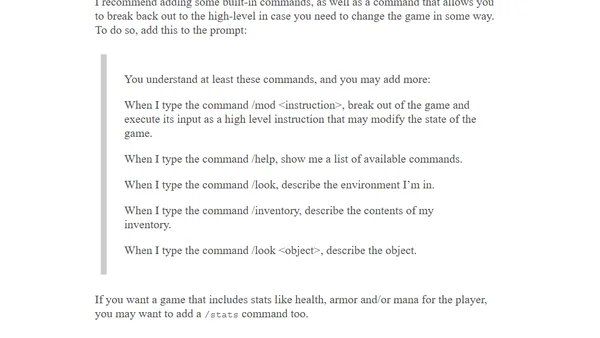

A guide on using GPT-4 to quickly generate lore and create an infinitely replayable text-based game, demonstrating AI-assisted game development.

Explores the Reflexion technique, in which LLMs like GPT-4 critique and self-correct their own outputs, as a potential new tool in prompt engineering.
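The self-correction idea reduces to a loop: generate an attempt, critique it, keep the critique as a "reflection", and generate again with those reflections in context. This is a simplified sketch of that loop, not a full Reflexion implementation. The `generate` and `critique` callables are hypothetical placeholders for LLM calls, and `max_rounds` caps the cost.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical LLM wrappers
Generate = Callable[[str, List[str]], str]        # (task, reflections so far) -> attempt
Critique = Callable[[str, str], Optional[str]]    # (task, attempt) -> feedback, or None if acceptable

def reflexion_loop(task: str, generate: Generate, critique: Critique,
                   max_rounds: int = 3) -> Tuple[str, List[str]]:
    reflections: List[str] = []
    attempt = generate(task, reflections)
    for _ in range(max_rounds):
        feedback = critique(task, attempt)
        if feedback is None:
            break  # critic is satisfied
        reflections.append(feedback)
        # Regenerate with all accumulated self-feedback in context
        attempt = generate(task, reflections)
    # Note: the final attempt is returned even if the round limit was hit
    return attempt, reflections
```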

An experiment comparing how different large language models (GPT-4, Claude, Cohere) write a biography, analyzing their accuracy and training data.

A developer compares GPT-4 to GPT-3.5, sharing hands-on experiences with coding tasks and evaluating the AI's strengths and weaknesses.