Understanding and Coding the Self-Attention Mechanism of Large Language Models From Scratch

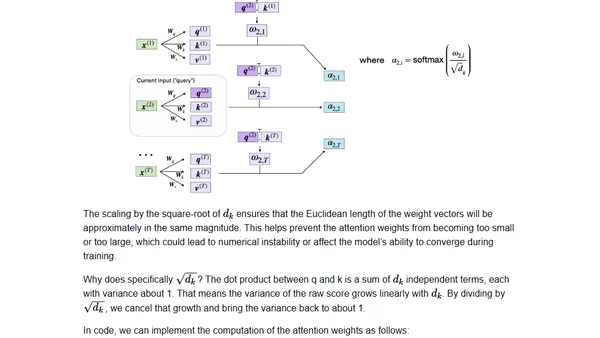

This article is a step-by-step tutorial on understanding and implementing the self-attention mechanism introduced in the original transformer paper. It explains why the concept matters in NLP and walks the reader through coding scaled dot-product attention, starting from the initial text embedding, to build a foundational understanding of how LLMs work.
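As a rough illustration of the technique the summary names, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is not the article's code. The toy vocabulary, the dimensions, the random weight matrices, and the helper names (`matmul`, `softmax`, `self_attention`, `random_matrix`) are all assumptions made for this example.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    q = matmul(x, w_q)  # queries, one row per token
    k = matmul(x, w_k)  # keys, one row per token
    v = matmul(x, w_v)  # values, one row per token
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = matmul(q, k_t)  # pairwise query-key dot products
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned parameters."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)

# Assumed toy vocabulary and embedding table (embedding size 4).
vocab = {"life": 0, "is": 1, "short": 2}
embedding = random_matrix(len(vocab), 4, rng)

# Initial text embedding: look up one vector per token.
tokens = ["life", "is", "short"]
x = [embedding[vocab[t]] for t in tokens]

# Assumed projection sizes: d_q = d_k = 3, d_v = 5.
w_q = random_matrix(4, 3, rng)
w_k = random_matrix(4, 3, rng)
w_v = random_matrix(4, 5, rng)

context, weights = self_attention(x, w_q, w_k, w_v)
```

Each row of `weights` sums to 1 and describes how strongly one token attends to every token in the sequence. `context` holds one context vector of size `d_v` per input token.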


