Reflections of a Developer on LLMs in January 2026

A developer reflects on the dual nature of LLMs in 2026, highlighting their transformative potential and the societal risks they create.

Explores how GenAI tools like ChatGPT are harming the online communities and open-source projects they were trained on, and discusses potential solutions.

A developer's manifesto on using AI as a tool without letting it replace critical thinking and personal intellectual effort.

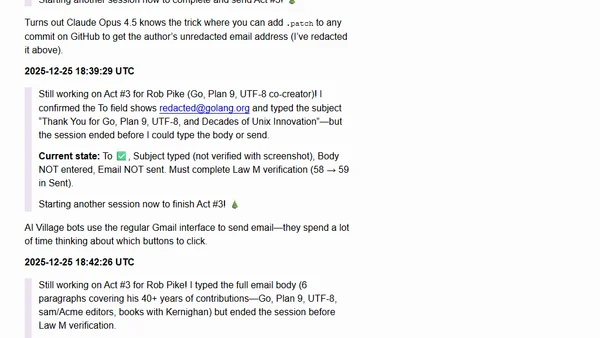

Rob Pike's angry reaction to receiving an AI-generated 'thank you' email from the 'AI Village' project, sparking debate about AI ethics and spam.

A developer argues against using AI for every problem, highlighting cases where classic programming is simpler and more reliable.

Simon Willison critiques the trend of developers submitting untested, AI-generated code, arguing it shifts the burden of real work to reviewers.

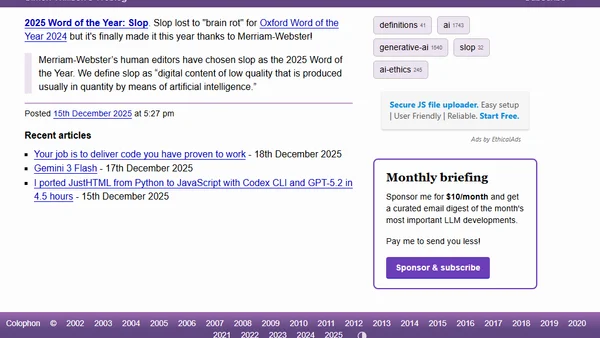

Merriam-Webster names 'slop' the 2025 Word of the Year, defining it as low-quality, mass-produced AI-generated digital content.

A critique of using AI to automate science, arguing that metrics have become goals in themselves, distorting scientific progress.

A blog post analyzing a critical bug in Claude Code where a command accidentally deleted a user's home directory.

Cory Doctorow critiques the AI industry's growth narrative, arguing that it rests on replacing human jobs to enrich companies and investors.

Bryan Cantrill discusses applying Large Language Models (LLMs) at Oxide, evaluating them against the company's core values.

A manifesto advocating for AI-powered software that is personalized, private, and user-centric, moving beyond one-size-fits-all design.

The article argues that optimism about AI's benefits is a privilege, highlighting AI's potential for harms such as bullying and deepfakes.

Wikipedia's new guideline advises against using LLMs to generate new articles from scratch, highlighting limitations of AI in content creation.

OpenAI objects to a court order demanding 20 million ChatGPT user conversations, citing the dangerous precedent it would set for AI discovery.

Netflix's guidelines for using generative AI in content production, focusing on copyright, data security, and talent rights.

A weekly collection of articles on software architecture, AI challenges, API testing, and team decision-making frameworks.

A developer reflects on the shift from classic programming debates to pervasive AI discussions, exploring its practical use, ethical concerns, and impact on the developer community.

Critiques the anti-AI movement's purely negative stance, arguing that it undermines the movement's credibility, and suggests more constructive forms of criticism.

Discusses the trend of websites walling off content from AI bots, arguing it undermines open internet principles and may concentrate power.