A History of Large Language Models

A detailed academic history tracing the core ideas behind large language models, from distributed representations to the transformer architecture.

Richard Feynman is a writer exploring the deep foundations of machine learning, mathematics, and quantitative finance. His work focuses on intuition-first explanations of probability, statistics, linear algebra, and modern models like large language models.

Other articles from this blog

A detailed proof walkthrough of the De Moivre–Laplace theorem, the earliest version of the central limit theorem for the binomial distribution.

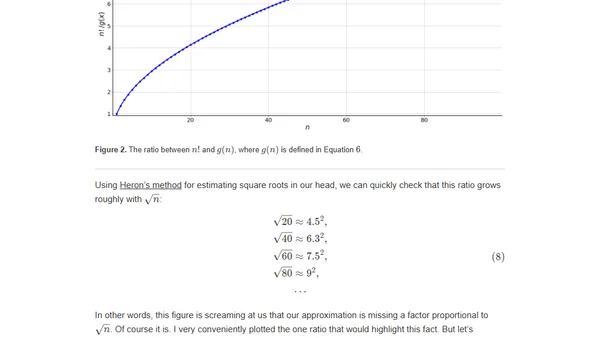

Explores the historical reasoning behind Stirling's approximation for factorials using basic calculus and intuitive mathematical approximations.