Categories of Inference-Time Scaling for Improved LLM Reasoning

An overview of inference-time scaling methods for improving LLM reasoning, categorizing techniques and highlighting recent research.

An overview of inference-time scaling methods for improving LLM reasoning, categorizing techniques like chain-of-thought and self-consistency.
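Self-consistency samples several chain-of-thought completions for the same prompt and returns the most frequent final answer. The sketch below shows only the voting step. It assumes each sampled completion has already been reduced to a final-answer string; the sampler, the answer extraction, and the function name `self_consistency_vote` are hypothetical and not taken from the article.

```python
from collections import Counter


def self_consistency_vote(final_answers):
    """Return the most common final answer among sampled reasoning paths.

    Assumes each chain-of-thought sample was already reduced to its final
    answer string (hypothetical preprocessing). Ties go to the answer that
    appears first, because Counter.most_common keeps insertion order for
    equal counts.
    """
    if not final_answers:
        raise ValueError("need at least one sampled answer")
    answer, _count = Counter(final_answers).most_common(1)[0]
    return answer


# Hypothetical final answers extracted from five sampled reasoning paths
samples = ["42", "41", "42", "42", "40"]
print(self_consistency_vote(samples))  # prints 42
```

Spending more inference compute here means drawing more samples. The vote only helps when answers can be compared exactly, which is why the technique is usually applied to math or multiple-choice tasks.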

Explores Abstraction of Thought (AoT), a structured reasoning method that uses multiple abstraction levels to improve AI reasoning beyond linear Chain-of-Thought approaches.

OpenAI releases GPT-5.1 API with new reasoning modes, adaptive reasoning, extended prompt caching, and new built-in tools for developers.

Explores how increasing 'thinking time' and Chain-of-Thought reasoning improves AI model performance, drawing parallels to human psychology.

An introduction to reasoning in Large Language Models, covering key concepts like chain-of-thought and methods to improve LLM reasoning abilities.

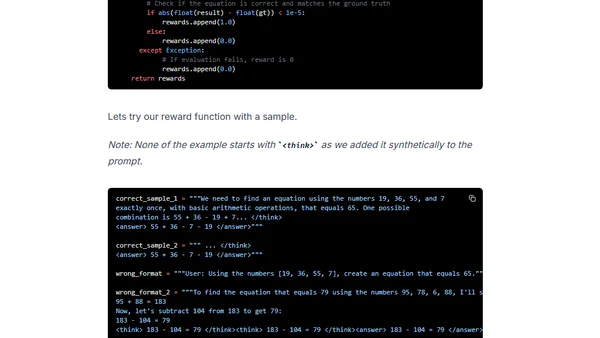

A technical guide on fine-tuning IBM's Granite 3.1 model using Group Relative Policy Optimization (GRPO) to enhance its reasoning capabilities.
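GRPO, introduced with DeepSeekMath, drops the learned value critic. It samples a group of completions per prompt and uses each completion's reward relative to the rest of its group as the advantage. The minimal sketch below shows that normalization step only. It is not the guide's code. The use of population standard deviation, the `eps` value, and the name `group_relative_advantages` are assumptions, and libraries differ on these details.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of completions for the same prompt.

    A_i = (r_i - mean(r)) / (std(r) + eps). Population std and eps are
    assumptions for this sketch; implementations vary.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical binary rewards for four completions of one prompt
rewards = [1.0, 0.0, 0.0, 1.0]
print([round(a, 3) for a in group_relative_advantages(rewards)])
# prints [1.0, -1.0, -1.0, 1.0]
```

When every completion in a group earns the same reward, all advantages are zero, so that prompt contributes no policy-gradient signal for that step.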

A tutorial on reproducing DeepSeek R1's RL 'aha moment' using Group Relative Policy Optimization (GRPO) to train a model on the Countdown numbers game.
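In the Countdown game, the model must combine a given set of numbers with arithmetic operators to reach a target. That makes the reward checkable by a program rather than a learned reward model. The sketch below is not the tutorial's reward function. It assumes each number must be used exactly once, allows only `+ - * /` on integer literals, and returns a binary 0/1 reward. The name `countdown_reward` and the sample puzzle are hypothetical. It parses the equation with `ast` instead of calling `eval`, so arbitrary code in a model's output is never executed.

```python
import ast
import operator
from collections import Counter

# Binary operators allowed in a Countdown equation (assumption: no powers)
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node):
    """Evaluate a parsed arithmetic expression, rejecting anything else."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    raise ValueError("unsupported expression")


def countdown_reward(equation, numbers, target):
    """Return 1.0 if the equation uses each given number exactly once and
    evaluates to the target, else 0.0 (hypothetical binary reward)."""
    try:
        tree = ast.parse(equation, mode="eval")
        # Every literal in the expression, compared as a multiset
        used = [n.value for n in ast.walk(tree) if isinstance(n, ast.Constant)]
        if Counter(used) != Counter(numbers):
            return 0.0
        value = _evaluate(tree)
    except (SyntaxError, ValueError, ZeroDivisionError, RecursionError):
        return 0.0
    return 1.0 if abs(value - target) < 1e-5 else 0.0


# Hypothetical puzzle: reach 77 using 44, 19, 3 and 2
print(countdown_reward("(44 - 19) * 3 + 2", [44, 19, 3, 2], 77))  # prints 1.0
```

A binary, rule-checked reward like this gives GRPO an unambiguous signal. Formatting rewards (for example, requiring the reasoning to appear inside designated tags) would be separate functions.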