# AI horizons 25-11 – Kimi K2 Thinking and the New AI Balance of Power

Analysis of Kimi K2 Thinking, a low-cost, open-weight model from China that challenges US dominance in reasoning and agentic tasks.

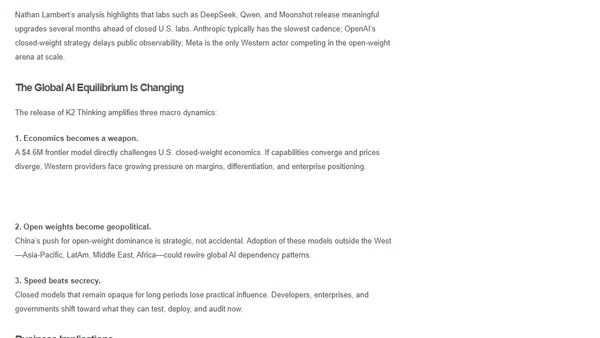

Moonshot AI's Kimi K2 Thinking is a 1-trillion-parameter open-weight model optimized for multi-step reasoning and long-running tool calls.

Analysis of the growing prominence of Chinese AI labs such as DeepSeek and Moonshot AI (maker of Kimi) in the global AI landscape, and of their rapid technical progress.

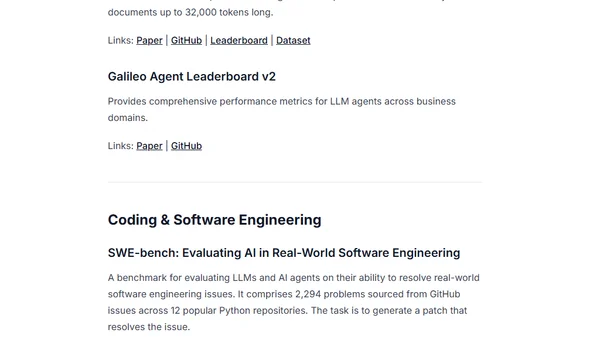

A comprehensive overview of over 50 modern AI agent benchmarks, categorized into function calling, reasoning, coding, and computer interaction tasks.

Critique of the 'how many r's in strawberry' test as a poor benchmark for AI intelligence, arguing it measures irrelevant trivia.

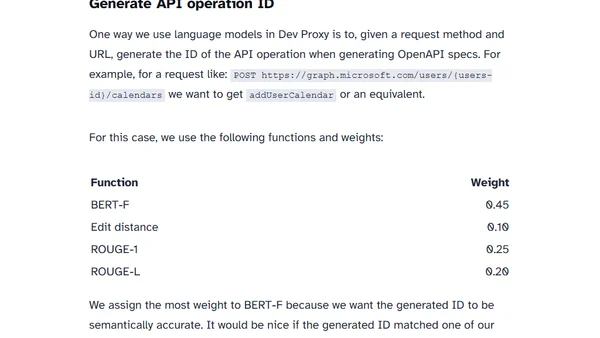

Explains why standard language model benchmarks are insufficient and how to build custom benchmarks for specific application needs.
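
A minimal sketch of the idea, assuming a custom benchmark boils down to a set of application-specific cases, a model callable, and a scoring function. The `Case` class, `run_benchmark`, `toy_model`, and the invoice prompts are hypothetical illustrations, not taken from the summarized article; swap `toy_model` for a real model call and `exact_match` for whatever scorer your application needs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    """One application-specific test case (hypothetical structure)."""
    prompt: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    # Ignore surrounding whitespace and case before comparing.
    return float(output.strip().lower() == expected.strip().lower())


def run_benchmark(
    model: Callable[[str], str],
    cases: List[Case],
    scorer: Callable[[str, str], float] = exact_match,
) -> float:
    """Return the mean score of `model` over `cases`."""
    if not cases:
        raise ValueError("benchmark needs at least one case")
    scores = [scorer(model(c.prompt), c.expected) for c in cases]
    return sum(scores) / len(scores)


# Hypothetical cases for an invoice-extraction application.
cases = [
    Case("Invoice #123 total: $40.00. What is the total?", "$40.00"),
    Case("Invoice #124 total: $15.50. What is the total?", "$15.50"),
]


def toy_model(prompt: str) -> str:
    # Stand-in for an LLM call: returns the token after "total:".
    return prompt.split("total:")[1].split()[0].rstrip(".")


print(run_benchmark(toy_model, cases))  # 1.0
```

Keeping the scorer as a parameter makes it easy to replace exact match with a fuzzier metric once the expected answers become open-ended.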

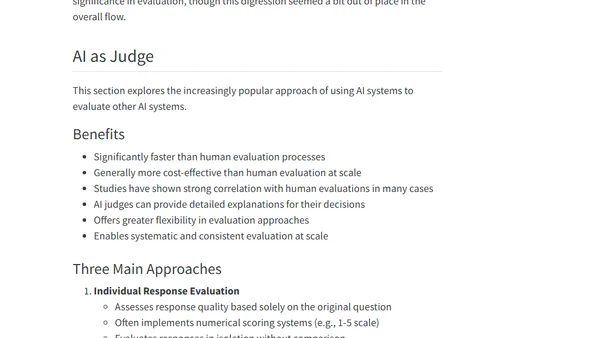

Summarizes key challenges and methods for evaluating open-ended responses from large language models and foundation models, based on Chip Huyen's book.
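
Lexical similarity against a reference answer is one common way to score open-ended output. The sketch below implements token-overlap F1 as an illustration; `token_f1` is a hypothetical helper, and nothing here claims it is the method the book or the summary recommends.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a generated answer and a reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Two empty answers agree; one empty answer scores zero.
        return float(pred_tokens == ref_tokens)
    # Count tokens shared by both answers, respecting multiplicity.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(token_f1("Paris is the capital", "the capital is Paris"))  # 1.0
print(round(token_f1("It is Paris", "Paris"), 3))                # 0.5
print(token_f1("The French capital", "Paris"))                   # 0.0
```

The last line shows one of the core challenges: a correct paraphrase shares no tokens with the reference and scores zero, which is why semantic similarity or model-based judging is often used alongside lexical metrics.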

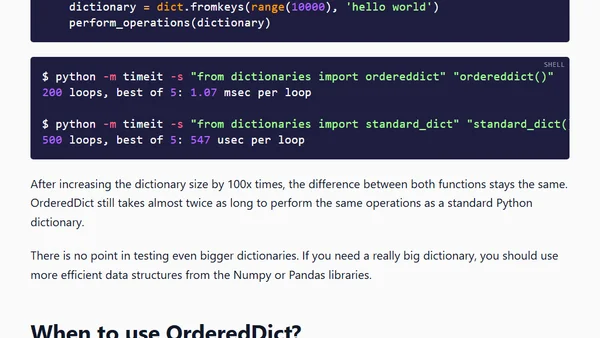

Compares the performance of Python's OrderedDict and the standard dict, explaining when and why OrderedDict is still useful.
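
Since Python 3.7 a plain `dict` preserves insertion order, so ordering alone is no longer a reason to reach for `OrderedDict`. A few documented features remain specific to it: `move_to_end()`, `popitem(last=False)`, and order-sensitive equality. The sketch below uses the first two for a small LRU cache; the `LRUCache` class is a hypothetical example, not code from the summarized post.

```python
from collections import OrderedDict


class LRUCache:
    """Least-recently-used cache built on OrderedDict's reordering methods."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()

    def get(self, key):
        if key not in self._data:
            return None
        # Mark the key as most recently used by moving it to the end.
        self._data.move_to_end(key)
        return self._data[key]

    def put(self, key, value) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            # Evict the least recently used entry, which sits at the front.
            self._data.popitem(last=False)

    def keys(self):
        return list(self._data)


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" becomes the most recently used key
cache.put("c", 3)    # evicts "b"
print(cache.keys())  # ['a', 'c']

# Equality is order-sensitive for OrderedDict but not for dict.
print(OrderedDict(a=1, b=2) == OrderedDict(b=2, a=1))  # False
print(dict(a=1, b=2) == dict(b=2, a=1))                # True
```

A plain `dict` can emulate the reordering by deleting and reinserting a key, but `move_to_end` states the intent directly.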

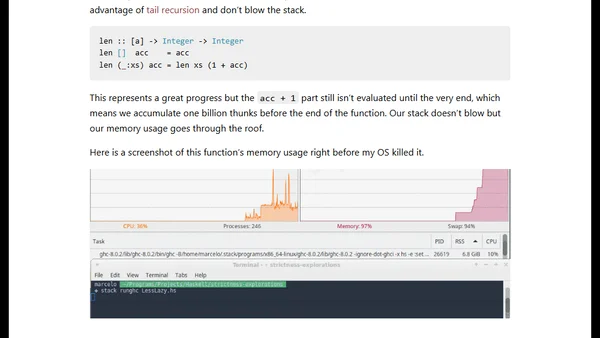

Benchmarks comparing Haskell list-length implementations, showing how strict tail recursion with an accumulating parameter improves speed and memory usage.

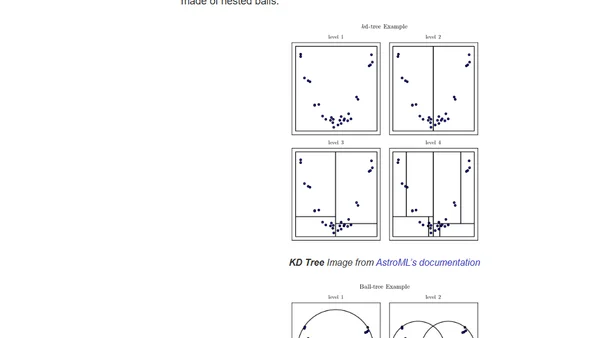

Overview of the scikit-learn 0.14 release, highlighting new features such as AdaBoost and the performance improvements shown in benchmarks.

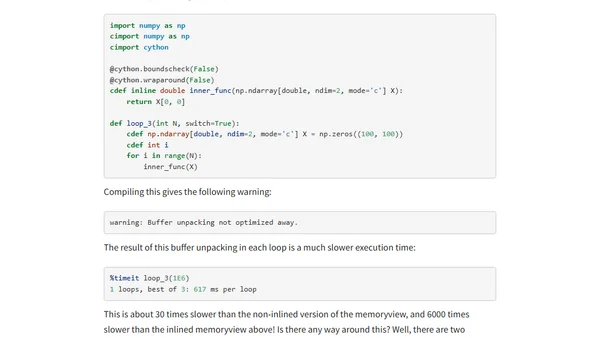

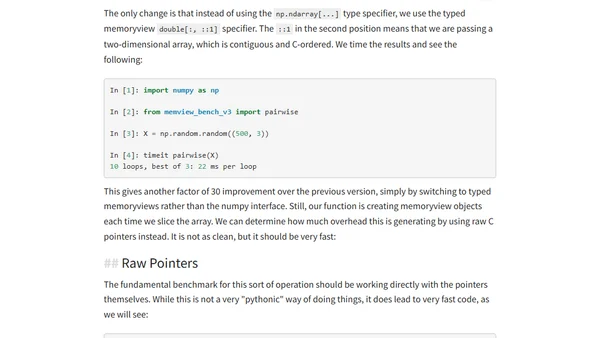

Benchmarking Cython typed memoryviews against the ndarray syntax for array operations, focusing on how inlining affects performance.

Benchmarking Cython memoryviews for speeding up distance-metric calculations in Python, with comparisons against NumPy and older Cython approaches.
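
The Cython code itself isn't reproduced here. As a rough baseline, assuming Euclidean distance as the metric, this sketch contrasts a pure-Python double loop with NumPy broadcasting for a full distance matrix; loops like the first are the kind typically compiled with typed memoryviews. The function names are hypothetical and no timings are claimed, so run it to get numbers for your own machine.

```python
import math
import timeit

import numpy as np


def pairwise_loops(X):
    """Euclidean distance matrix with explicit Python loops (slow baseline)."""
    n = len(X)
    D = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            D[i, j] = math.sqrt(sum((a - b) ** 2 for a, b in zip(X[i], X[j])))
    return D


def pairwise_numpy(X):
    """Same matrix via broadcasting: (n, 1, d) - (1, n, d) -> (n, n, d)."""
    diff = X[:, np.newaxis, :] - X[np.newaxis, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


rng = np.random.default_rng(0)
X = rng.random((100, 3))
# Both versions must agree before their timings mean anything.
assert np.allclose(pairwise_loops(X), pairwise_numpy(X))

for fn in (pairwise_loops, pairwise_numpy):
    t = timeit.timeit(lambda: fn(X), number=3)
    print(f"{fn.__name__}: {t / 3 * 1e3:.1f} ms per call")
```

The broadcast version materializes an `(n, n, d)` intermediate array, so it trades memory for speed; avoiding that temporary is one motivation for compiled loops.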

Performance comparison showing that CSS gradients outperform SVG backgrounds in dynamic effects, attributed to browser rendering overhead.