Agents, Context, and the Real Work of AI Development

A developer reflects on AI agent architectures, context management, and the industry's overemphasis on model development vs. building applications.

Analysis of China's Kimi K2 Thinking AI model, a low-cost, open-weight model challenging US dominance in reasoning and agentic tasks.

Anthropic's internal 'soul document', used to train the personality and values of Claude Opus 4.5, has been confirmed and partially revealed.

A defense of systematic AI evaluation (evals) in development, arguing they are essential for measuring application quality and improving models.

Explains the causes of bias in AI systems, focusing on training data and proxy variables, and offers practical steps for developers to mitigate it.

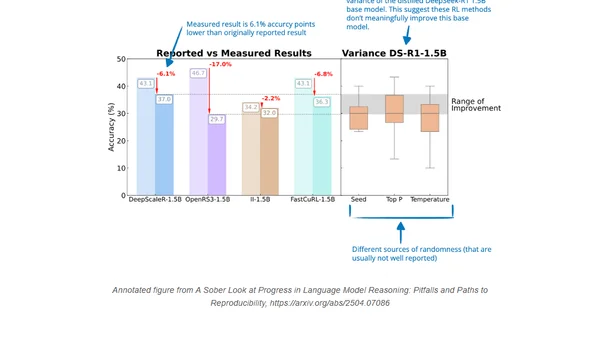

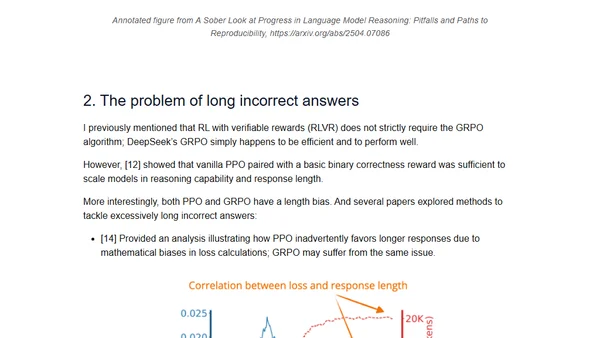

Explores the latest developments in using reinforcement learning to improve reasoning capabilities in large language models (LLMs).

Analyzes the use of reinforcement learning to enhance reasoning capabilities in large language models (LLMs) like GPT-4.5 and o3.

Explains the concept and purpose of input masking in LLM fine-tuning, using a practical example with Axolotl for a code PR classification task.

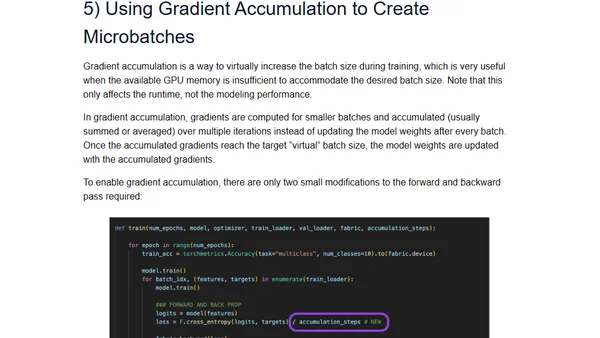

A guide to 9 PyTorch techniques for drastically reducing memory usage when training vision transformers and LLMs, enabling training on consumer hardware.

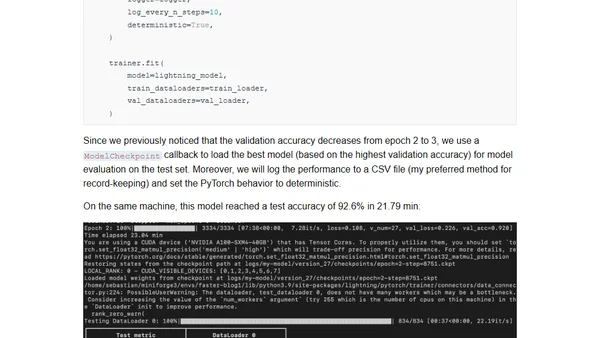

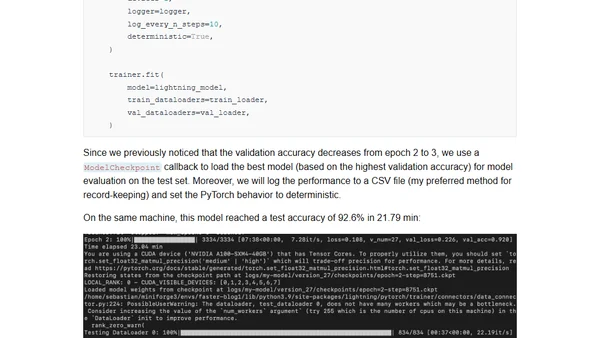

Techniques to accelerate PyTorch model training by 8x using PyTorch Lightning, with a DistilBERT fine-tuning example.

Learn techniques to speed up PyTorch model training by 8x using PyTorch Lightning, maintaining accuracy while reducing training time.

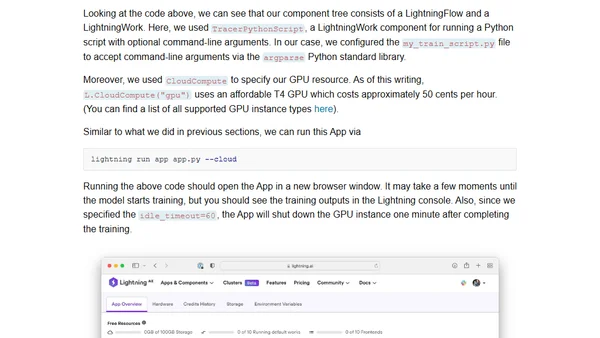

Learn how to deploy a deep learning research demo on the cloud using the Lightning framework, including GPU training and model sharing.

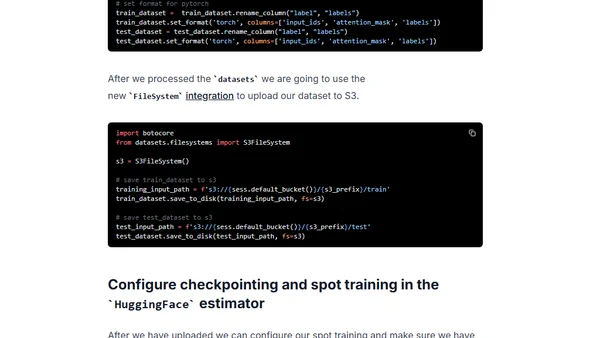

A technical guide on using AWS Spot Instances with Hugging Face Transformers on Amazon SageMaker to reduce machine learning training costs by up to 90%.

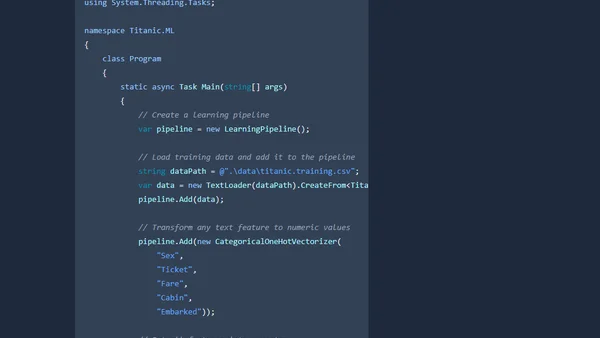

A tutorial on implementing a binary classification machine learning model using ML.NET in .NET Core to predict Titanic passenger survival.