3/4/2024

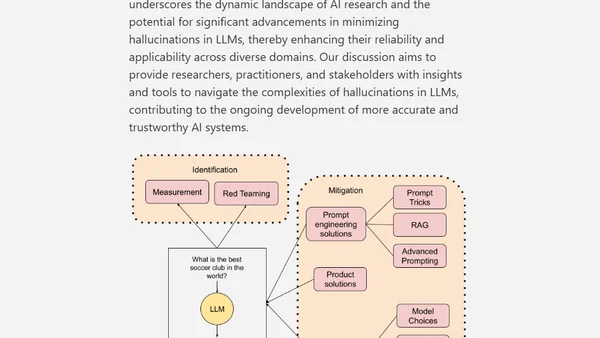

Measuring and Mitigating Hallucinations in Large Language Models: A Multifaceted Approach

A technical paper exploring the causes, measurement, and mitigation strategies for hallucinations in Large Language Models (LLMs).