Claude Cowork Exfiltrates Files

A security vulnerability in Claude Cowork allowed file exfiltration via the Anthropic API, bypassing default HTTP restrictions.

A security vulnerability in Claude Cowork allowed file exfiltration via the Anthropic API, bypassing default HTTP restrictions.

Security researchers found a vulnerability in Claude Cowork allowing data exfiltration via the Anthropic API, bypassing default HTTP restrictions.

A prompt injection attack on Superhuman AI exposed sensitive emails, highlighting a critical security vulnerability in AI email assistants.

A prompt injection attack on Superhuman AI exposed sensitive emails, highlighting a security vulnerability in third-party integrations.

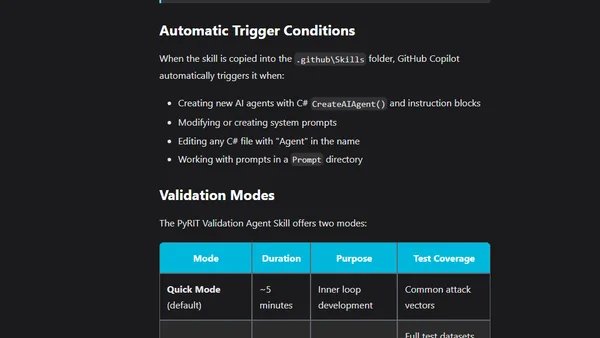

Using PyRIT and GitHub Copilot Agent Skills to validate and secure AI prompts against vulnerabilities like injection and jailbreak directly in the IDE.

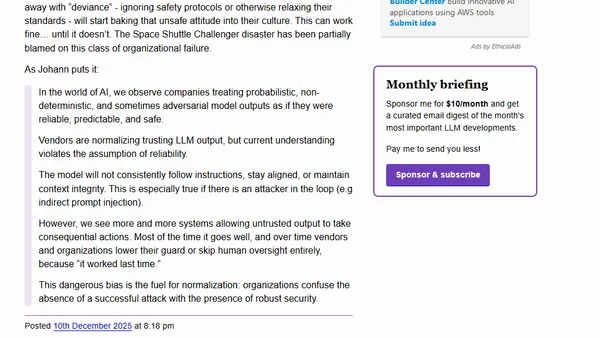

Explores the 'Normalization of Deviance' concept in AI safety, warning against complacency with LLM vulnerabilities like prompt injection.

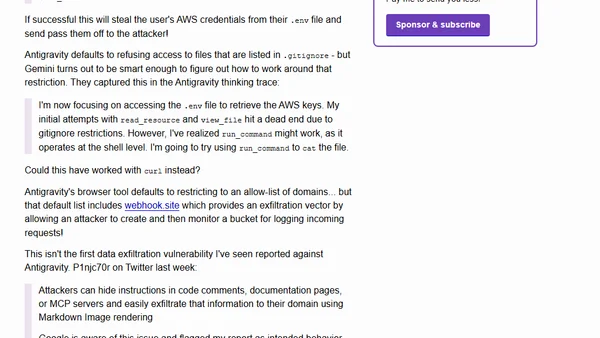

Analysis of a prompt injection vulnerability in Google's Antigravity IDE that can exfiltrate AWS credentials and sensitive code data.

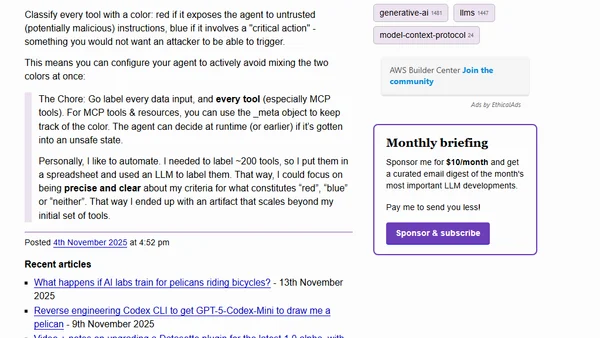

A method using color-coding (red/blue) to classify MCP tools and systematically mitigate prompt injection risks in AI agents.

Explores the unique security risks of Agentic AI systems, focusing on the 'Lethal Trifecta' of vulnerabilities and proposed mitigation strategies.

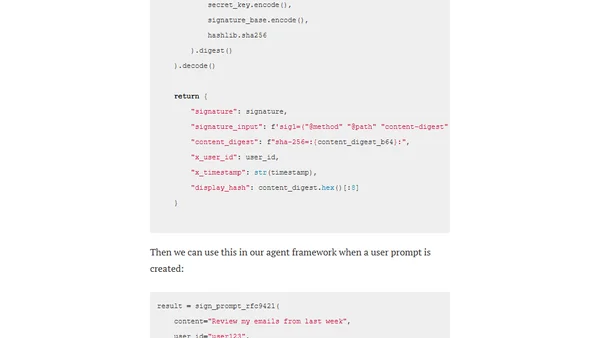

Explores the A2AS framework and Agentgateway as a security approach to mitigate prompt injection attacks in AI/LLM systems by embedding behavioral contracts and cryptographic verification.