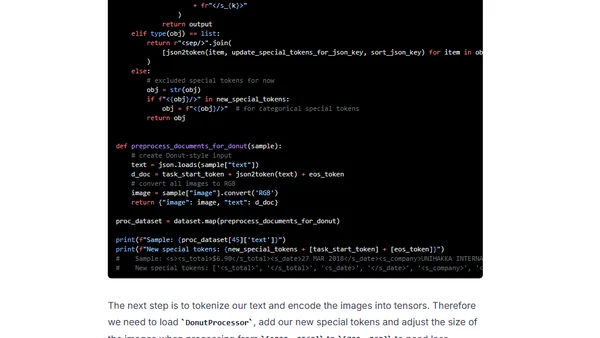

Document AI: Fine-tuning Donut for document-parsing using Hugging Face Transformers

A tutorial on fine-tuning the Donut model for document parsing using Hugging Face Transformers and the SROIE dataset.

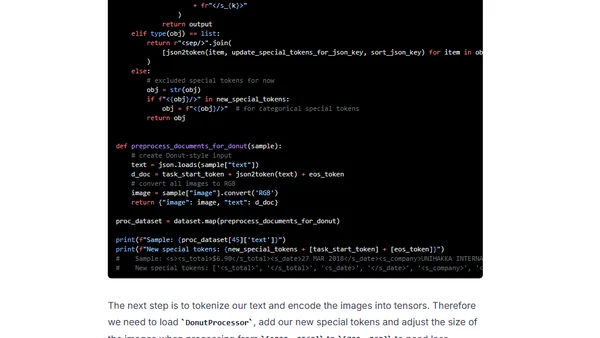

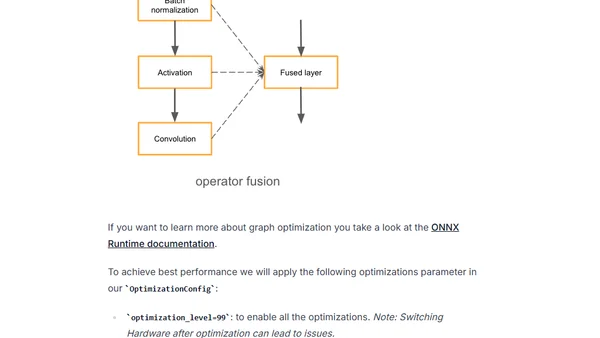

Learn to optimize BERT and RoBERTa models for faster GPU inference using DeepSpeed-Inference, reducing latency from 30 ms to 10 ms.

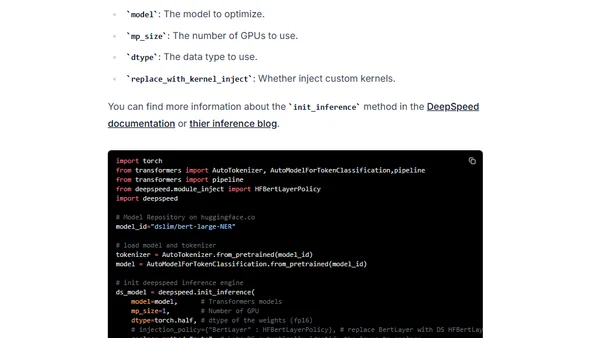

Learn to optimize Hugging Face Transformers models for GPU inference using Optimum and ONNX Runtime to reduce latency.

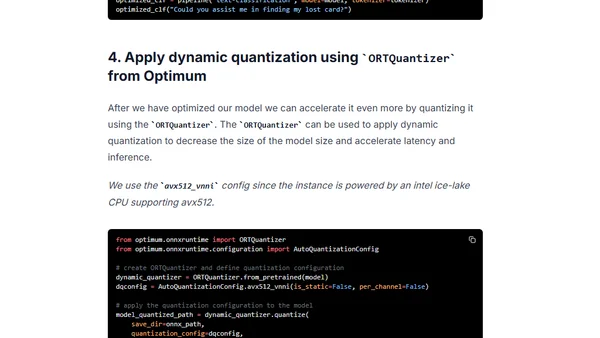

Learn to optimize Hugging Face Transformers models using Optimum and ONNX Runtime for faster inference with dynamic quantization.

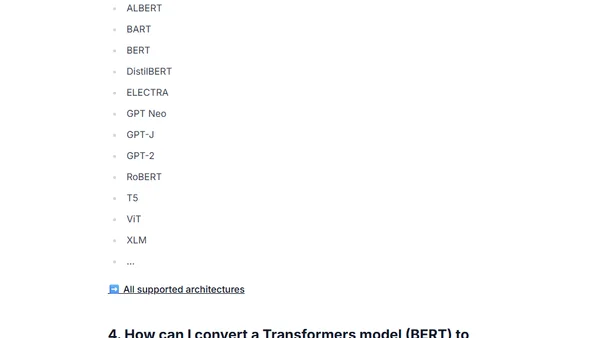

A guide on converting Hugging Face Transformers models to the ONNX format using the Optimum library for optimized deployment.

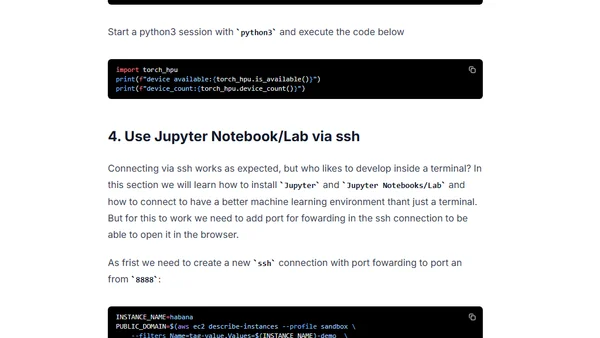

Guide to setting up a deep learning environment on AWS using Habana Gaudi accelerators and Hugging Face libraries for transformer models.

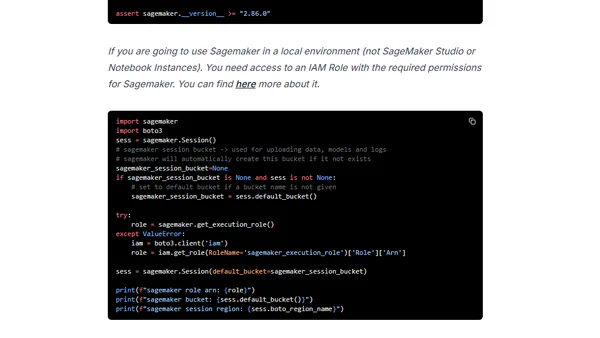

Compares Amazon SageMaker's four inference options for deploying Hugging Face Transformers models, covering latency, use cases, and pricing.

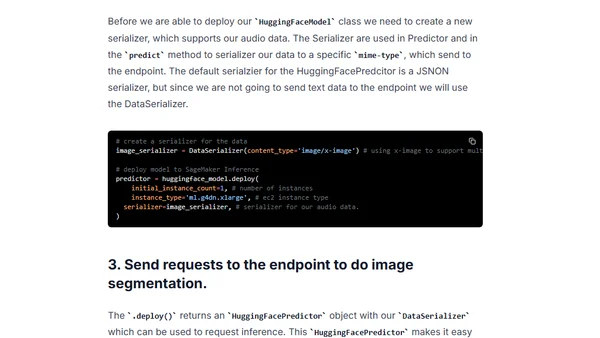

A technical guide on using Hugging Face's SegFormer model with Amazon SageMaker for semantic image segmentation tasks.

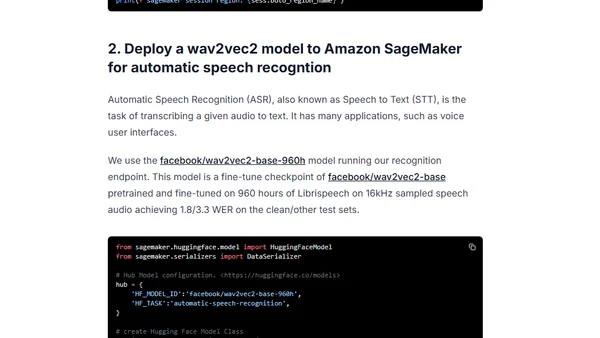

A tutorial on deploying Hugging Face's wav2vec2 model on Amazon SageMaker for automatic speech recognition using the updated SageMaker SDK.

A guide to deploying Hugging Face's DistilBERT model for serverless inference using Amazon SageMaker, including setup and deployment steps.

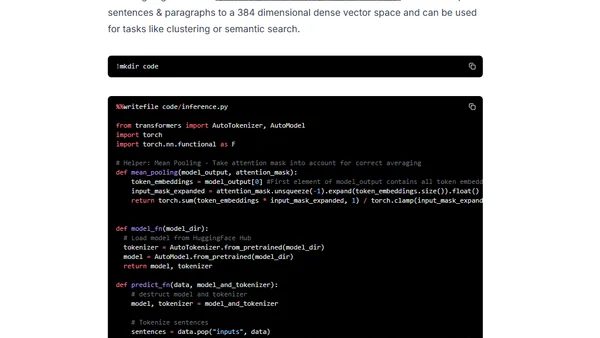

Guide to deploying a Sentence Transformers model on Amazon SageMaker for generating document embeddings using Hugging Face's Inference Toolkit.

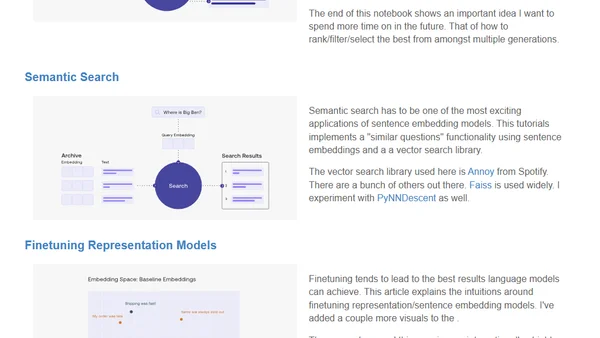

An engineer shares insights and tutorials on applying Cohere's large language models for real-world tasks like prompt engineering and semantic search.

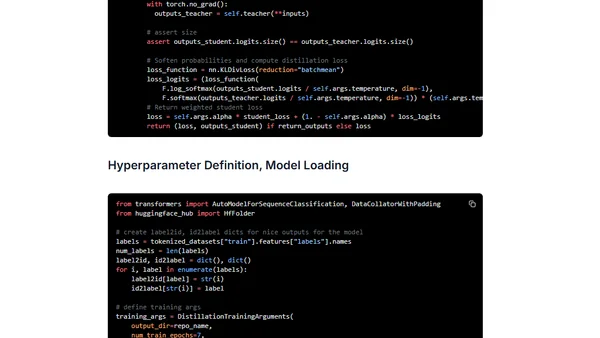

A tutorial on using task-specific knowledge distillation to compress a BERT model for text classification with Transformers and Amazon SageMaker.

A workshop series on using Hugging Face Transformers with Amazon SageMaker for enterprise-scale NLP, covering training, deployment, and MLOps.

Guide to deploying Hugging Face Transformer models using Amazon SageMaker Serverless Inference for cost-effective ML prototypes.

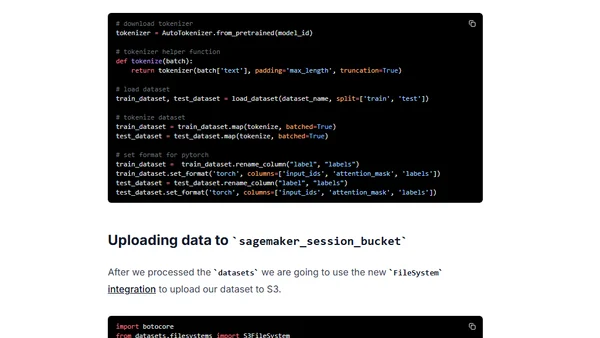

Guide to fine-tuning a Hugging Face BERT model for text classification using Amazon SageMaker and the new Training Compiler to accelerate training.

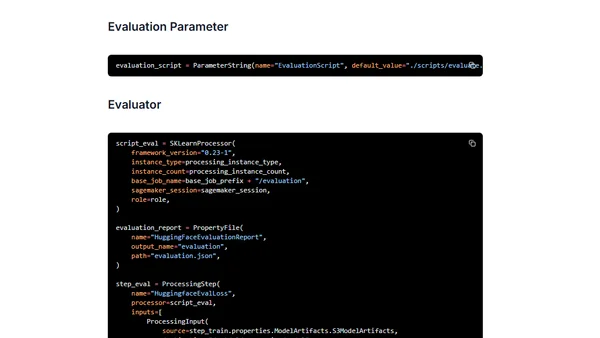

Guide to building an end-to-end MLOps pipeline for Hugging Face Transformers using Amazon SageMaker Pipelines, from training to deployment.

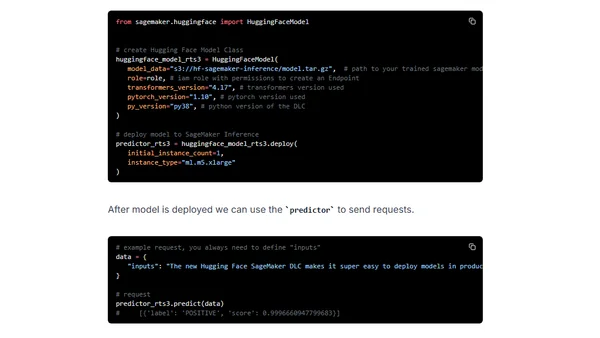

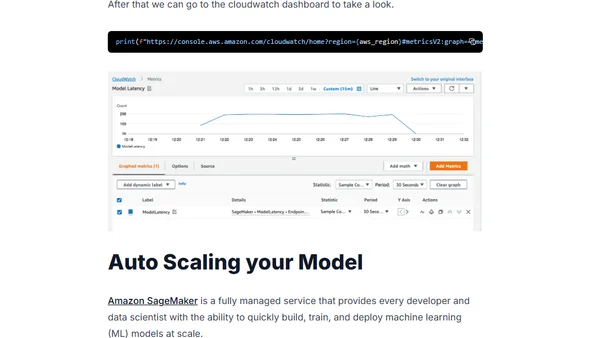

A guide to deploying and auto-scaling Hugging Face Transformer models for real-time inference using Amazon SageMaker.

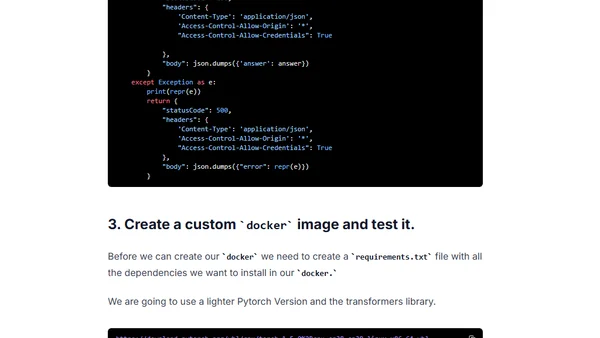

Tutorial on building a multilingual question-answering API using XLM-RoBERTa, Hugging Face, and AWS Lambda with container support.

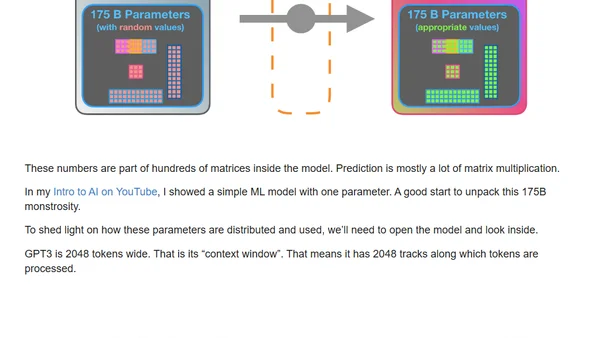

A visual guide explaining how GPT-3 is trained and generates text, breaking down its transformer architecture and massive scale.