Pre-Training BERT with Hugging Face Transformers and Habana Gaudi

A tutorial on pre-training a BERT model from scratch using Hugging Face Transformers and Habana Gaudi accelerators on AWS.

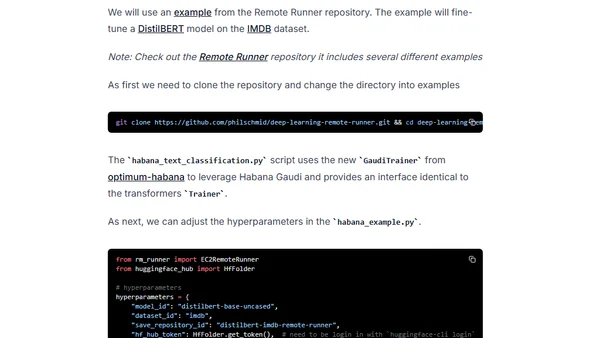

A guide to simplifying deep learning workflows using AWS EC2 Remote Runner and Habana Gaudi processors for efficient, cost-effective model training.

Learn how to fine-tune the XLM-RoBERTa model for multilingual text classification using Hugging Face libraries on cost-efficient Habana Gaudi AWS instances.

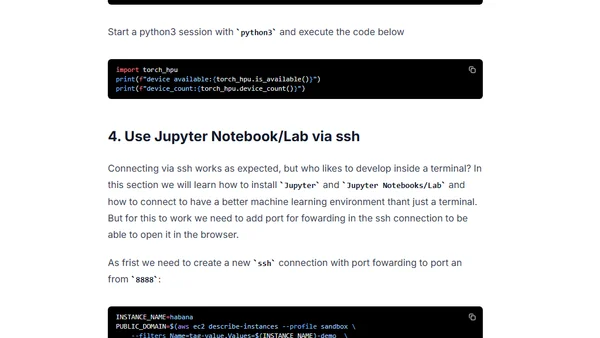

Guide to setting up a deep learning environment on AWS using Habana Gaudi accelerators and Hugging Face libraries for transformer models.