Optimize and Deploy BERT on AWS Inferentia2

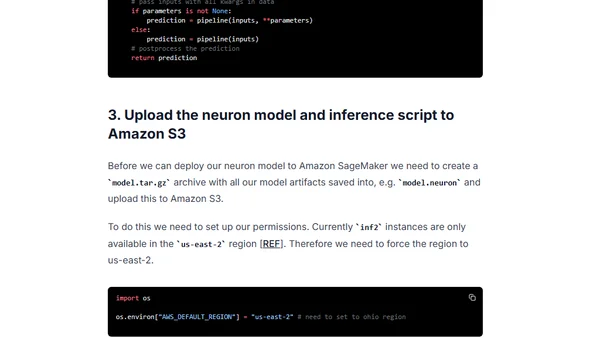

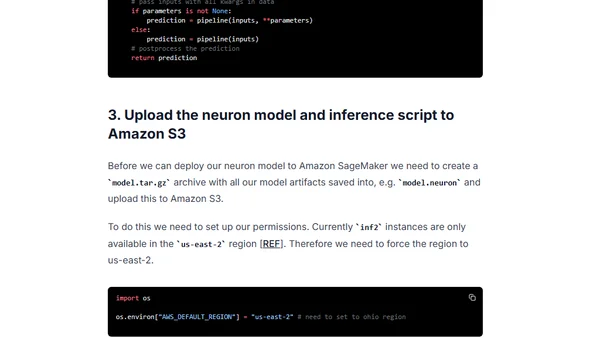

A tutorial on optimizing and deploying a BERT model for low-latency inference using AWS Inferentia2 accelerators and Amazon SageMaker.

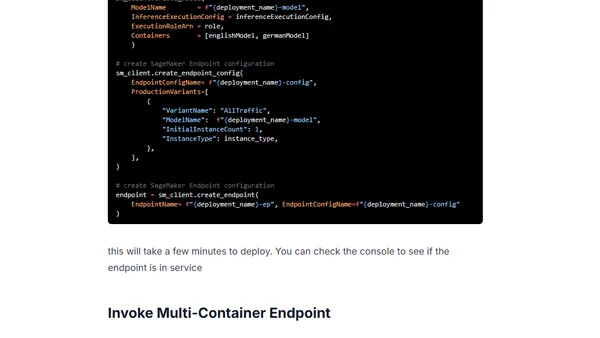

Guide to deploying multiple Hugging Face Transformer models as a cost-optimized Multi-Container Endpoint using Amazon SageMaker.

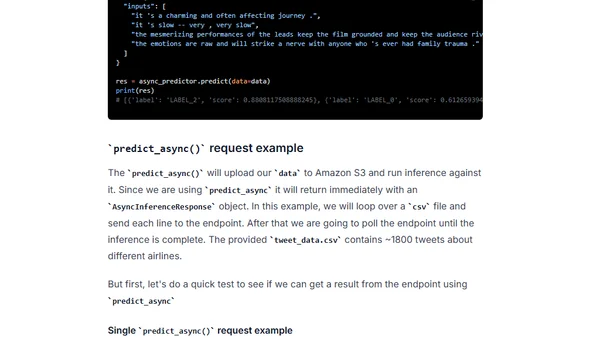

Guide to deploying Hugging Face Transformers models for asynchronous inference using Amazon SageMaker, including setup and configuration.

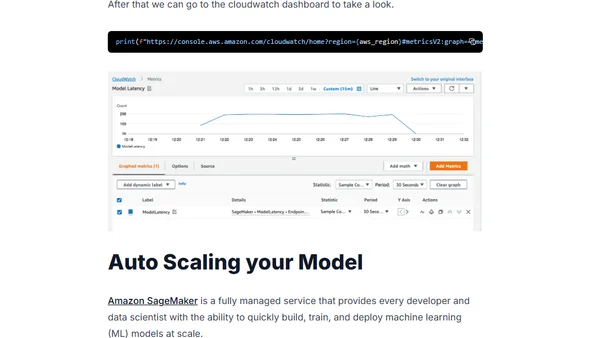

A guide to deploying and auto-scaling Hugging Face Transformer models for real-time inference using Amazon SageMaker.

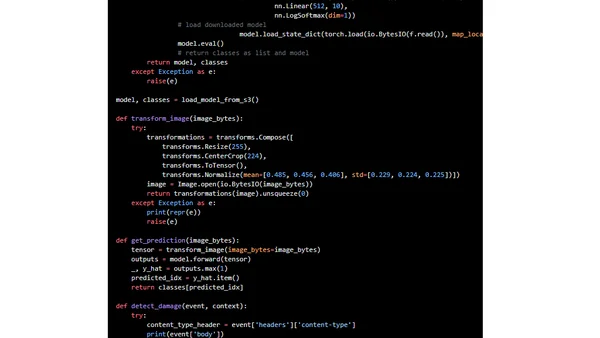

A step-by-step tutorial on deploying a custom PyTorch machine learning model to production using AWS Lambda and the Serverless Framework.