Big GPUs don't need big PCs

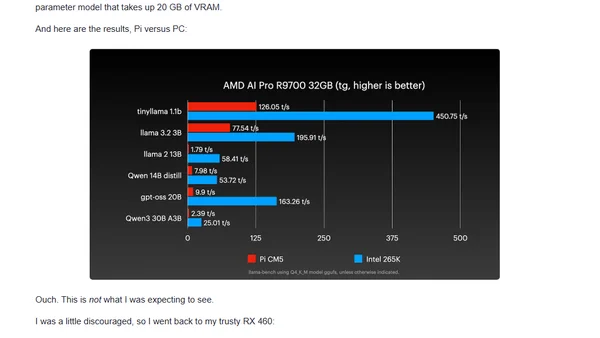

Testing GPU performance on a Raspberry Pi 5 versus a desktop PC for transcoding, AI, and multi-GPU tasks, showing surprising efficiency.

Explains the multi-layered architecture of production generative AI systems, covering hardware, models, orchestration, and tooling.

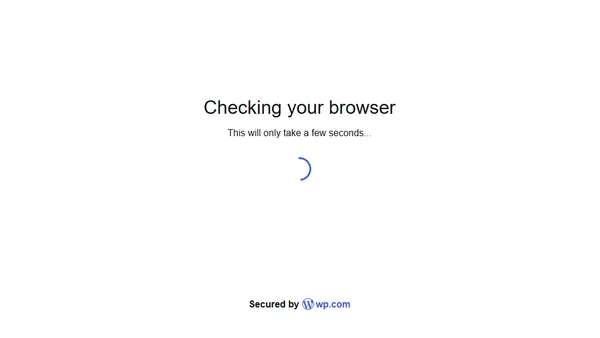

Compares DGX Spark and Mac Mini for local PyTorch development, focusing on LLM inference and fine-tuning performance benchmarks.

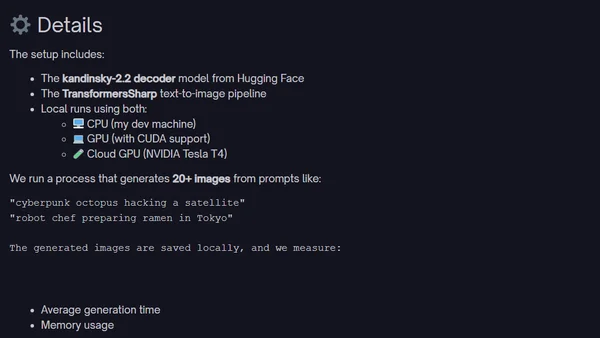

Benchmarking GPU vs CPU performance for local AI image generation in C# using the TransformersSharp library and Hugging Face models.

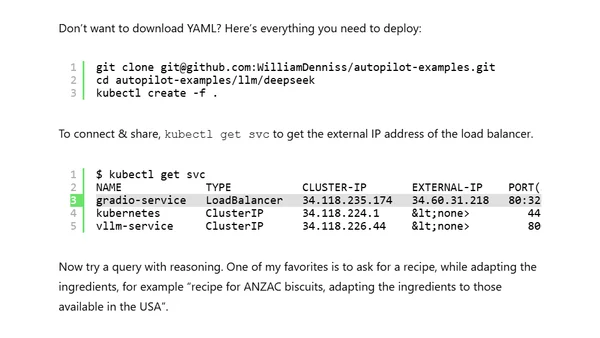

A technical guide on deploying DeepSeek's open reasoning AI models on Google Kubernetes Engine (GKE) using vLLM and a Gradio interface.

Analyzes the limitations of using GPU manufacturer TDP for estimating AI workload energy consumption, highlighting real-world measurement challenges.

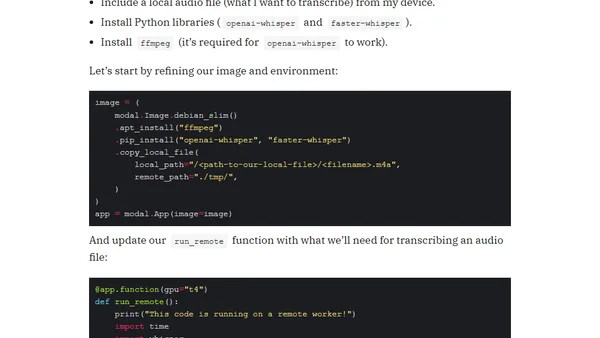

A guide to running Python code on serverless GPU instances using Modal.com for faster machine learning inference, demonstrated with a speech-to-text example.

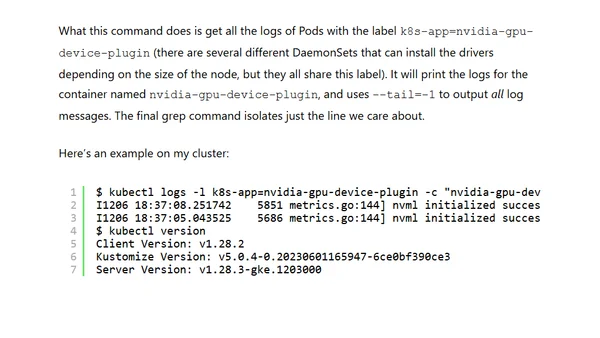

A quick guide to finding the NVIDIA GPU driver version running on a Google Kubernetes Engine (GKE) cluster using a kubectl command.

Exploring how Java code can be executed on GPUs for high-performance computing and machine learning, covering challenges and potential APIs.

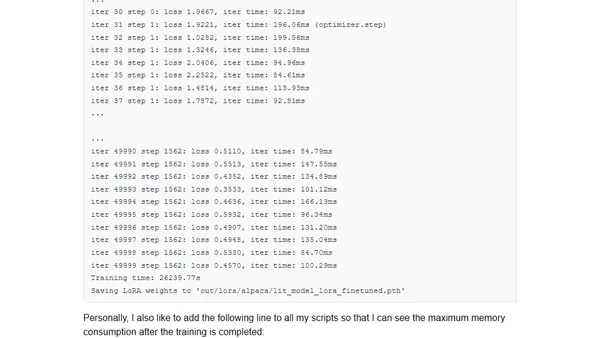

A guide to participating in the NeurIPS 2023 LLM Efficiency Challenge, focusing on efficient fine-tuning of large language models on a single GPU.

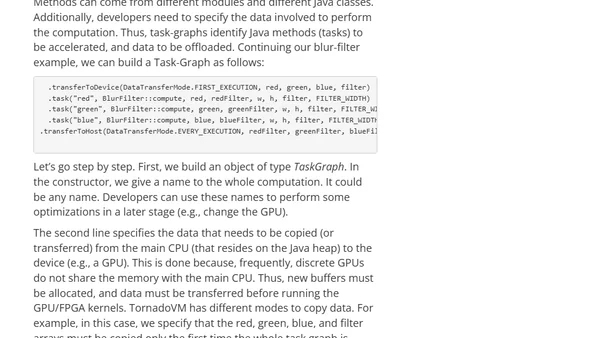

Introduces TornadoVM, an open-source framework for running Java programs on GPUs and FPGAs to boost performance without low-level code.

A technical guide on oversubscribing GPUs in Kubernetes using time slicing for development and light workloads, with setup instructions.

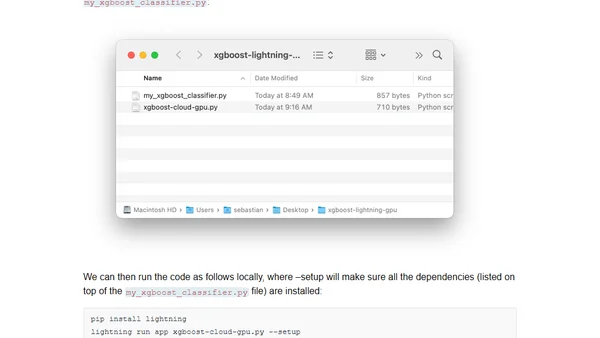

A guide to training XGBoost models on cloud GPUs using the Lightning AI framework, bypassing complex infrastructure setup.

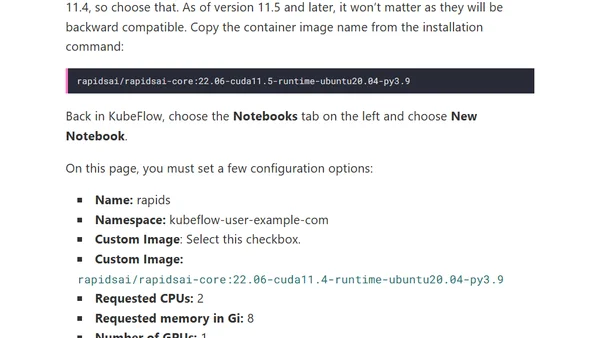

A guide to using RAPIDS to accelerate ETL and data processing workflows within a Kubeflow environment by leveraging GPUs.

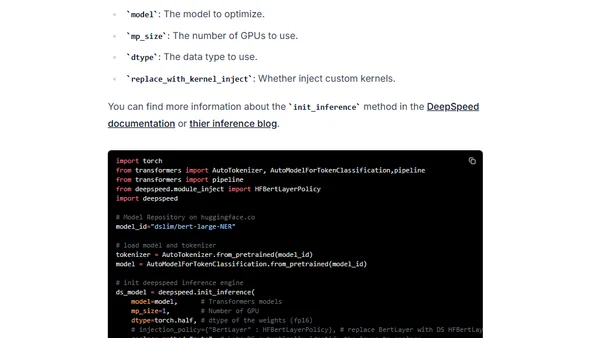

Learn to optimize BERT and RoBERTa models for faster GPU inference using DeepSpeed-Inference, reducing latency from 30 ms to 10 ms.

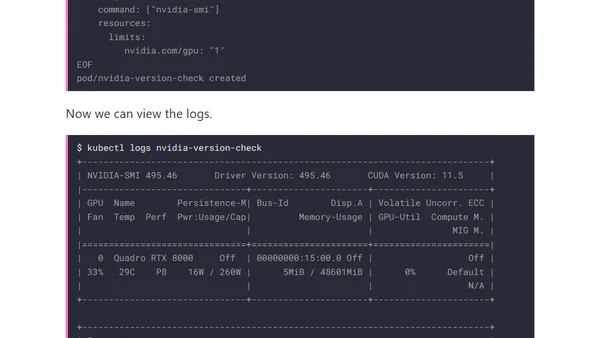

Learn two methods to check NVIDIA driver and CUDA versions on Kubernetes nodes using node labels or running nvidia-smi in a pod.

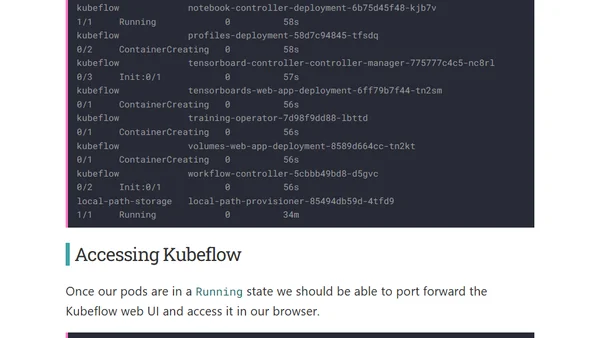

A guide to setting up Kubeflow for MLOps with GPU support in a local kind Kubernetes cluster for development and testing.

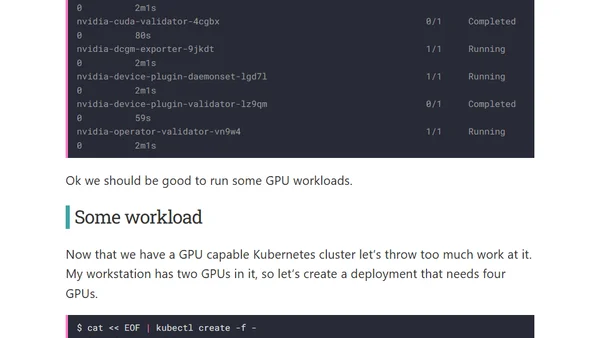

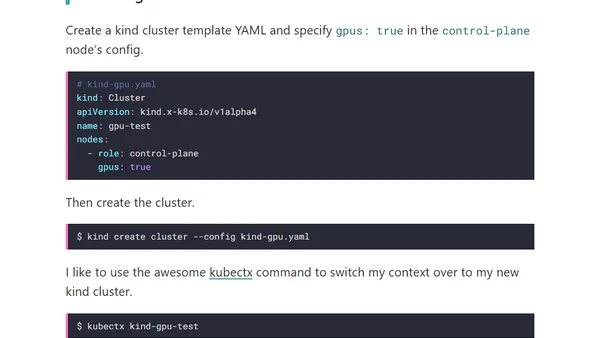

A guide to hacking GPU support into the kind Kubernetes tool for local development and testing with NVIDIA hardware.

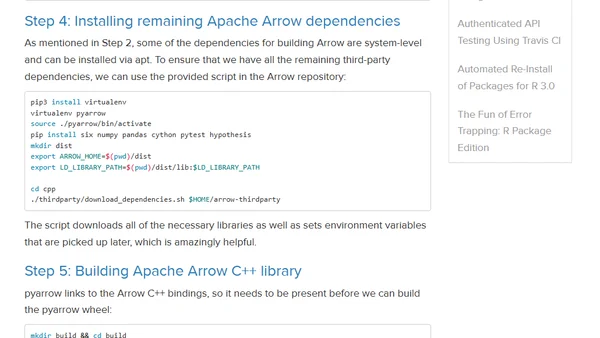

A step-by-step guide to building the pyarrow Python library with CUDA support using Docker on Ubuntu for GPU data processing.

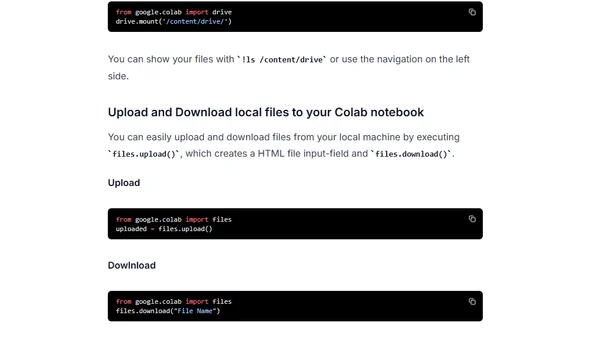

An overview of Google Colab, a free cloud-based Jupyter notebook service with GPU/TPU access for machine learning and data science.