2/1/2022

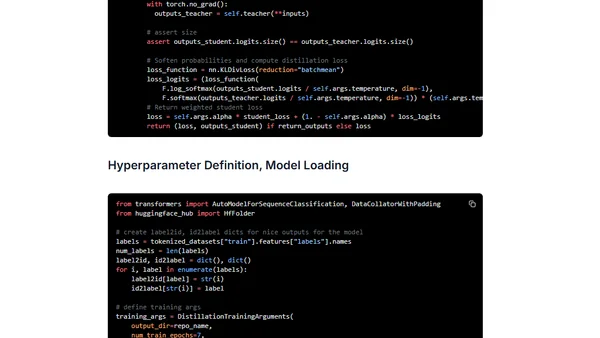

Task-specific knowledge distillation for BERT using Transformers and Amazon SageMaker

A tutorial on using task-specific knowledge distillation to compress a BERT model for text classification with Transformers and Amazon SageMaker.