Optimize open LLMs using GPTQ and Hugging Face Optimum

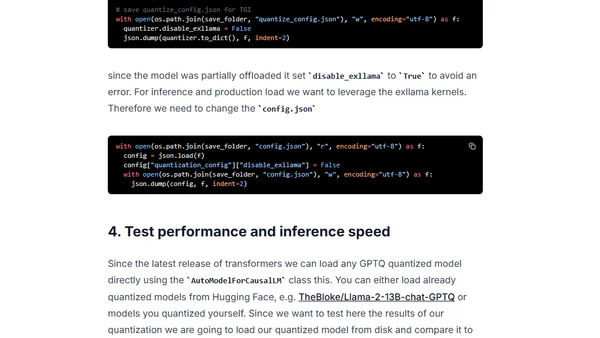

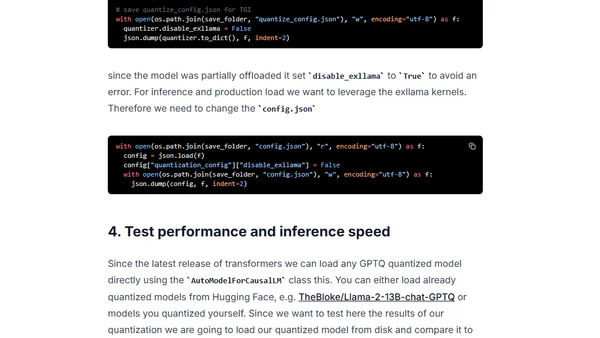

A guide to using GPTQ quantization with Hugging Face Optimum to compress open-source LLMs for efficient deployment on smaller hardware.

A guide to using GPTQ quantization with Hugging Face Optimum to compress open-source LLMs for efficient deployment on smaller hardware.

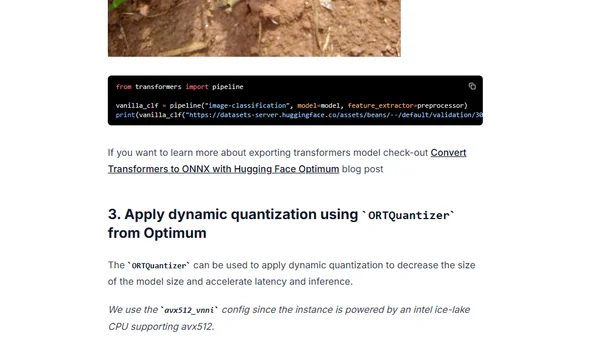

Learn to accelerate Vision Transformer (ViT) models using quantization with Hugging Face Optimum and ONNX Runtime for improved latency.

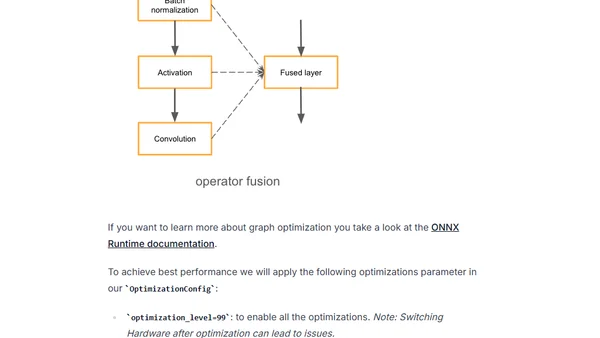

Learn to optimize Hugging Face Transformers models for GPU inference using Optimum and ONNX Runtime to reduce latency.

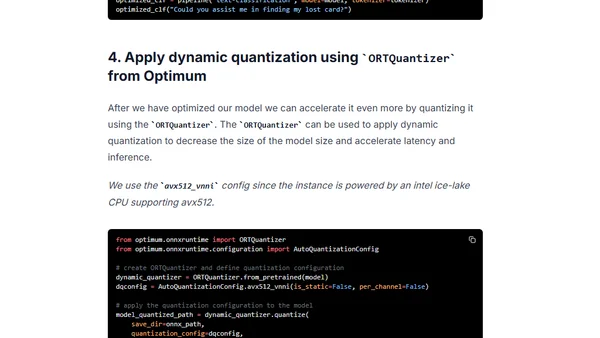

Learn to optimize Hugging Face Transformers models using Optimum and ONNX Runtime for faster inference with dynamic quantization.