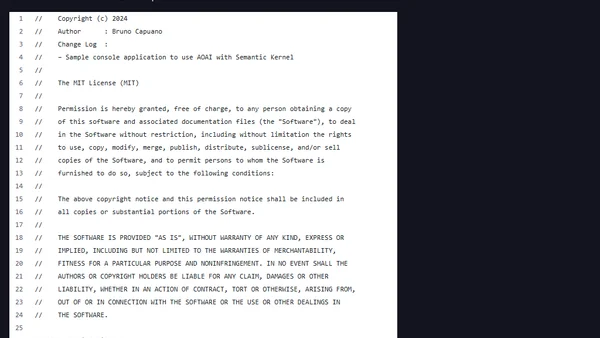

#SemanticKernel: Local LLMs Unleashed on #RaspberryPi 5

A guide to running local LLMs such as Llama 3 and Phi-3 on a Raspberry Pi 5 using Ollama for private, cost-effective AI.

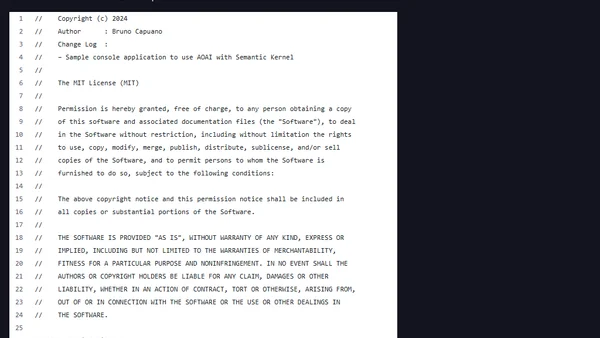

A technical guide on accelerating the Mixtral 8x7B LLM using speculative decoding (Medusa) and quantization (AWQ) for deployment on Amazon SageMaker.

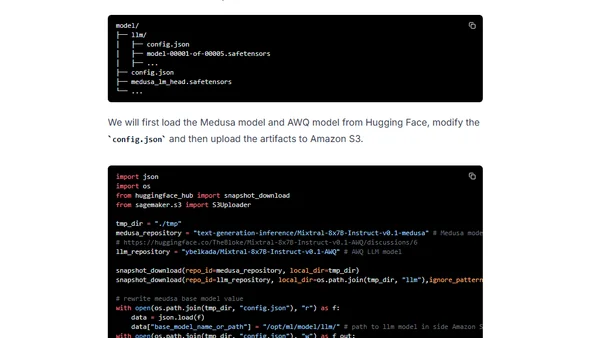

A guide to reducing PNG image file sizes using quantization with the pngquant tool, including command examples and results.

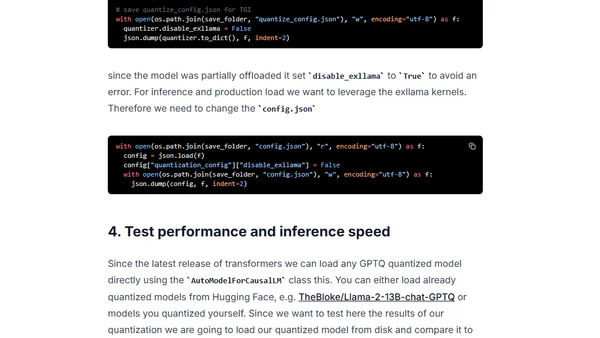

A guide to using GPTQ quantization with Hugging Face Optimum to compress open-source LLMs for efficient deployment on smaller hardware.

Learn to accelerate Vision Transformer (ViT) models using quantization with Hugging Face Optimum and ONNX Runtime for improved latency.

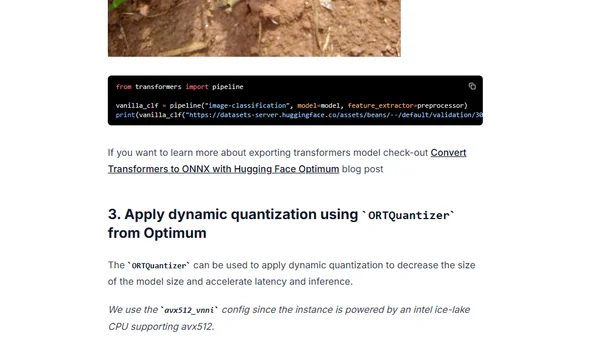

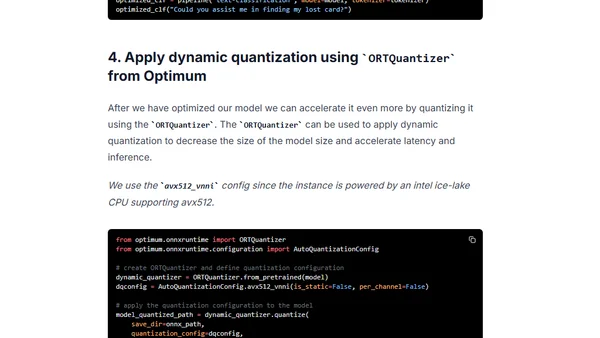

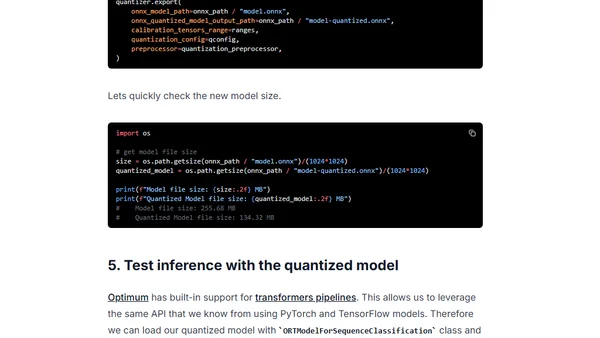

Learn to optimize Hugging Face Transformers models using Optimum and ONNX Runtime for faster inference with dynamic quantization.

Learn how to use Hugging Face Optimum and ONNX Runtime to apply static quantization to a DistilBERT model, achieving a roughly 3x latency improvement.