Quoting Steve Krouse

Explains how MCP servers enable faster development by using LLMs to dynamically read specs, unlike traditional APIs.

Qualcomm enters the data center AI chip market, challenging Nvidia and AMD with new rack-scale processors focused on inference efficiency and memory bandwidth.

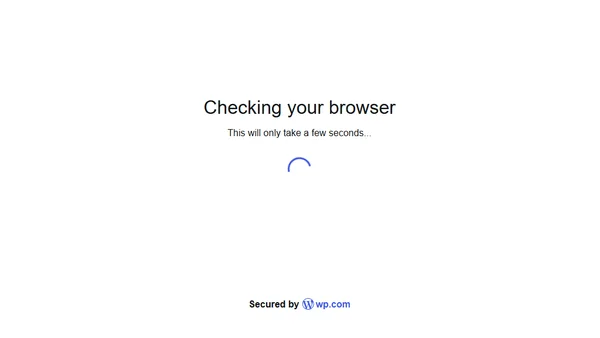

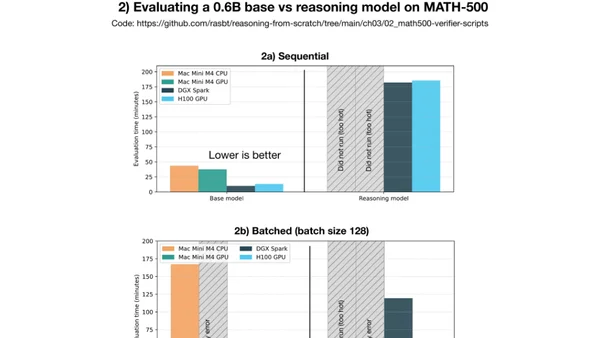

A technical comparison of the DGX Spark and Mac Mini M4 Pro for local PyTorch development and LLM inference, including benchmarks.

Compares DGX Spark and Mac Mini for local PyTorch development, focusing on LLM inference and fine-tuning performance benchmarks.

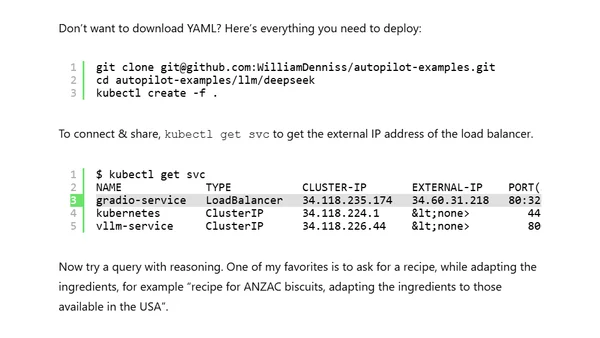

A technical guide on deploying DeepSeek's open reasoning AI models on Google Kubernetes Engine (GKE) using vLLM and a Gradio interface.

A guide on running Large Language Models (LLMs) locally for inference, covering tools like Ollama and Open WebUI for privacy and cost control.

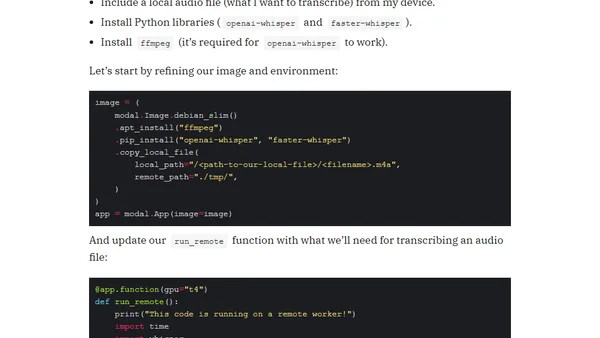

A guide to running Python code on serverless GPU instances using Modal.com for faster machine learning inference, demonstrated with a speech-to-text example.

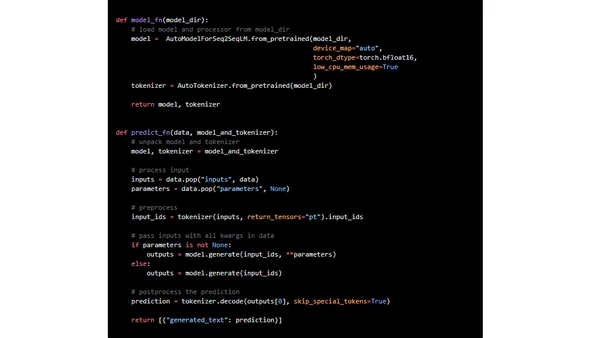

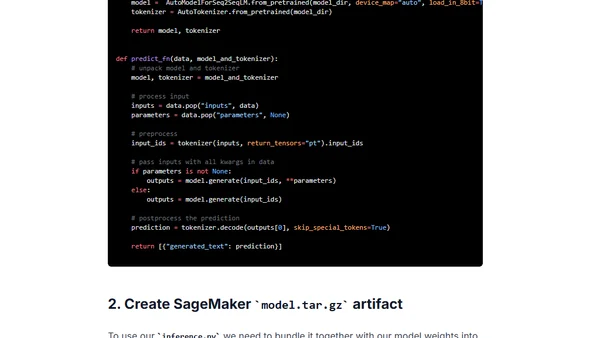

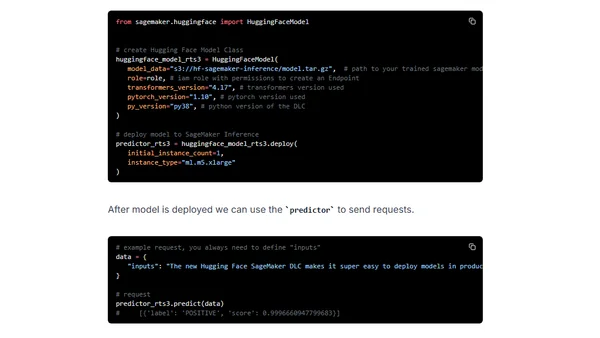

A technical guide on deploying Google's FLAN-UL2 20B large language model for real-time inference using Amazon SageMaker and Hugging Face.

A technical guide on deploying the FLAN-T5-XXL large language model for real-time inference using Amazon SageMaker and Hugging Face.

Compares Amazon SageMaker's four inference options for deploying Hugging Face Transformers models, covering latency, use cases, and pricing.

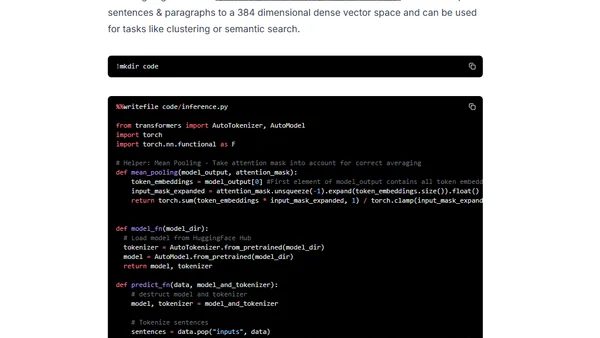

Guide to deploying a Sentence Transformers model on Amazon SageMaker for generating document embeddings using Hugging Face's Inference Toolkit.

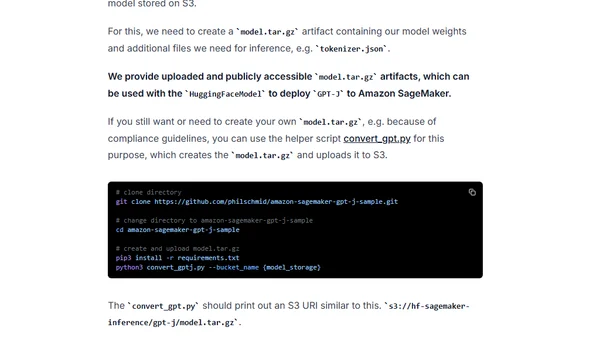

A guide to deploying the GPT-J 6B language model for production inference using Hugging Face Transformers and Amazon SageMaker.

A guide to setting up and using the Google Coral USB TPU Accelerator for faster machine learning inference on Windows 10.

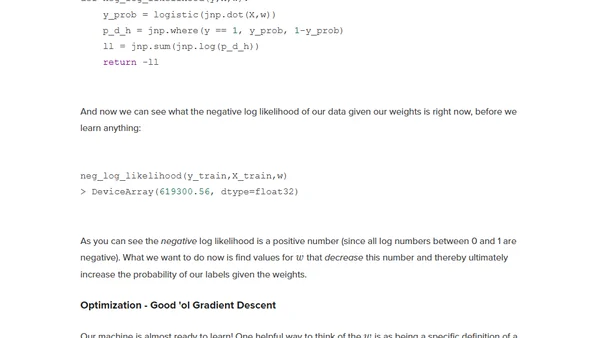

Explores the connection between machine learning and statistics by building a statistical inference model from a neural network example.

Explores the difference between inference and prediction in data modeling, using a Click Through Rate (CTR) example to contrast Machine Learning and Statistics.
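To make the inference side of that contrast concrete, the sketch below treats CTR statistically. It does not predict whether the next impression will be clicked. Instead it estimates each variant's CTR with a normal-approximation (Wald) confidence interval and asks whether two variants plausibly differ, using a pooled two-proportion z-test. This is a minimal illustration, not the linked article's method. The click and impression counts are hypothetical, and the helper names `ctr_with_ci` and `two_proportion_z_test` are made up for this example.

```python
import math


def ctr_with_ci(clicks: int, impressions: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (CTR point estimate, lower bound, upper bound) using a Wald interval."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    p = clicks / impressions
    se = math.sqrt(p * (1 - p) / impressions)  # standard error of a binomial proportion
    return p, max(0.0, p - z * se), min(1.0, p + z * se)


def two_proportion_z_test(c1: int, n1: int, c2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z statistic, two-sided p-value)."""
    pooled = (c1 + c2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (c2 / n2 - c1 / n1) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # P(|Z| > |z|) for a standard normal
    return z, p_value


# Hypothetical counts for two ad variants (illustrative only, not real data).
variants = {"A": (120, 4000), "B": (150, 4100)}
for name, (clicks, imps) in variants.items():
    p, lo, hi = ctr_with_ci(clicks, imps)
    print(f"{name}: CTR={p:.4f}  95% CI=({lo:.4f}, {hi:.4f})")

z, p_value = two_proportion_z_test(*variants["A"], *variants["B"])
print(f"B vs A: z={z:.2f}, two-sided p={p_value:.3f}")
```

A prediction-oriented model would instead output a click probability for each individual impression, typically from a classifier trained on features. The statistical framing above cares about the uncertainty in the population parameter rather than per-impression accuracy.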