Deploy Mixtral 8x7B on AWS Inferentia2 with Hugging Face Optimum

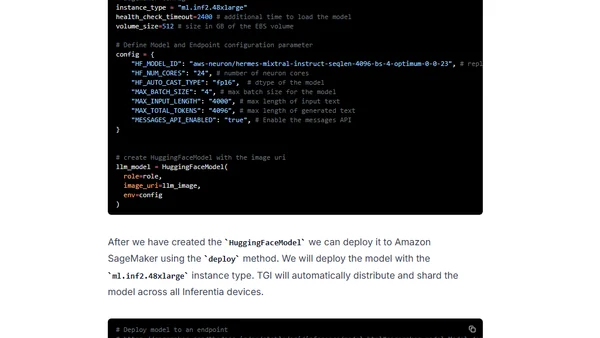

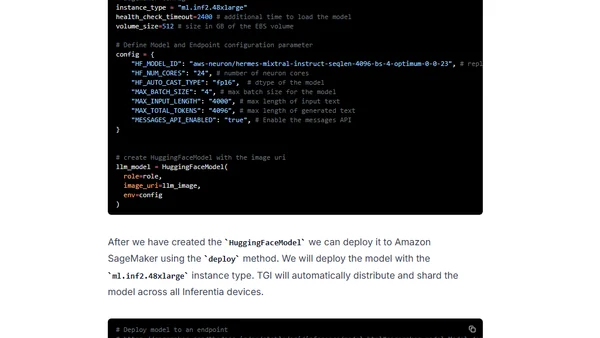

A technical guide on deploying the Mixtral 8x7B LLM on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

A technical guide on deploying the Mixtral 8x7B LLM on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

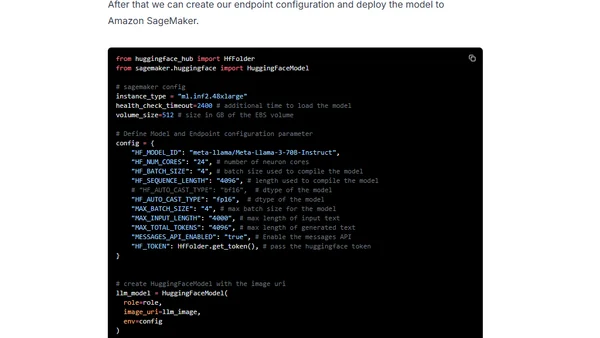

A technical guide on deploying Meta's Llama 3 70B Instruct model on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

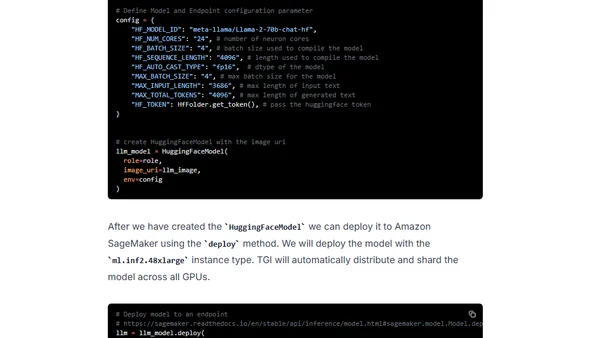

A technical guide on deploying Meta's Llama 2 70B large language model on AWS Inferentia2 hardware using Hugging Face Optimum and SageMaker.

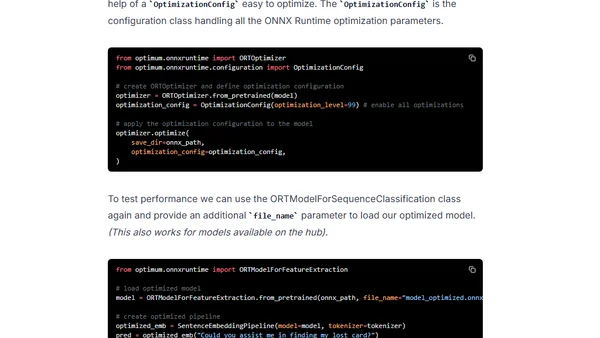

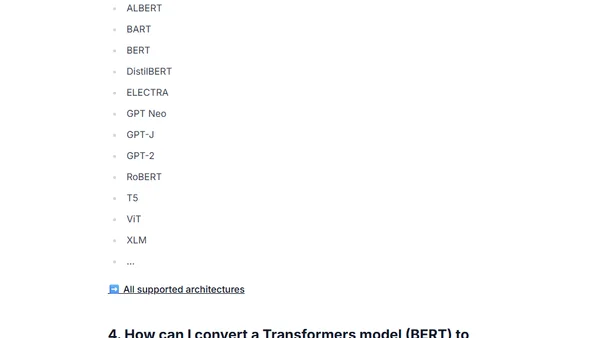

Learn to optimize Sentence Transformers models for faster inference using Hugging Face Optimum, ONNX Runtime, and dynamic quantization.

A guide on converting Hugging Face Transformers models to the ONNX format using the Optimum library for optimized deployment.

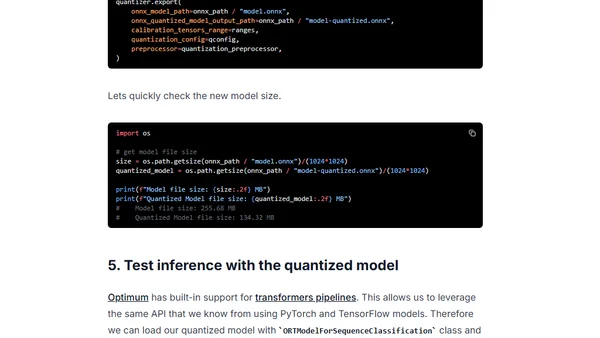

Learn how to use Hugging Face Optimum and ONNX Runtime to apply static quantization to a DistilBERT model, achieving ~3x latency improvements.